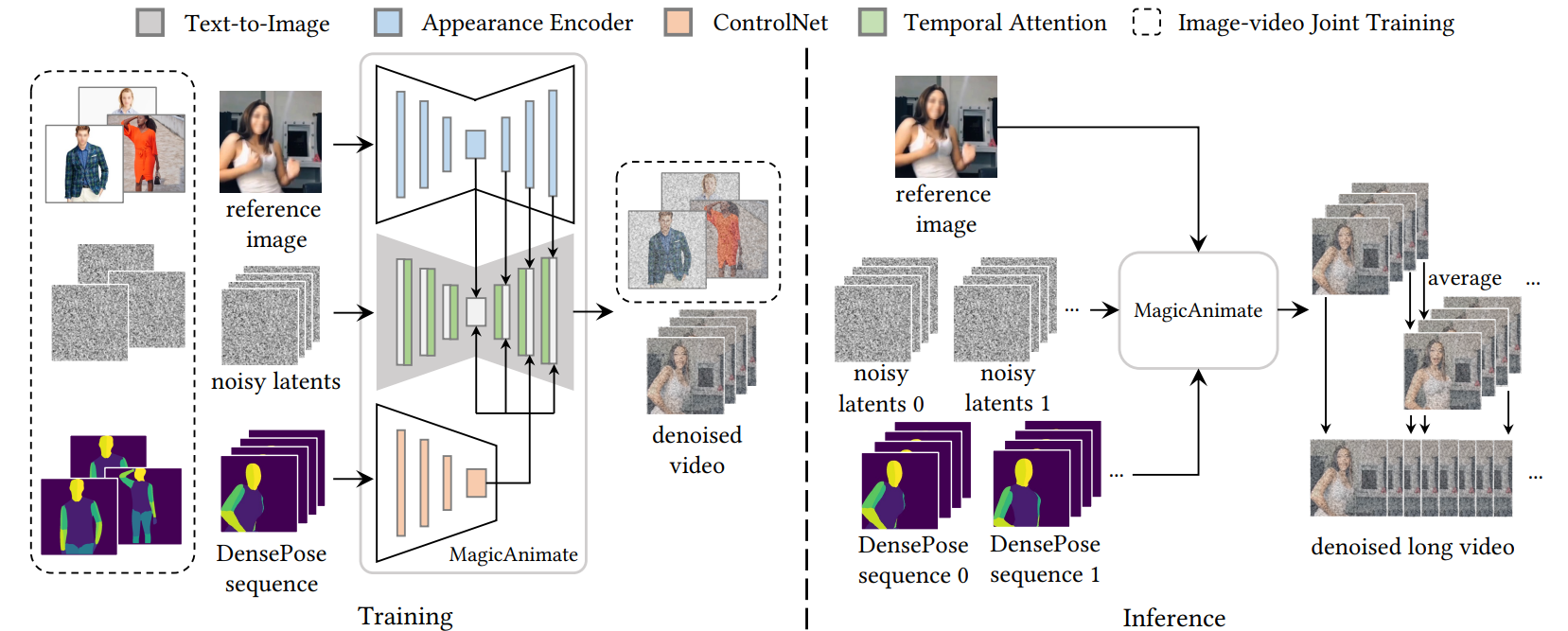

magic-animate

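- `magicanimate/utils/util.py`: new file (mode 0 → 100644)
- `model.properties`: new file (mode 0 → 100644)
- `readme_images/image-1.png`: new file (mode 0 → 100644), 484 KB
- `readme_images/image-5.png`: new file (mode 0 → 100644), 102 KB
- `readme_images/m.gif`: new file (mode 0 → 100644), 638 KB
- `requirements.txt`: new file (mode 0 → 100644)
- `scripts/animate.sh`: new file (mode 0 → 100644)
- `scripts/animate_dist.sh`: new file (mode 0 → 100644)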