No commit message



benchmarks/sonnet.txt (new file, mode 0 → 100644)

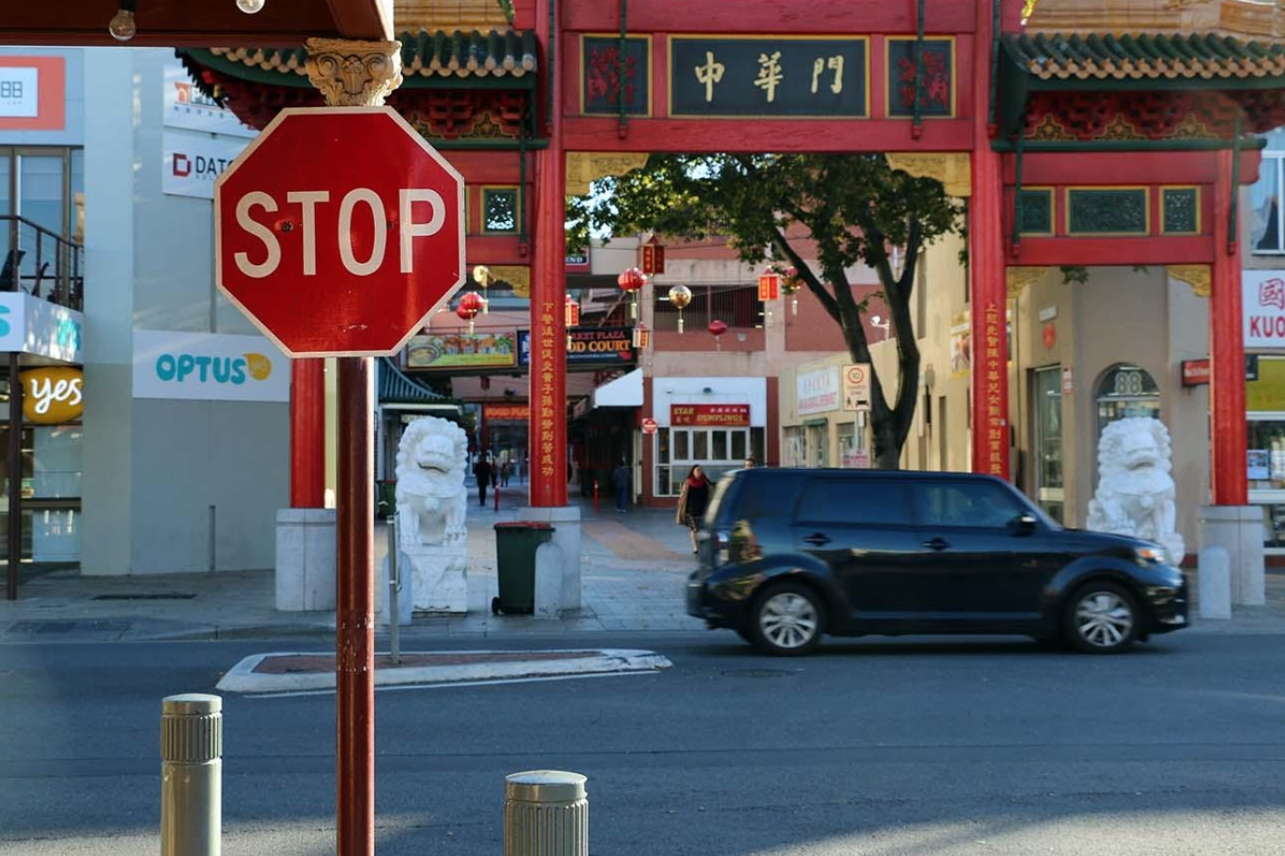

doc/images.png (new file, mode 0 → 100644, 625 KB)

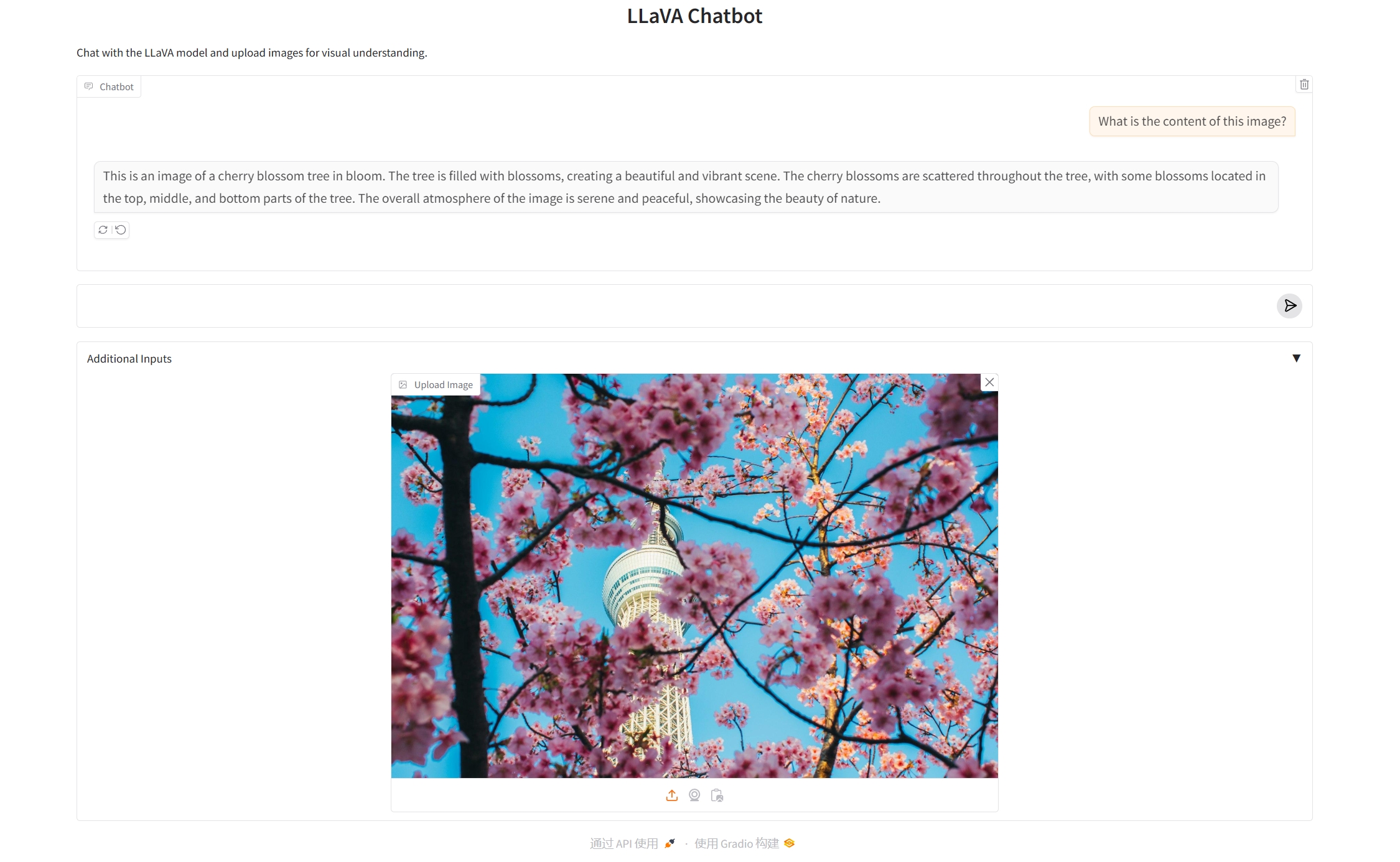

doc/llava_gradio.png (new file, mode 0 → 100644, 788 KB)

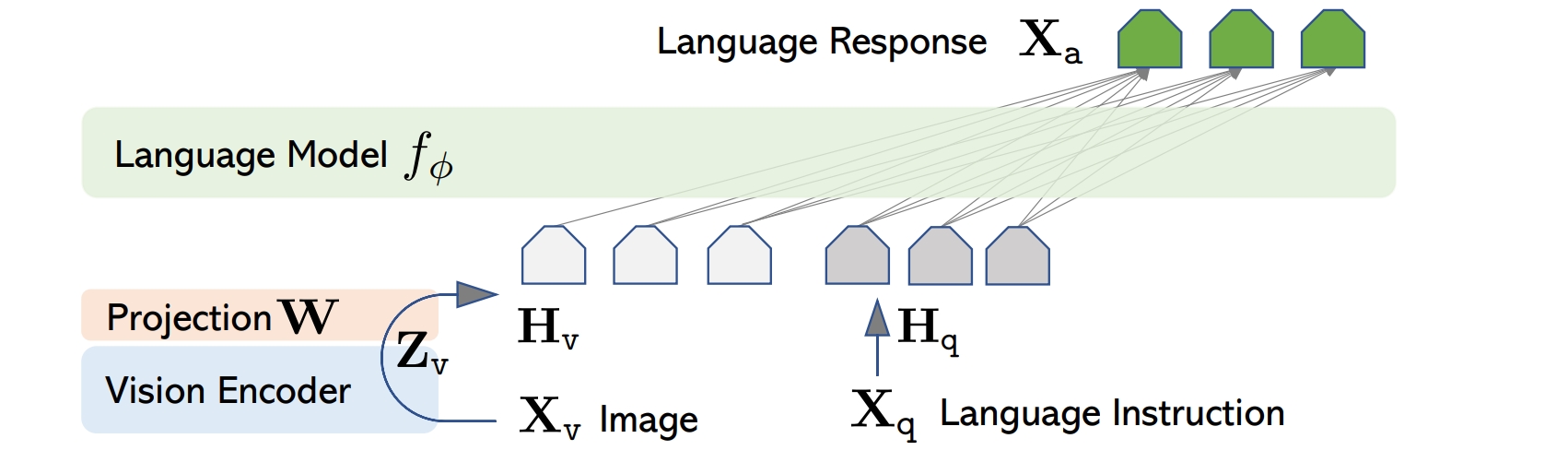

doc/llava_network.png (new file, mode 0 → 100644, 166 KB)

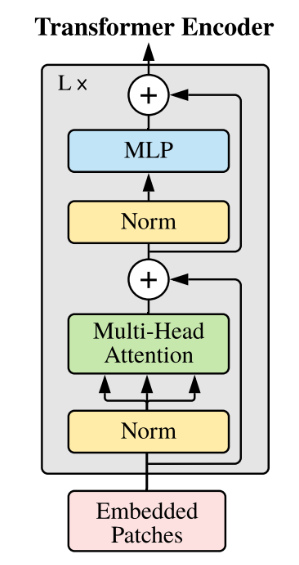

doc/qwen1.5.jpg (new file, mode 0 → 100644, 32.7 KB)

doc/qwen1.5.png (new file, mode 0 → 100644, 112 KB)

examples/api_client.py (new file, mode 0 → 100644)

examples/aqlm_example.py (new file, mode 0 → 100644)

examples/fp8/README.md (new file, mode 0 → 100644)

examples/gradio_webserver.py (new file, mode 0 → 100644)

examples/llava_example.py (new file, mode 0 → 100644)