v1.0

Showing

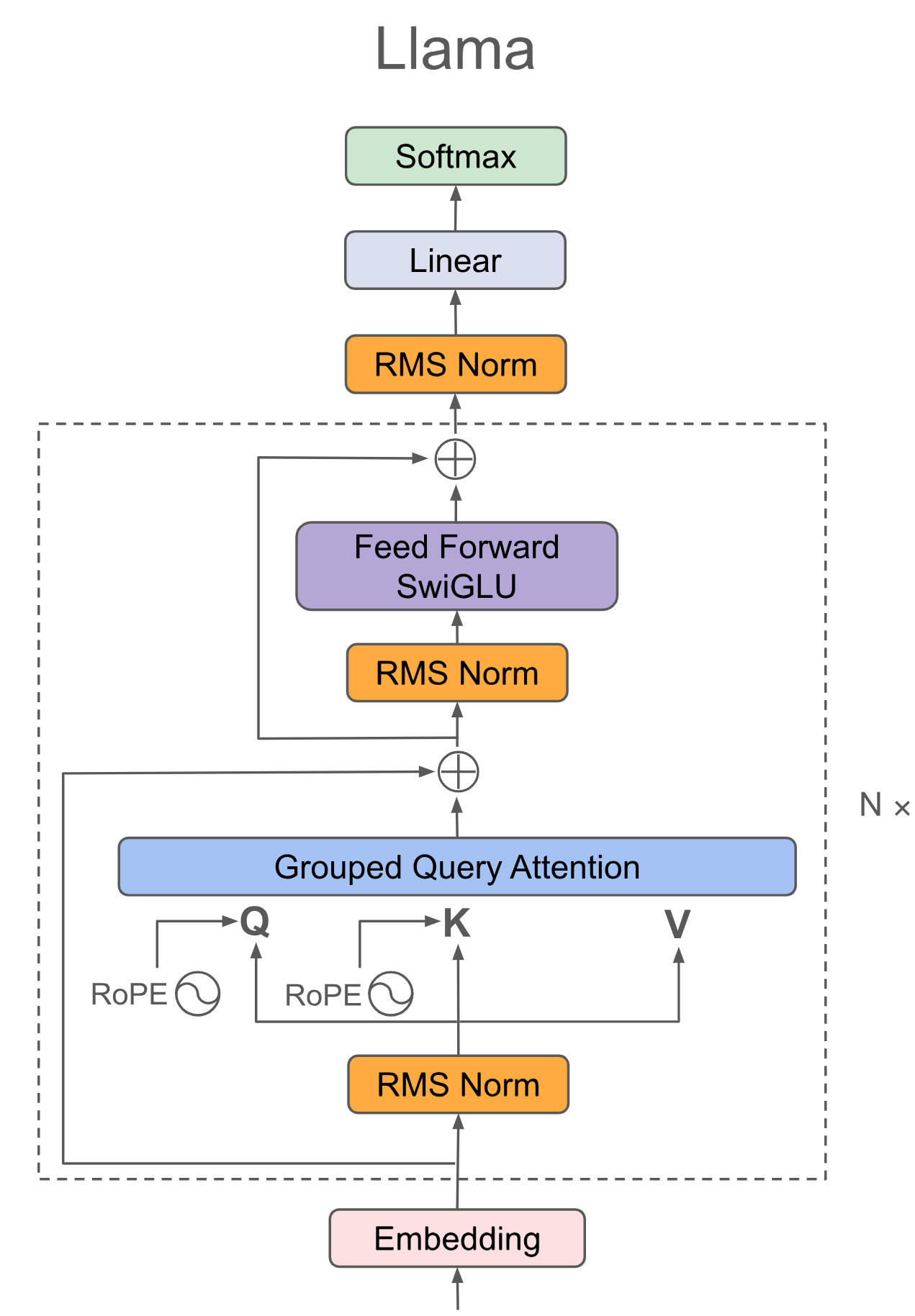

doc/llama3.png

0 → 100644

334 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

docker_start.sh

0 → 100644

evaluation/ceval/ceval.py

0 → 100644

evaluation/ceval/ceval.zip

0 → 100644

File added

evaluation/cmmlu/cmmlu.py

0 → 100644

evaluation/cmmlu/cmmlu.zip

0 → 100644

File added

evaluation/mmlu/mapping.json

0 → 100644

evaluation/mmlu/mmlu.py

0 → 100644

evaluation/mmlu/mmlu.zip

0 → 100644

File added

examples/README.md

0 → 100644