# Jina-Embeddings-V3

## Paper

`jina-embeddings-v3: Multilingual Embeddings With Task LoRA`

- https://arxiv.org/abs/2409.10173

## Model Architecture

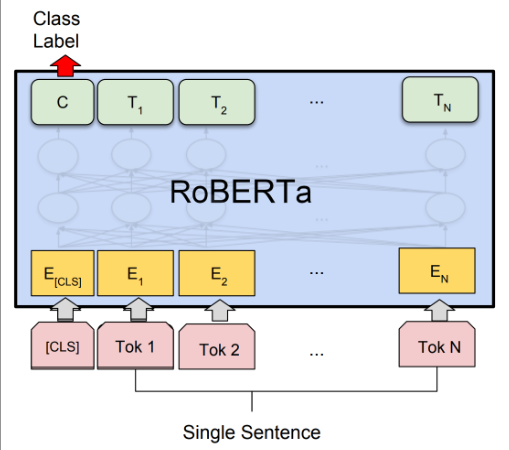

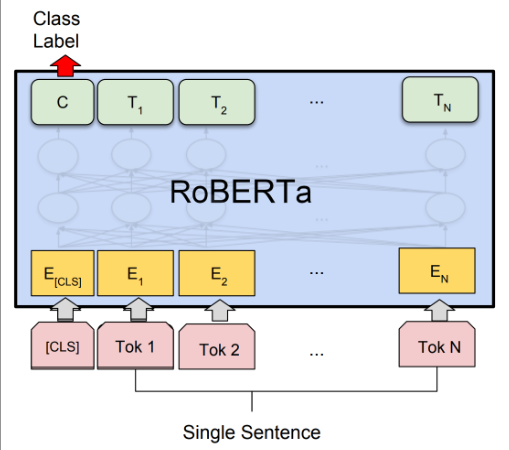

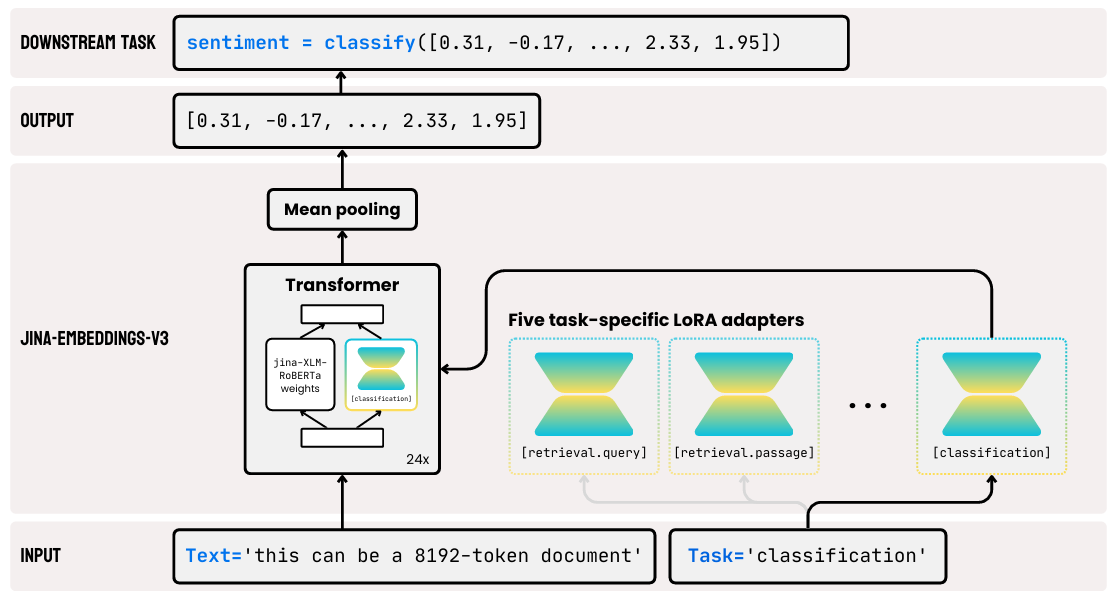

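jina-embeddings-v3 is built on the XLM-RoBERTa architecture. It integrates rotary position embeddings (RoPE) to support long text inputs, and it provides dedicated low-rank adapters (LoRA) for tasks such as retrieval and classification, so that the embeddings it produces are task-specific.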
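The task-adapter idea can be illustrated with a small sketch: a frozen base weight `W` is shared by all tasks, and each task adds its own low-rank update `B_t A_t`. This is a toy illustration in plain Python, not the model's actual implementation; the class name `TaskLoRALinear`, the `alpha / r` scaling, and the example weights are all assumptions chosen for readability.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class TaskLoRALinear:
    """Toy linear layer: y = W x + (alpha / r) * B_t (A_t x), with (A_t, B_t) picked per task."""

    def __init__(self, weight, adapters, alpha=1.0):
        self.weight = weight      # frozen base weight, shape (out, in)
        self.adapters = adapters  # task name -> (A, B); A is (r, in), B is (out, r)
        self.alpha = alpha        # hypothetical scaling hyperparameter

    def forward(self, x, task):
        base = matvec(self.weight, x)
        a, b = self.adapters[task]
        rank = len(a)
        update = matvec(b, matvec(a, x))  # low-rank path: project down, then back up
        scale = self.alpha / rank
        return [y + scale * u for y, u in zip(base, update)]


# Made-up weights: an identity base layer and two rank-1 adapters.
layer = TaskLoRALinear(
    weight=[[1.0, 0.0], [0.0, 1.0]],
    adapters={
        "retrieval": ([[1.0, 1.0]], [[0.5], [0.0]]),
        "classification": ([[1.0, -1.0]], [[0.0], [0.5]]),
    },
)
x = [2.0, 1.0]
retrieval_out = layer.forward(x, "retrieval")
classification_out = layer.forward(x, "classification")
```

The same input yields a different output for each task name, while the base weight is never modified.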

## Algorithm

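Training proceeds in three stages. First, the model is pretrained with multilingual masked language modeling (MLM). Second, it is fine-tuned with contrastive learning on a large collection of text pairs. Third, the backbone is frozen and each LoRA adapter is trained independently with its own task-specific data and loss function (for example InfoNCE or CoSENT); synthetic data is also used at this stage to address specific retrieval failure cases.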
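As a rough illustration of the contrastive objective, the sketch below computes a one-directional InfoNCE loss with in-batch negatives: `documents[i]` is treated as the positive for `queries[i]`, and every other document in the batch is a negative. The temperature value, the one-directional form, and the function names are assumptions for this example and are not taken from the training code.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(queries, documents, temperature=0.05):
    """Mean cross-entropy of picking documents[i] for queries[i] among all documents."""
    losses = []
    for i, query in enumerate(queries):
        logits = [cosine(query, doc) / temperature for doc in documents]
        # Numerically stable log-sum-exp over the batch.
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(
            sum(math.exp(logit - max_logit) for logit in logits)
        )
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)


# Toy batch: the loss is small when pairs are aligned and large when they are swapped.
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = info_nce(queries, [[1.0, 0.0], [0.0, 1.0]])
swapped_loss = info_nce(queries, [[0.0, 1.0], [1.0, 0.0]])
```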

## Environment Setup

### Hardware Requirements

DCU model: K100_AI; nodes: 1; cards: 1.

### Docker (Option 1)

```bash
docker pull image.sourcefind.cn:5000/dcu/admin/base/custom:vllm0.8.5-ubuntu22.04-dtk25.04-rc7-das1.5-py3.10-20250612-fixpy-rocblas0611-rc2
docker run -it --shm-size 200g --network=host --name {docker_name} --privileged --device=/dev/kfd --device=/dev/dri --device=/dev/mkfd --group-add video --cap-add=SYS_PTRACE --security-opt seccomp=unconfined -u root -v /path/your_code_data/:/path/your_code_data/ -v /opt/hyhal/:/opt/hyhal/:ro {imageID} bash
cd /your_code_path/jina-embeddings-v3_vllm
```

### Dockerfile (Option 2)

The Dockerfile can be used as follows:

```bash
cd docker
docker build --no-cache -t jina-embeddings-v3:latest .
docker run -it --shm-size 200g --network=host --name {docker_name} --privileged --device=/dev/kfd --device=/dev/dri --device=/dev/mkfd --group-add video --cap-add=SYS_PTRACE --security-opt seccomp=unconfined -u root -v /path/your_code_data/:/path/your_code_data/ -v /opt/hyhal/:/opt/hyhal/:ro {imageID} bash
cd /your_code_path/jina-embeddings-v3_vllm
```

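### Anaconda (Option 3)

The DCU-specific deep learning libraries required by this project can be downloaded and installed from the [Guanghe (光合)](https://developer.sourcefind.cn/tool/) developer community.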

```
DTK: 25.04
python: 3.10
vllm: 0.8.5
torch: 2.4.1+das.opt2.dtk2504
```

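`Tips: the versions of the DTK driver, PyTorch, and other DCU-related tools listed above must match each other exactly.`

Install the remaining, non-deep-learning libraries as follows: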

```bash
# Quote the requirement so the shell does not treat ">=" as an output redirection
pip install "transformers>=4.51.1"
```
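Because the versions need to line up, it can help to check what is actually installed before running inference. The sketch below uses the standard-library `importlib.metadata` to read an installed version and compares only the leading numeric release segments, so a local suffix such as `+das.opt2.dtk2504` is ignored. The helper names `release_tuple`, `meets_minimum`, and `check_installed` are hypothetical, and pre-release tags (for example `rc1`) are not handled.

```python
from importlib import metadata


def release_tuple(version):
    """Turn '2.4.1+das.opt2.dtk2504' into (2, 4, 1); anything after '+' is ignored."""
    public = version.split("+", 1)[0]
    parts = []
    for piece in public.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare two version strings by their numeric release segments only."""
    return release_tuple(installed) >= release_tuple(minimum)


def check_installed(package, minimum):
    """Return True if the package is installed and at least the minimum version."""
    try:
        installed = metadata.version(package)
    except metadata.PackageNotFoundError:
        return False
    return meets_minimum(installed, minimum)
```

For example, `check_installed("transformers", "4.51.1")` returns `False` when the package is missing or older than the requirement above.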

## Dataset

None

## Training

None

## Inference

### Inference with vLLM

```bash
# The HF_ENDPOINT environment variable must be set
export HF_ENDPOINT=https://hf-mirror.com
# --model: model name or local path
python ./infer/infer_vllm.py --model /path/your_model_path/
```

### Result

```

Generated Outputs:

Only text matching task is supported for now. See #16120

------------------------------------------------------------

Prompt: 'Follow the white rabbit.'

Embeddings for text matching: [-0.142578125, -0.050537109375, 0.01336669921875, 0.046142578125, 0.0810546875, 0.03564453125, -0.00091552734375, 0.058837890625, -0.04833984375, -0.032958984375, -0.07275390625, 0.0625, -0.08154296875, 0.0634765625, -0.0849609375, -0.02685546875, ...] (size=1024)

------------------------------------------------------------

Prompt: 'Sigue al conejo blanco.'

Embeddings for text matching: [-0.048828125, -0.04833984375, -0.045166015625, -0.0255126953125, 0.1357421875, -0.0267333984375, -0.0021209716796875, 0.052734375, -0.08837890625, 0.006561279296875, -0.02978515625, 0.0017242431640625, -0.03955078125, 0.08544921875, -0.1181640625, 0.0634765625, ...] (size=1024)

------------------------------------------------------------

Prompt: 'Suis le lapin blanc.'

Embeddings for text matching: [-0.1328125, -0.0458984375, -0.08154296875, 0.0162353515625, 0.07421875, 0.01019287109375, 0.054931640625, 0.031005859375, -0.08837890625, 0.043212890625, 0.0439453125, 0.08154296875, -0.1318359375, -0.0167236328125, -0.0927734375, -0.0111083984375, ...] (size=1024)

------------------------------------------------------------

Prompt: '跟着白兔走。'

Embeddings for text matching: [-0.0213623046875, -0.146484375, 0.0128173828125, 0.0194091796875, 0.138671875, -0.04931640625, -0.10400390625, 0.0849609375, -0.08203125, 0.017578125, -0.030029296875, 0.134765625, -0.0908203125, -0.047119140625, -0.0625, 0.033203125, ...] (size=1024)

------------------------------------------------------------

Prompt: 'اتبع الأرنب الأبيض.'

Embeddings for text matching: [-0.095703125, -0.0478515625, -0.055419921875, 0.020263671875, 0.0712890625, -0.0086669921875, 0.04541015625, 0.038818359375, 0.021484375, 0.034423828125, -0.01019287109375, 0.00885009765625, -0.1015625, 0.04541015625, -0.11474609375, 0.02099609375, ...] (size=1024)

------------------------------------------------------------

Prompt: 'Folge dem weißen Kaninchen.'

Embeddings for text matching: [-0.07421875, -0.06787109375, -0.006988525390625, 0.00023555755615234375, 0.1455078125, 0.00689697265625, 0.0007781982421875, 0.0712890625, -0.138671875, 0.01513671875, -0.055908203125, 0.055908203125, -0.060546875, 0.08984375, -0.10107421875, 0.008544921875, ...] (size=1024)

所有嵌入已保存到: ./infer/embeddings_K100_AI.npy

```
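The six prompts above are the same sentence in different languages, so one simple sanity check is that their embeddings have high pairwise cosine similarity. The sketch below shows that computation on toy 3-dimensional vectors; the vectors are made up for illustration and are not model output, and the function names are hypothetical. With the saved `.npy` file, the rows of the loaded array would take the place of `toy`.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def similarity_matrix(embeddings):
    """Pairwise cosine similarities; entry [i][j] compares embeddings i and j."""
    return [[cosine_similarity(u, v) for v in embeddings] for u in embeddings]


# Toy stand-ins for embedding rows (not real model output).
toy = [[1.0, 0.0, 0.0], [0.8, 0.6, 0.0], [0.0, 0.0, 1.0]]
matrix = similarity_matrix(toy)
```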

### Accuracy

```
# Before running acc.py, run infer_vllm.py on both the DCU and the GPU to obtain each device's embeddings
python ./infer/acc.py --gpu_embeddings ./infer/embeddings_A800.npy --dcu_embeddings ./infer/embeddings_K100_AI.npy
```

Results

```

abs_diff:

[[0.00097656 0.00048828 0.00036621 ... 0.00024414 0.00079346 0. ]

[0. 0.00024414 0.00024414 ... 0.00036049 0.00012207 0. ]

[0.00097656 0. 0. ... 0.00024414 0.00061035 0.00012207]

[0.00158691 0.00097656 0.00073242 ... 0.00036621 0.00061035 0. ]

[0. 0. 0. ... 0. 0.00018311 0.00097656]

[0. 0.00097656 0.00057983 ... 0.00015259 0. 0.00061035]]

mean_abs_diff:

[0.00028698 0.00033706 0.00036549 0.00031435 0.00039574 0.00033835]

```

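The DCU and GPU embeddings agree closely: the mean absolute difference per prompt is between about 2.9e-4 and 4.0e-4. Inference framework: vLLM.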
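The contents of `acc.py` are not shown here, but output of the shape above (an element-wise matrix plus one mean per prompt) can be produced by a computation like the following. This is a plain-Python sketch on nested lists with hypothetical function names, and the `tolerance` default is an arbitrary illustrative threshold. With NumPy arrays, the same quantities are `np.abs(gpu - dcu)` and its `mean(axis=1)`.

```python
def abs_diff(reference, candidate):
    """Element-wise |reference - candidate| for two equally shaped 2-D lists."""
    if len(reference) != len(candidate):
        raise ValueError("row counts differ")
    result = []
    for ref_row, cand_row in zip(reference, candidate):
        if len(ref_row) != len(cand_row):
            raise ValueError("row lengths differ")
        result.append([abs(r - c) for r, c in zip(ref_row, cand_row)])
    return result


def mean_abs_diff(reference, candidate):
    """One mean absolute difference per row (that is, per prompt)."""
    return [sum(row) / len(row) for row in abs_diff(reference, candidate)]


def within_tolerance(reference, candidate, tolerance=1e-3):
    """True if every per-row mean difference is at or below the tolerance."""
    return all(m <= tolerance for m in mean_abs_diff(reference, candidate))


# Toy values standing in for GPU and DCU embeddings.
gpu = [[0.5, -0.25], [0.125, 0.0]]
dcu = [[0.5, -0.125], [0.0, 0.0]]
diff = abs_diff(gpu, dcu)
means = mean_abs_diff(gpu, dcu)
```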

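## Application Scenarios

### Algorithm Category

`Text understanding`

### Key Application Industries

`Manufacturing, finance, education`

## Pretrained Weights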

- [jina-embeddings-v3](https://huggingface.co/jinaai/jina-embeddings-v3)

## Source Repository and Issue Feedback

- https://developer.sourcefind.cn/codes/modelzoo/jina-embeddings-v3_vllm

## References

- https://github.com/jina-ai