ip-adapter

Showing

This diff is collapsed.

This diff is collapsed.

ip_adapter/resampler.py

0 → 100644

ip_adapter/test_resampler.py

0 → 100644

ip_adapter/utils.py

0 → 100644

This diff is collapsed.

ip_adapter_demo.ipynb

0 → 100644

This diff is collapsed.

This diff is collapsed.

ip_adapter_sdxl_demo.ipynb

0 → 100644

This diff is collapsed.

This diff is collapsed.

ip_adapter_t2i_demo.ipynb

0 → 100644

prepare_for_training.py

0 → 100644

pyproject.toml

0 → 100644

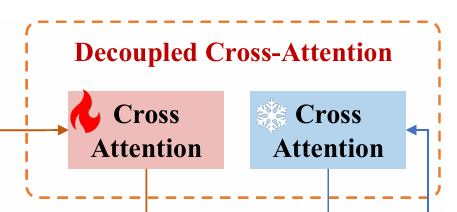

readme_imgs/alg.png

0 → 100644

19 KB

readme_imgs/input.png

0 → 100644

223 KB

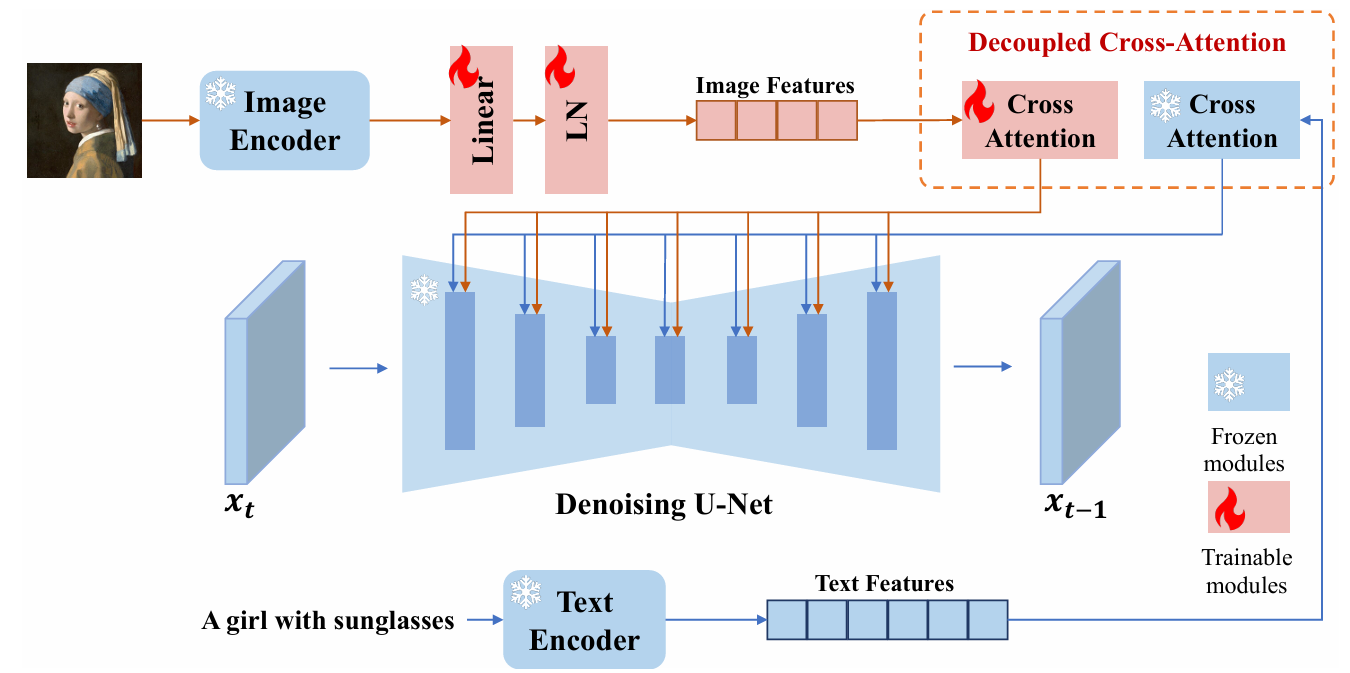

readme_imgs/model.png

0 → 100644

119 KB

readme_imgs/result.png

0 → 100644

2.76 MB