hyi2v

Showing

hyvideo/utils/file_utils.py

0 → 100644

hyvideo/utils/helpers.py

0 → 100644

hyvideo/utils/lora_utils.py

0 → 100644

hyvideo/utils/train_utils.py

0 → 100644

hyvideo/vae/__init__.py

0 → 100644

hyvideo/vae/vae.py

0 → 100644

icon.png

0 → 100644

70.5 KB

model.properties

0 → 100644

modified/config.py

0 → 100644

modified/envs.py

0 → 100644

modified/fix.sh

0 → 100644

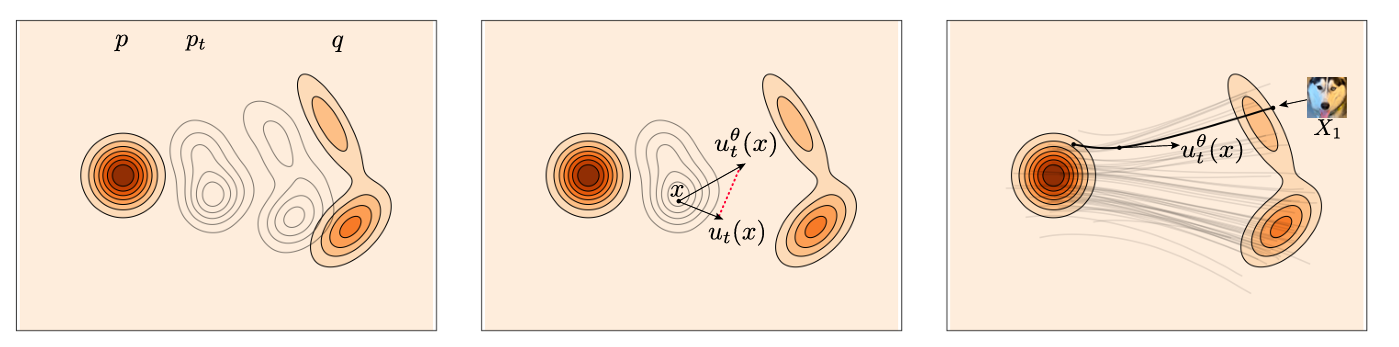

readme_imgs/alg.png

0 → 100644

189 KB

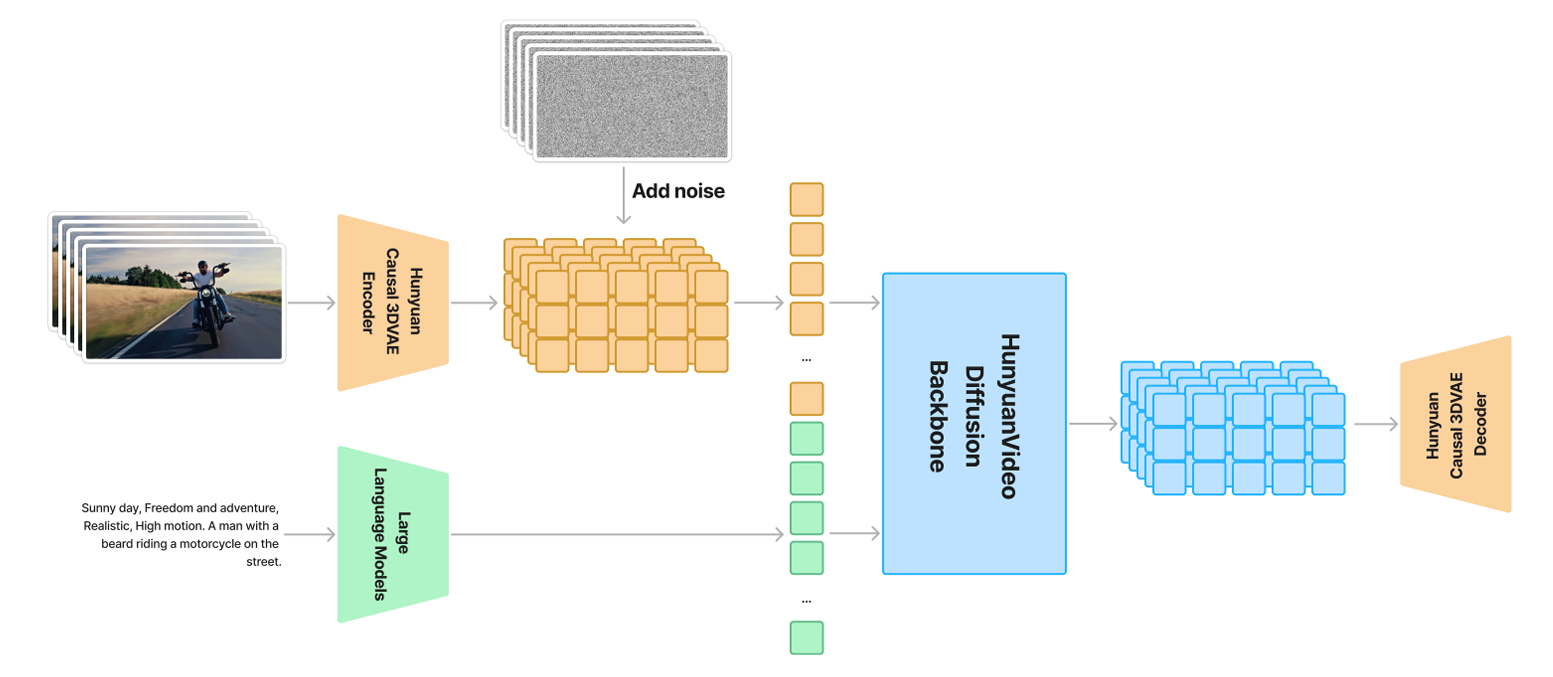

readme_imgs/arch.png

0 → 100644

247 KB

readme_imgs/hy_i2v.gif

0 → 100644

This image diff could not be displayed because it is too large. You can view the blob instead.

requirements.txt

0 → 100644

| opencv-python==4.9.0.80 | ||

| diffusers==0.31.0 | ||

| accelerate==1.1.1 | ||

| pandas==2.0.3 | ||

| # numpy==1.24.4 | ||

| einops==0.7.0 | ||

| tqdm==4.66.2 | ||

| loguru==0.7.2 | ||

| imageio==2.34.0 | ||

| imageio-ffmpeg==0.5.1 | ||

| safetensors==0.4.3 | ||

| peft==0.13.2 | ||

| transformers==4.39.3 | ||

| tokenizers==0.15.0 | ||

| # deepspeed==0.15.1 | ||

| pyarrow==14.0.1 | ||

| tensorboard==2.19.0 | ||

| # git+https://github.com/openai/CLIP.git |

sample_image2video.py

0 → 100644