huatuogpt-o1

Dockerfile

0 → 100644

README.md

0 → 100644

README_official.md

0 → 100644

RL_stage2.py

0 → 100644

SFT_stage1.py

0 → 100644

assets/paper.pdf

0 → 100644

File added

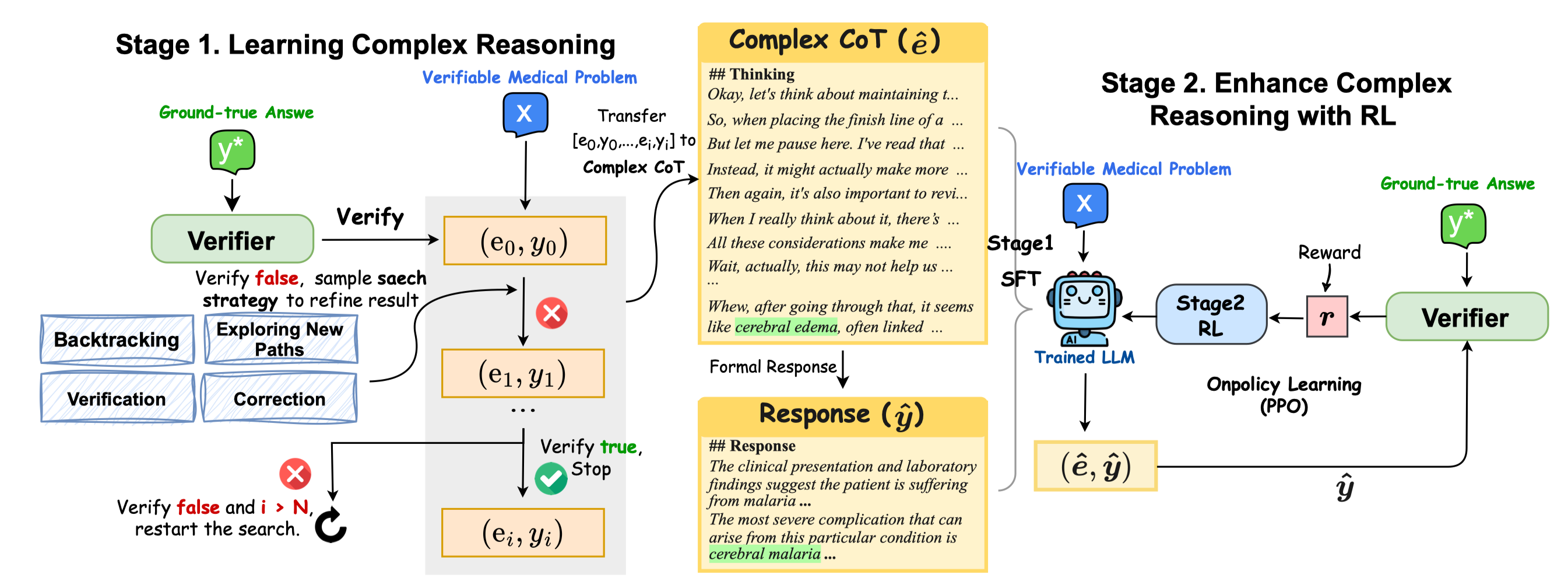

assets/pic1.jpg

0 → 100644

636 KB

configs/deepspeed_zero3.yaml

0 → 100644

data/demo_data.json

0 → 100644

This diff is collapsed.

evaluation/eval.py

0 → 100644

evaluation/scorer.py

0 → 100644

icon.png

0 → 100644

53.8 KB

inferences/vllm_offline.py

0 → 100644

model.properties

0 → 100644

ppo_utils/__init__.py

0 → 100644