"vscode:/vscode.git/clone" did not exist on "104fcea0c8672b138a9bdd1ae00603c9240867c1"

graphcast

.gitignore

0 → 100644

CONTRIBUTING copy.md

0 → 100755

CONTRIBUTING.md

0 → 100644

Dockerfile

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_official.md

0 → 100644

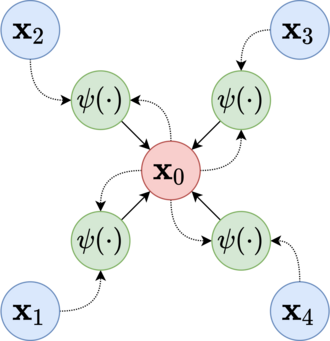

asset/alg.png

0 → 100644

42.7 KB

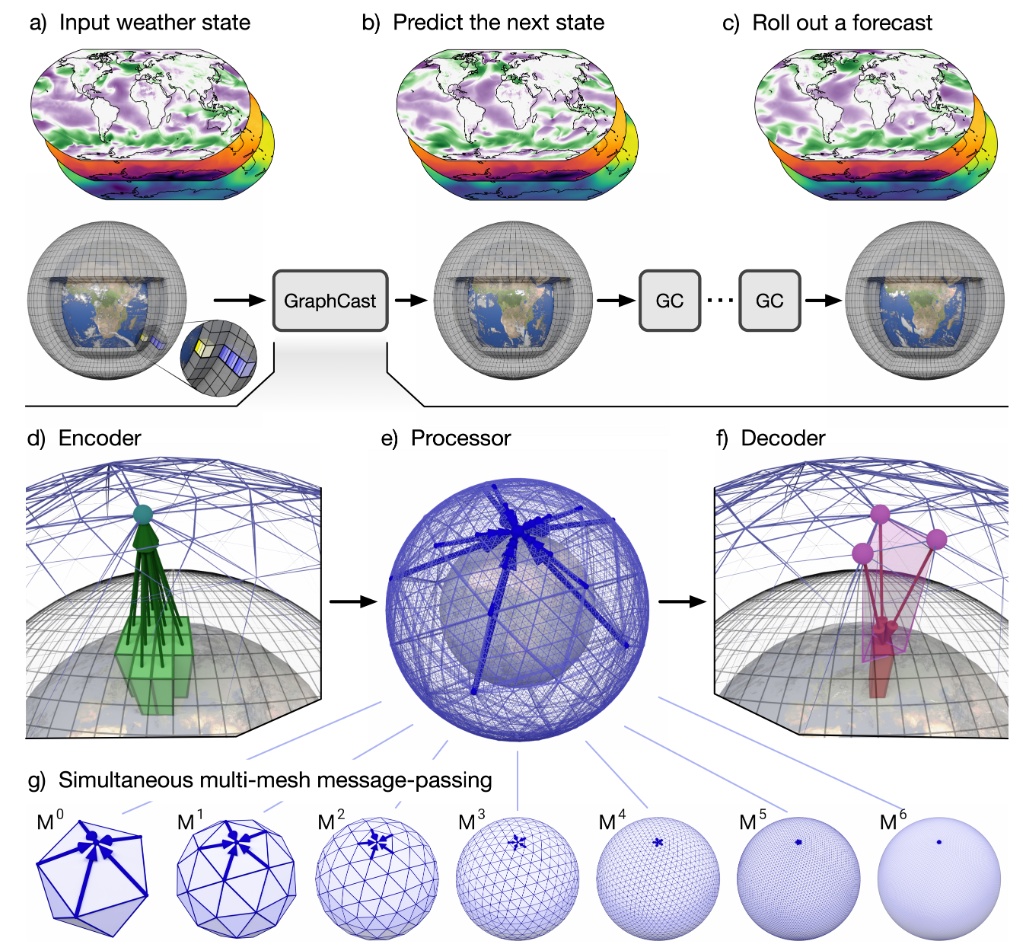

asset/model_structure.png

0 → 100644

1.02 MB

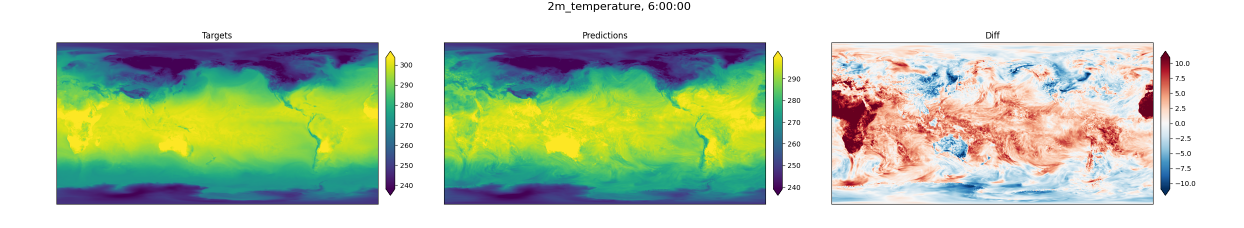

asset/result.png

0 → 100644

313 KB

graphcast/autoregressive.py

0 → 100644

graphcast/casting.py

0 → 100644

graphcast/checkpoint.py

0 → 100644

graphcast/checkpoint_test.py

0 → 100644

graphcast/data_utils.py

0 → 100644

graphcast/data_utils_test.py

0 → 100644

graphcast/graphcast.py

0 → 100644
