Support GLM-4-0414

Showing

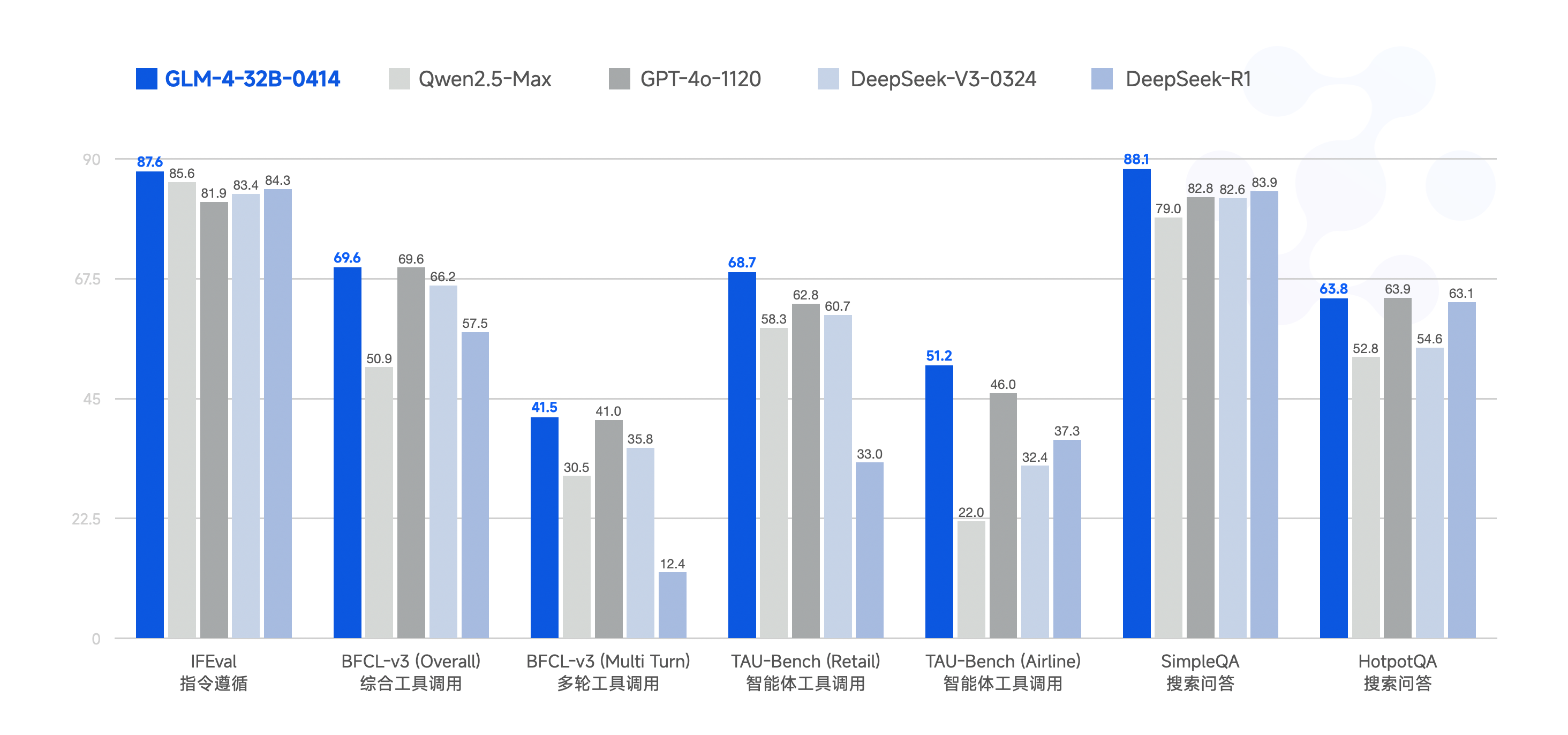

resources/Bench-32B.png

0 → 100644

883 KB

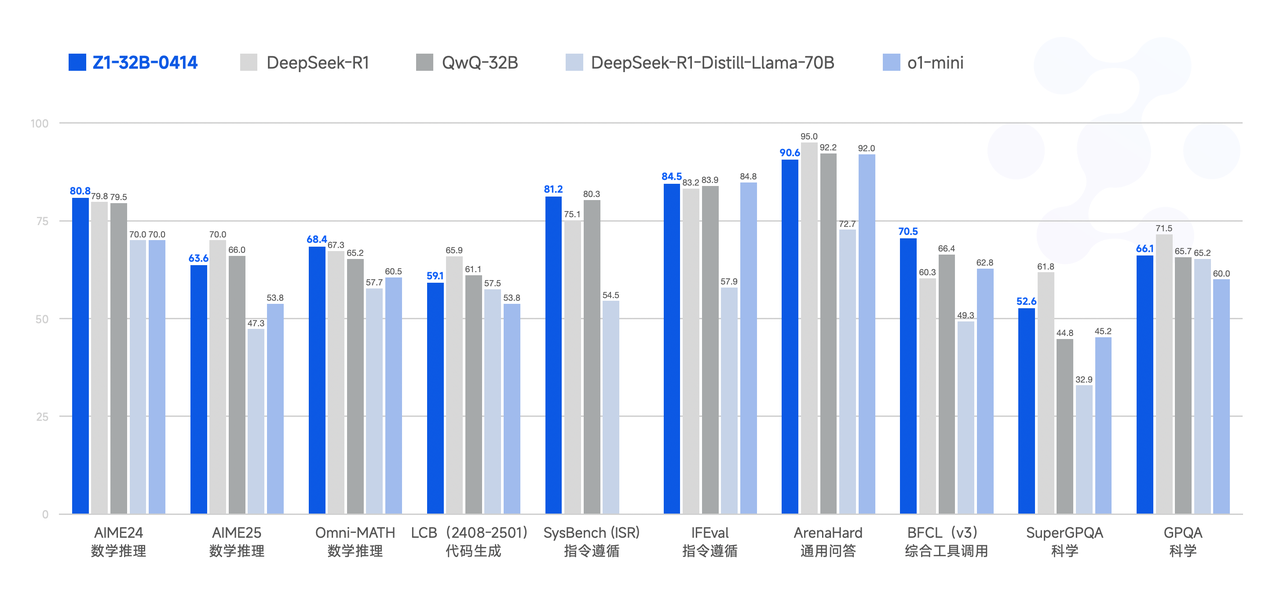

resources/Bench-Z1-32B.png

0 → 100644

200 KB

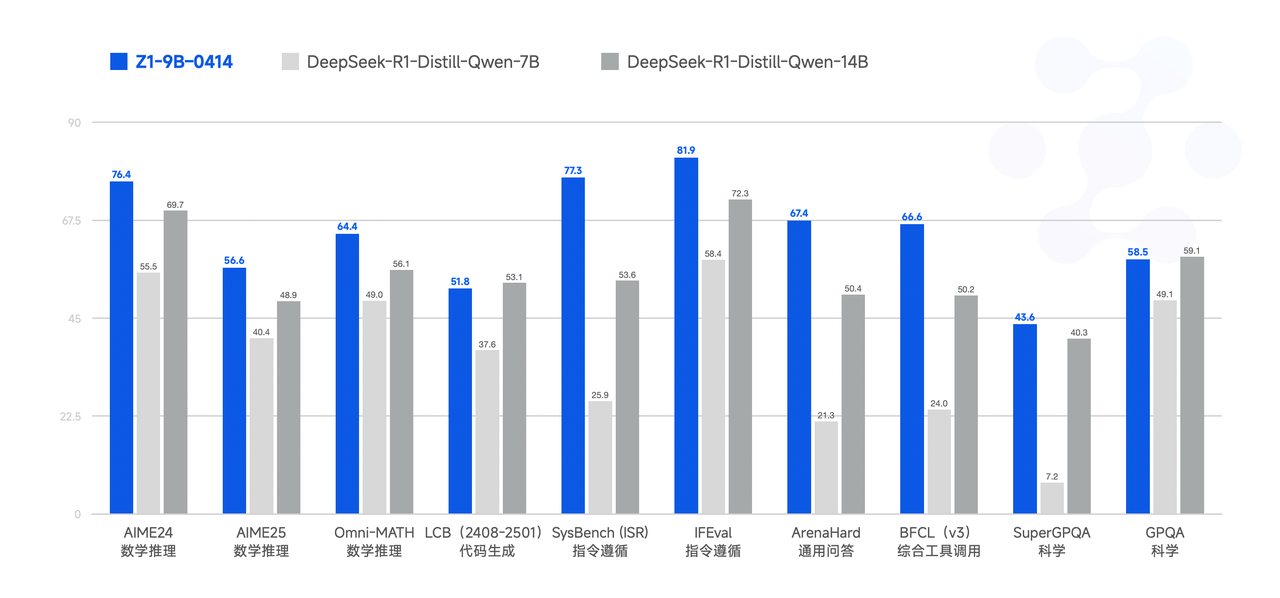

resources/Bench-Z1-9B.png

0 → 100644

168 KB

| W: | H:

| W: | H:

883 KB

200 KB

168 KB

151 KB | W: | H:

37.3 KB | W: | H: