Initial commit

FLAVR_README.md (0 → 100644)

LICENSE (0 → 100644)

Middleburry_Test.py (0 → 100644)

README.md (0 → 100644)

config.py (0 → 100644)

dataset/Davis_test.py (0 → 100644)

dataset/GoPro.py (0 → 100644)

dataset/GoPro_test.txt (0 → 100644)

dataset/GoPro_train.txt (0 → 100644)

dataset/Middleburry.py (0 → 100644)

dataset/transforms.py (0 → 100644)

dataset/ucf101_test.py (0 → 100644)

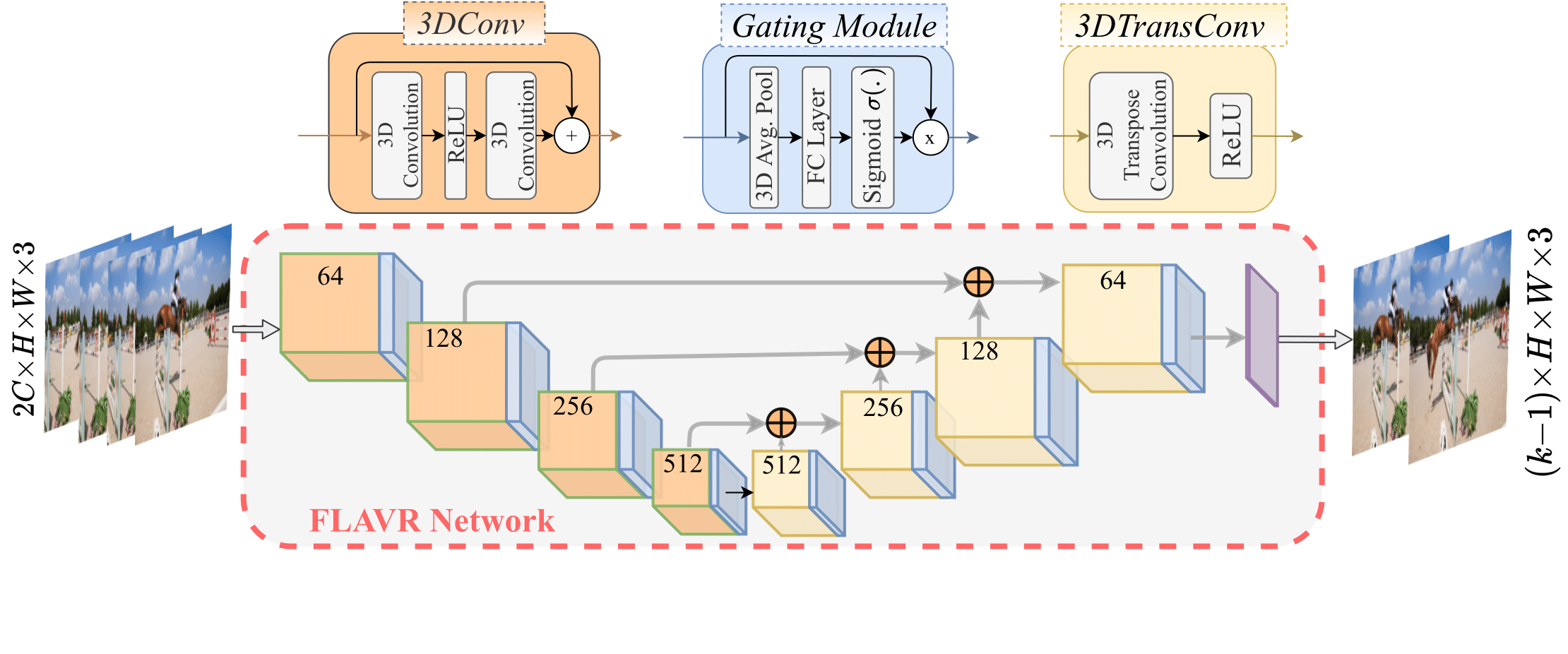

figures/arch_dia.pdf (0 → 100644, file added)

figures/arch_dia.png (0 → 100644, 266 KB)

figures/baloon.gif (0 → 100644, 2.11 MB)

figures/sprite.gif (0 → 100644, 7.88 MB)

figures/teaser.pdf (0 → 100644, file added)

interpolate.py (0 → 100644)

loss.py (0 → 100644)