evtexture


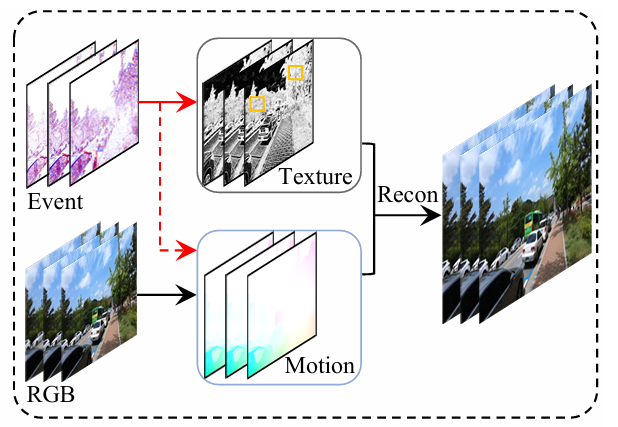

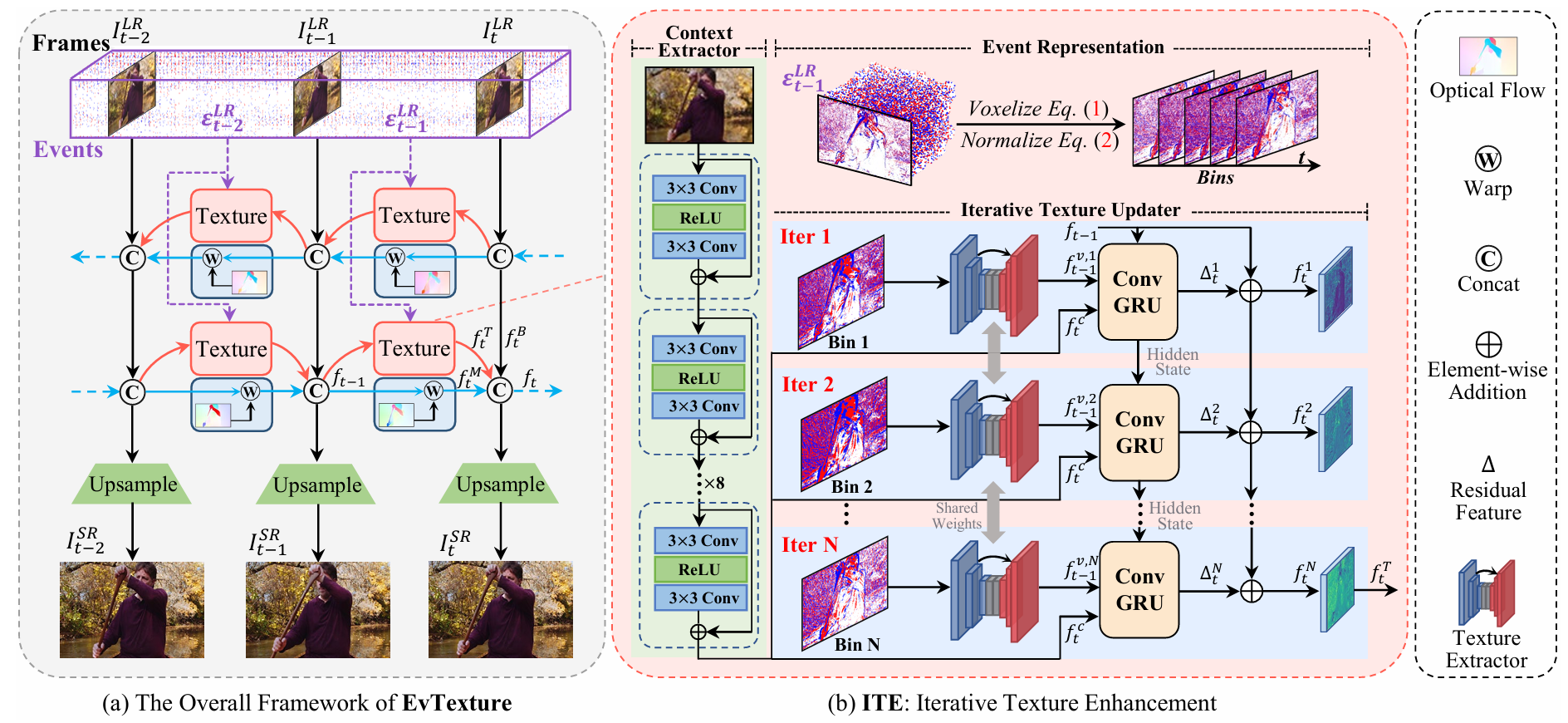

readme_imgs/alg.png

New file, mode 100644 (201 KB)

readme_imgs/models.png

New file, mode 100644 (857 KB)

readme_imgs/ori_10y.avi

New file, mode 100644 (binary file added)

readme_imgs/vid4_10y.avi

New file, mode 100644 (binary file added)

requirements.txt

New file, mode 100644

| Requirement |
| --- |
| addict |
| argparse |
| einops |
| future |
| h5py |
| lpips |
| lmdb |
| matplotlib |
| numpy>=1.17 |
| opencv-python |
| pandas |
| Pillow |
| pyyaml |
| requests |
| scikit-image |
| scipy |
| setuptools |
| # tb-nightly |
| tensorboard |
| tensorboardX |
| # torch>=1.10.2 |
| # torchvision>=0.11.3 |
| tqdm |
| wandb |
| yapf |
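In this file `tb-nightly`, `torch>=1.10.2`, and `torchvision>=0.11.3` are commented out, so requirements.txt does not itself pin PyTorch versions (PyTorch may still arrive transitively through other packages). The minimal sketch below uses only the standard library to split a requirements file into active and commented-out entries, making the skipped ones easy to spot. The function name `split_requirements` is hypothetical, and the sketch assumes one specifier per line with no inline `#` comments.

```python
from pathlib import Path
from typing import List, Tuple


def split_requirements(text: str) -> Tuple[List[str], List[str]]:
    """Hypothetical helper: return (active, commented_out) specifiers.

    Assumes one specifier per line and no inline comments.
    """
    active: List[str] = []
    commented: List[str] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # skip blank lines
        if line.startswith("#"):
            # A commented-out entry such as "# torch>=1.10.2"
            entry = line.lstrip("#").strip()
            if entry:
                commented.append(entry)
        else:
            active.append(line)
    return active, commented


if __name__ == "__main__":
    # Assumes the script is run from the repository root.
    path = Path("requirements.txt")
    if path.exists():
        active, skipped = split_requirements(path.read_text())
        print(f"{len(active)} active requirements")
        print("Commented out:", ", ".join(skipped))
```

Run against this requirements.txt, the commented-out list would contain `tb-nightly`, `torch>=1.10.2`, and `torchvision>=0.11.3`, which are the entries to install or verify by hand.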

scripts/dist_test.sh

New file, mode 100755 (executable)

scripts/dist_train.sh

New file, mode 100755 (executable)

setup.cfg

New file, mode 100644

setup.py

New file, mode 100644