First commit

parents

Showing

Contributors.md

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

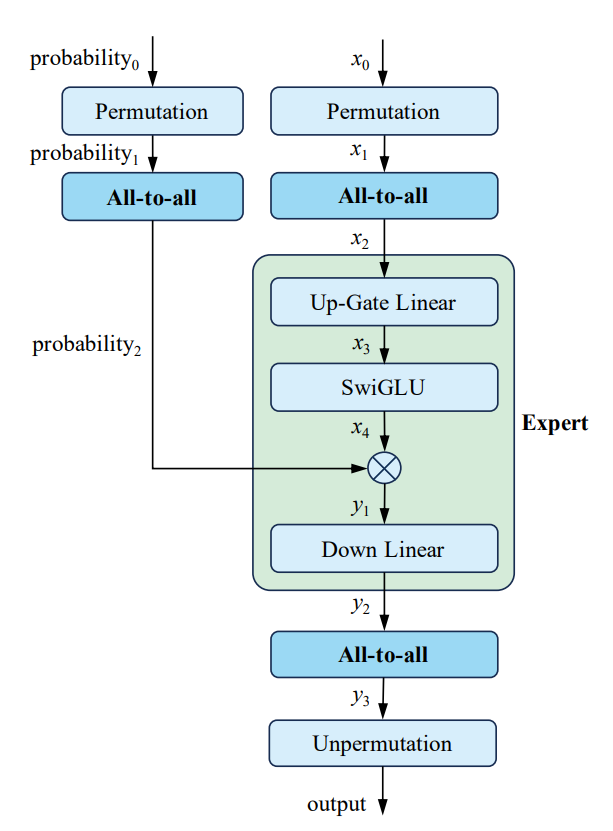

doc/methods.png

0 → 100644

156 KB

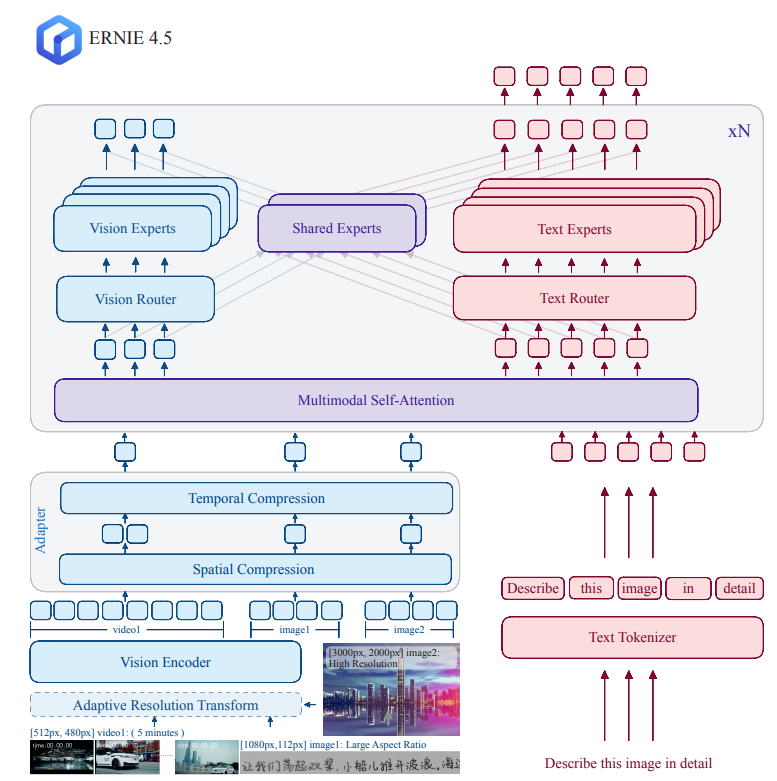

doc/model.png

0 → 100644

60.6 KB

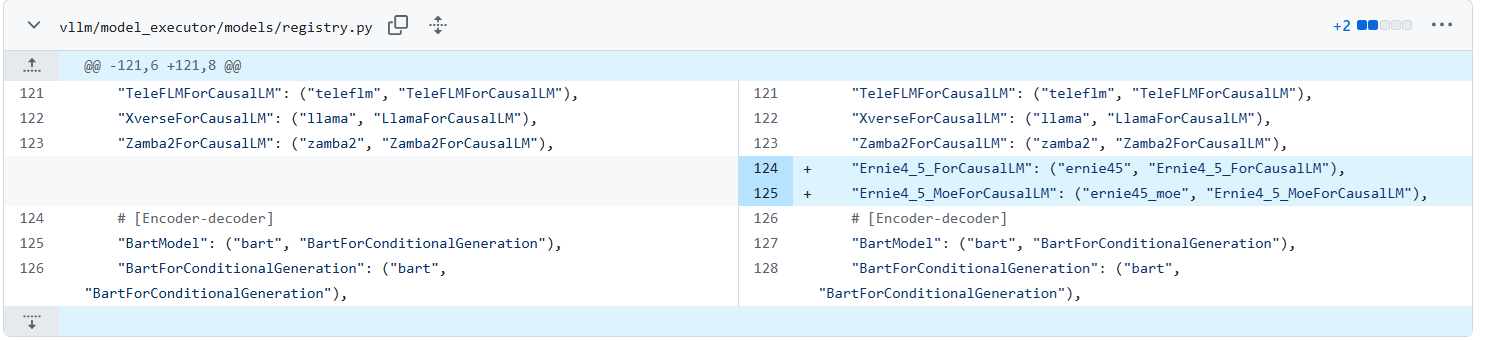

doc/registry.png

0 → 100644

46.9 KB

doc/results-dcu.png

0 → 100644

97.3 KB

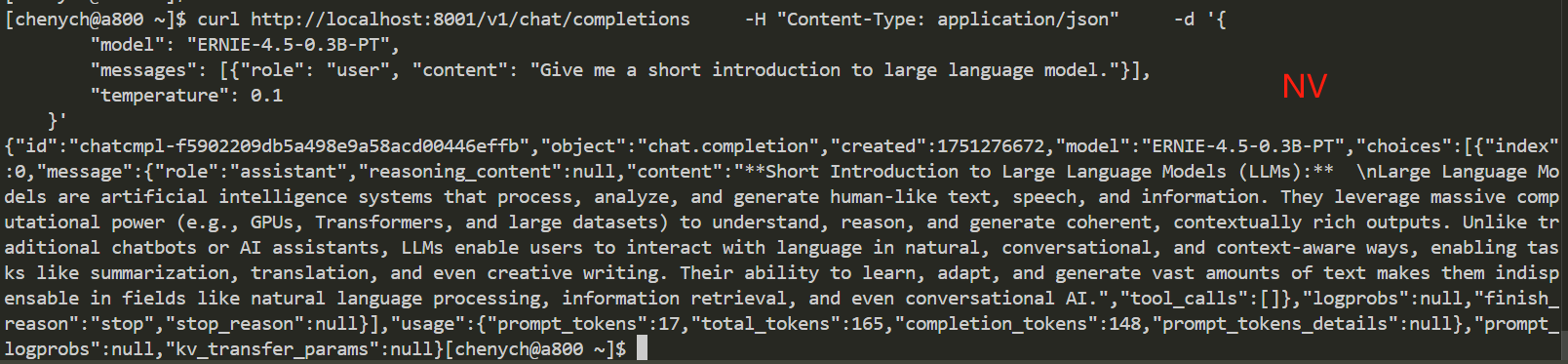

doc/results-nv.png

0 → 100644

82.1 KB

docker/Dockerfile

0 → 100644

icon.png

0 → 100644

53.8 KB

infer_transformers.py

0 → 100644

model.properties

0 → 100644

vllm/ernie45.py

0 → 100644

vllm/ernie45_moe.py

0 → 100644

vllm/registry.py

0 → 100644