优化精度,并更新readme

Showing

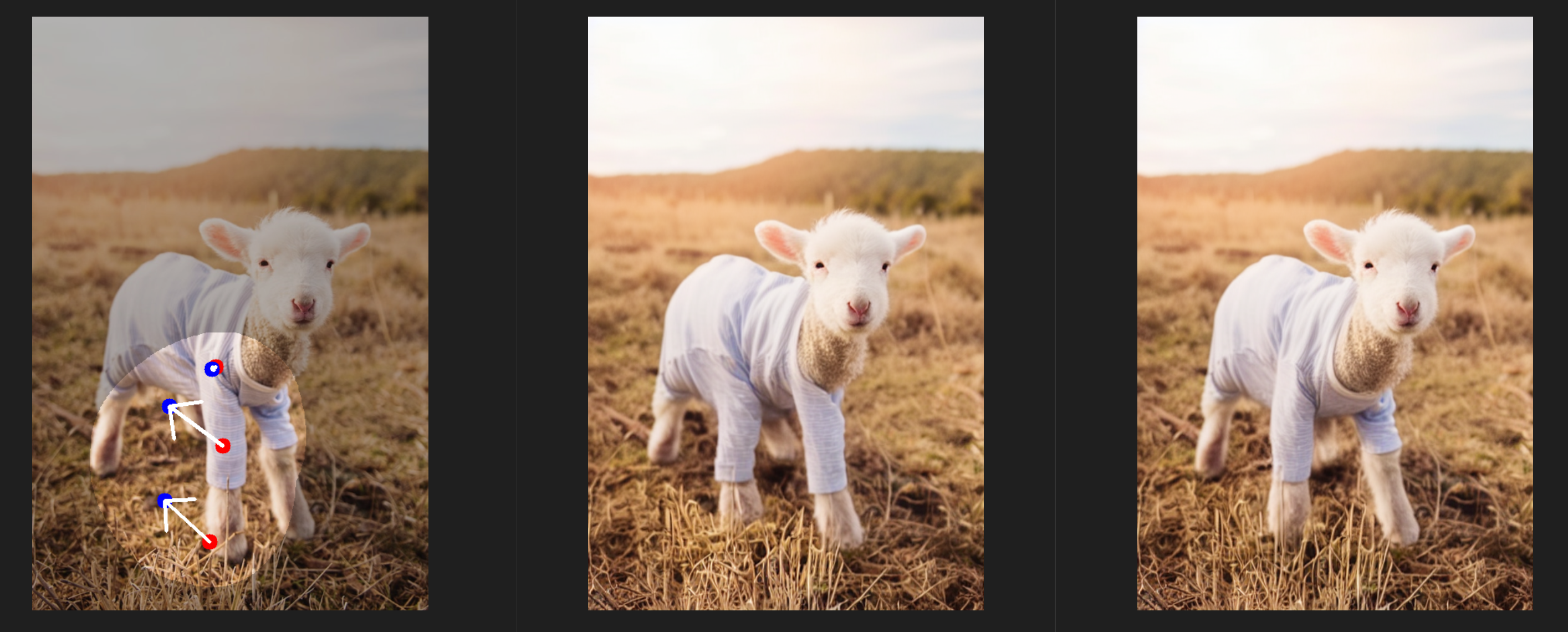

doc/对比1.jpg

0 → 100644

2.47 MB

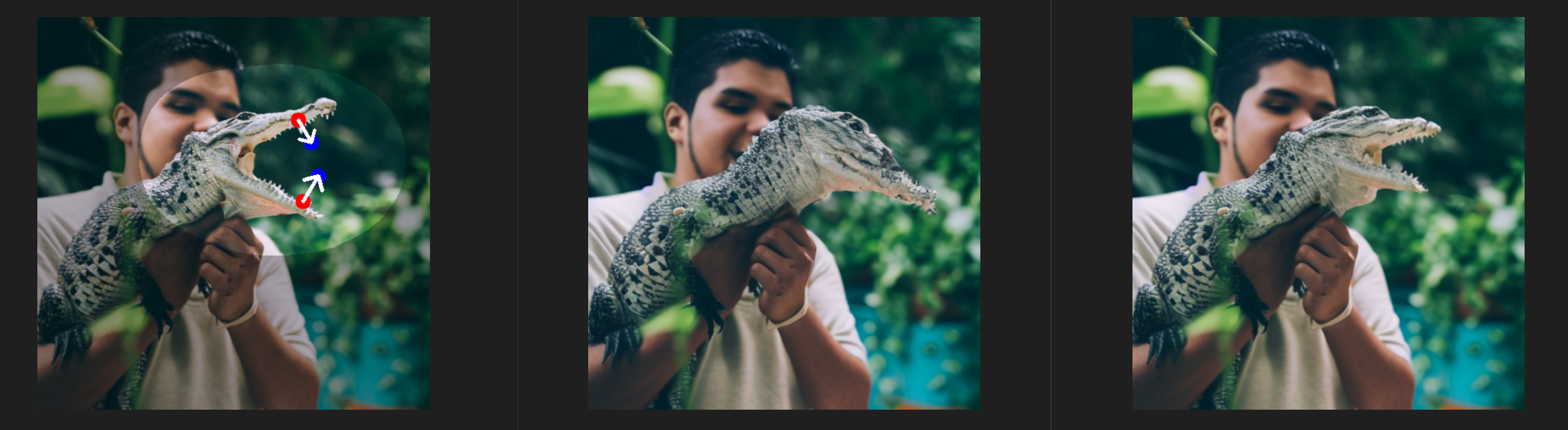

doc/对比2.jpg

0 → 100644

1.83 MB

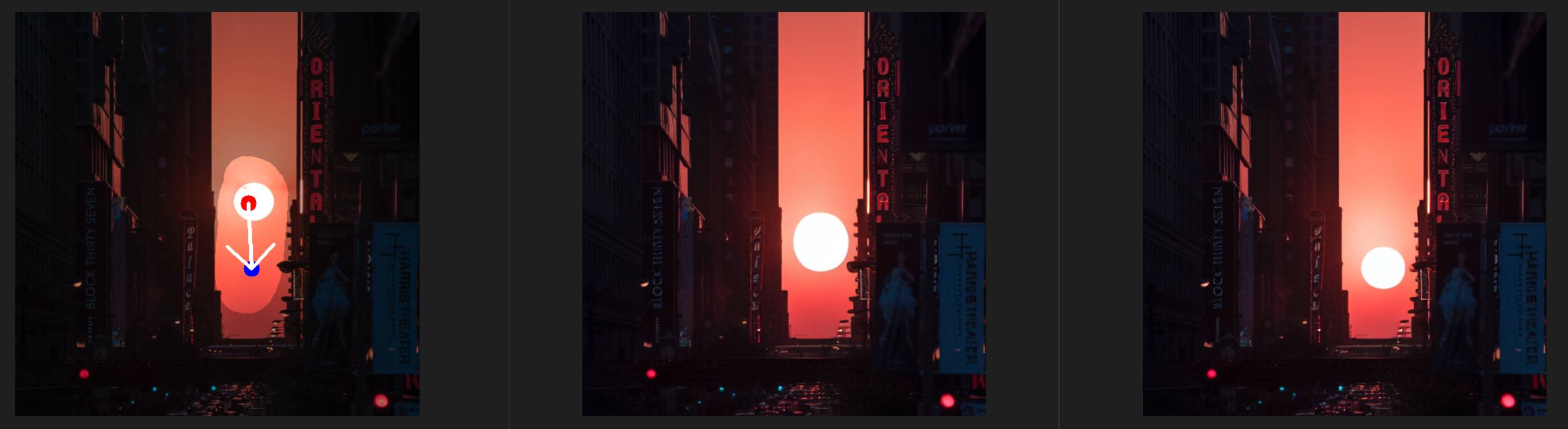

doc/对比3.jpg

0 → 100644

1.45 MB

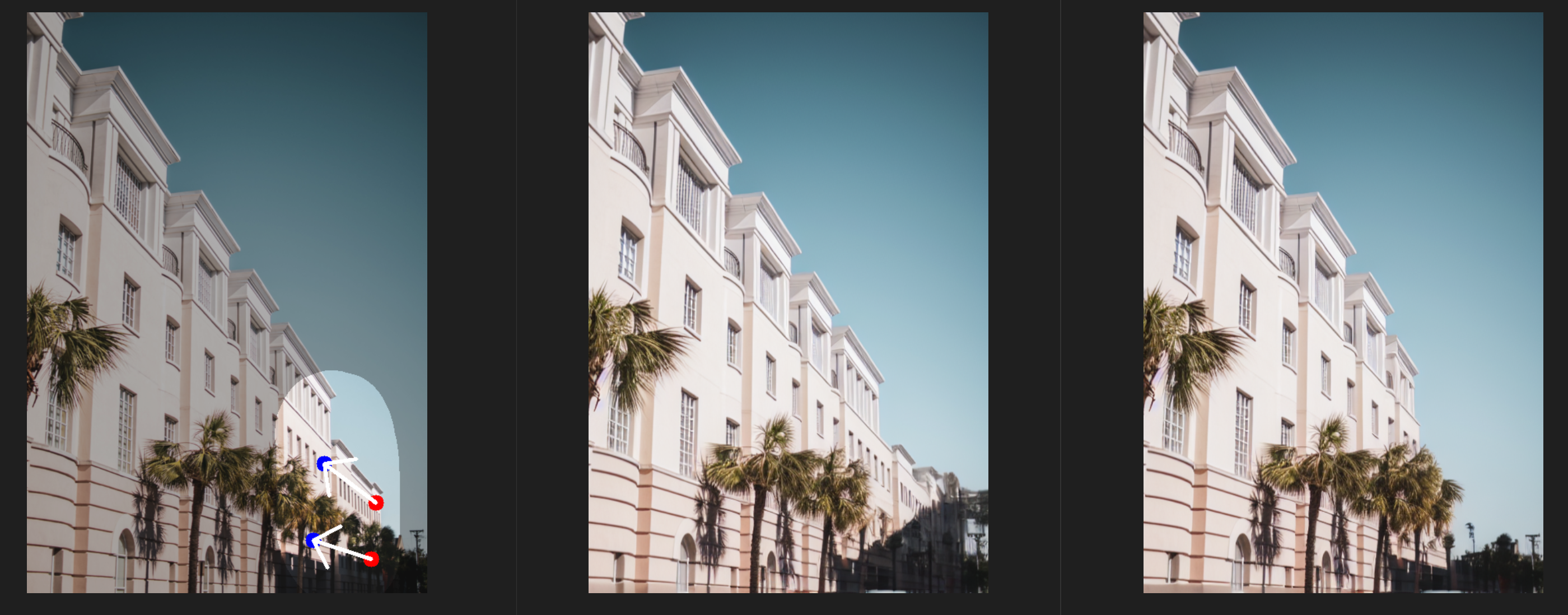

doc/对比4.jpg

0 → 100644

2.49 MB

2.47 MB

1.83 MB

1.45 MB

2.49 MB