"tests/vscode:/vscode.git/clone" did not exist on "d70beba762c3b151edb8578f1dfbdce01c0dfa73"

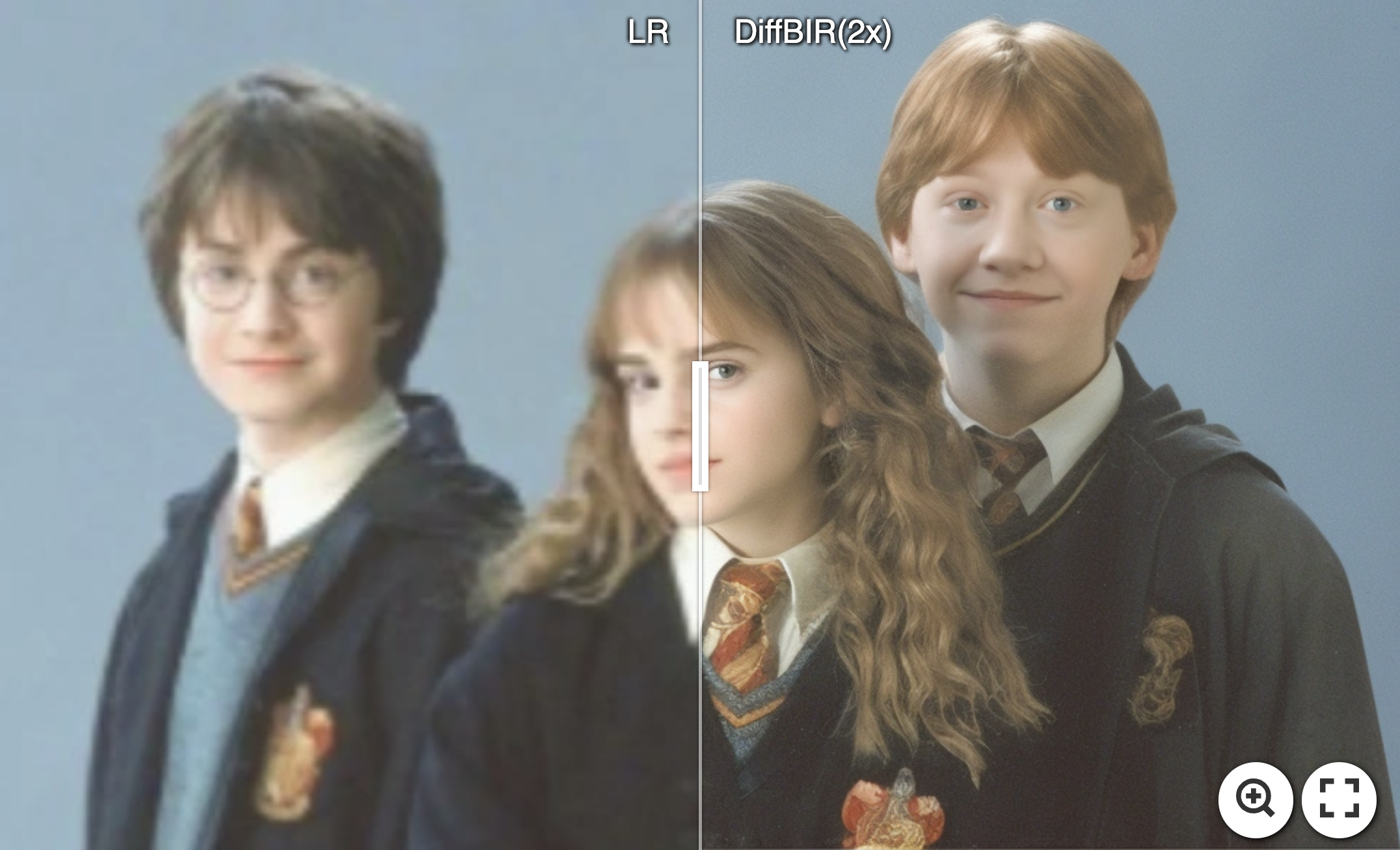

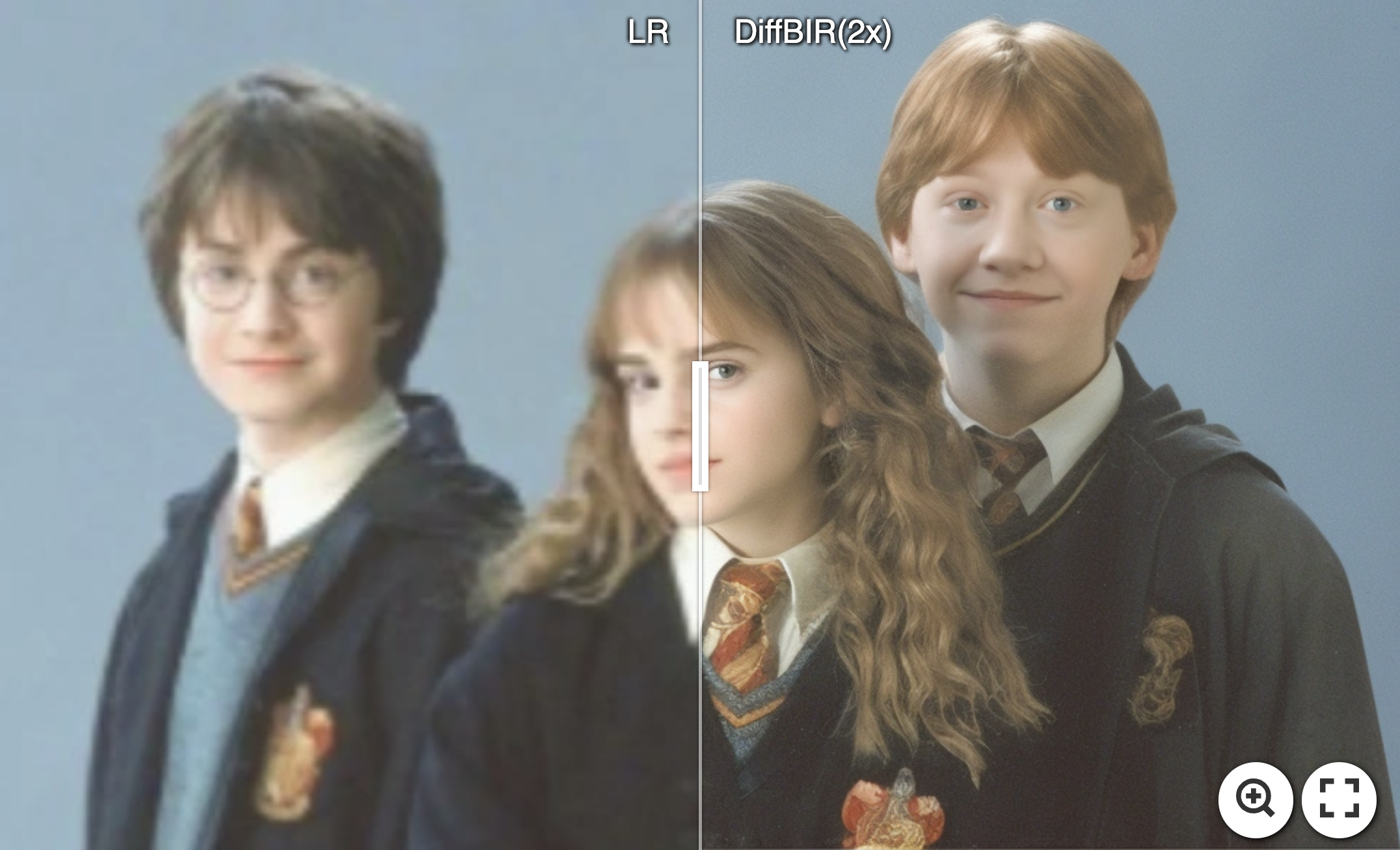

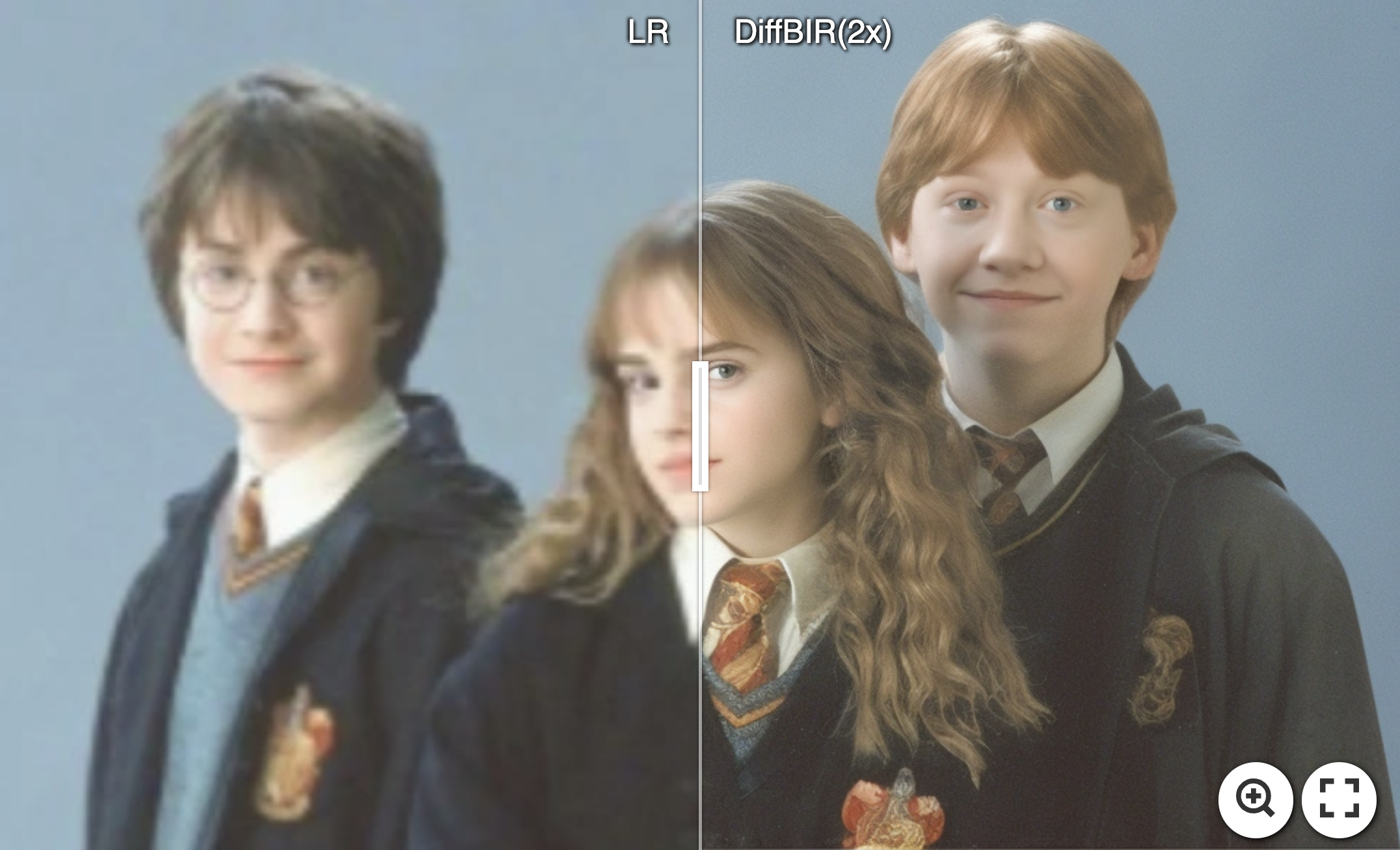

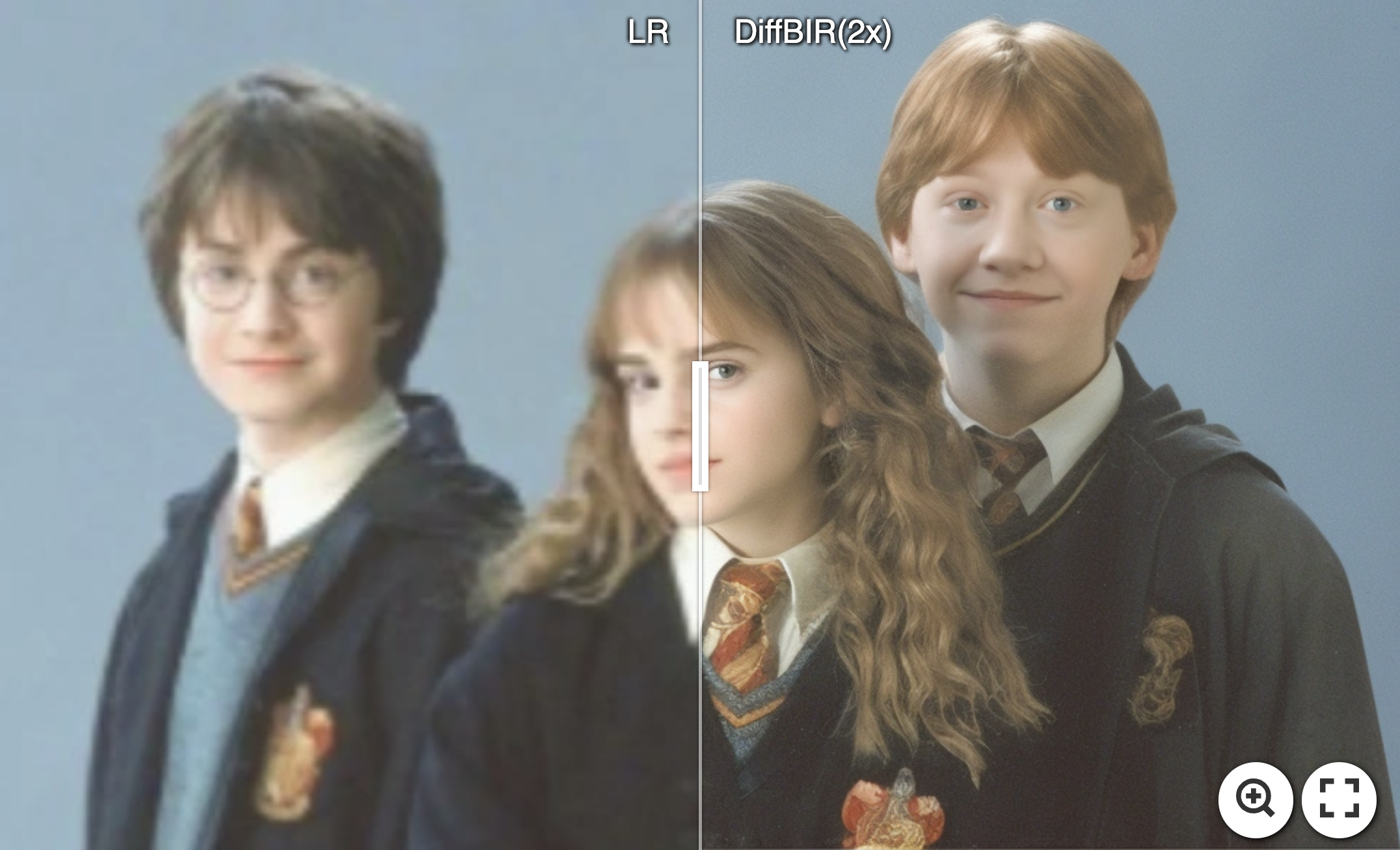

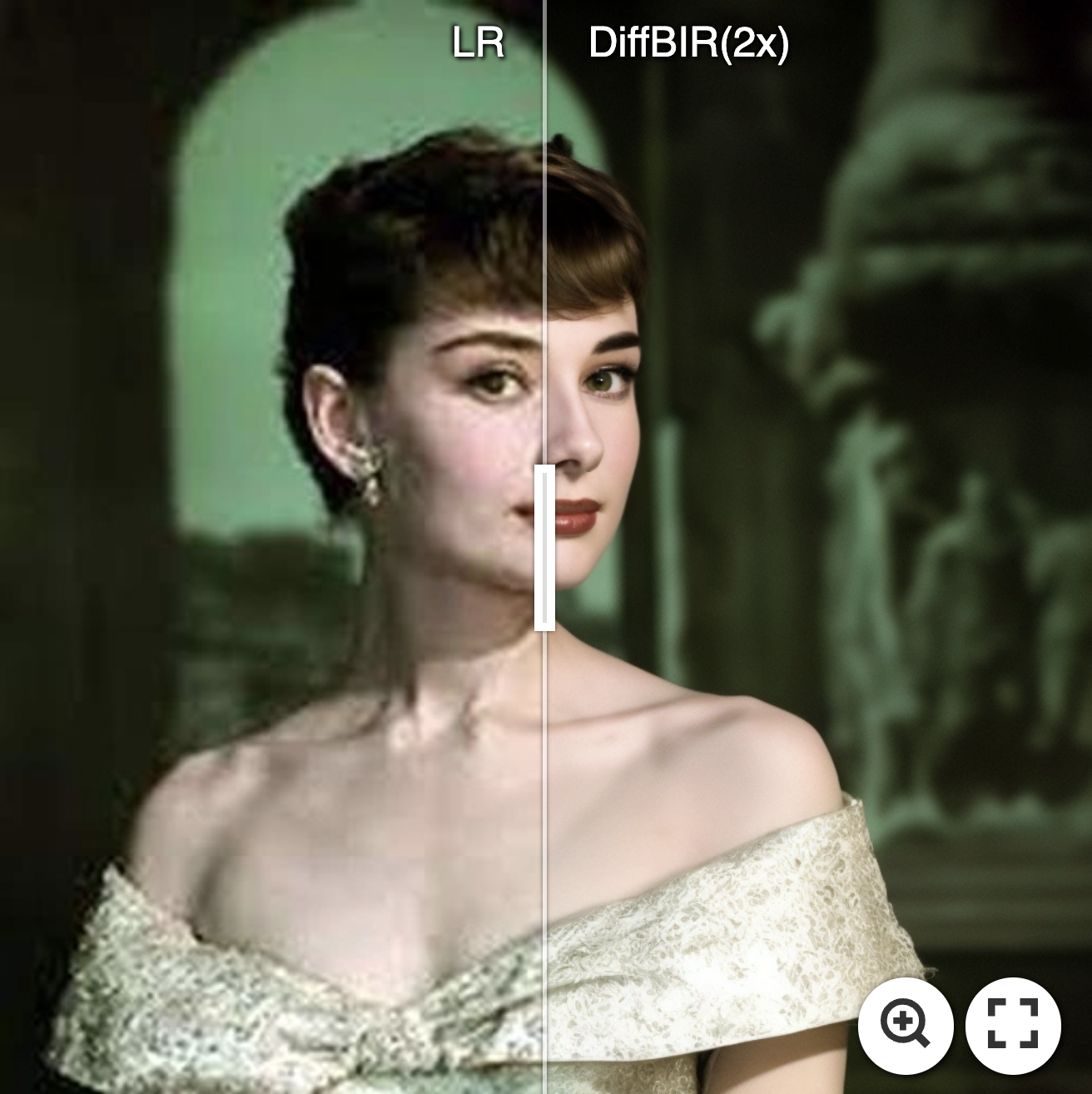

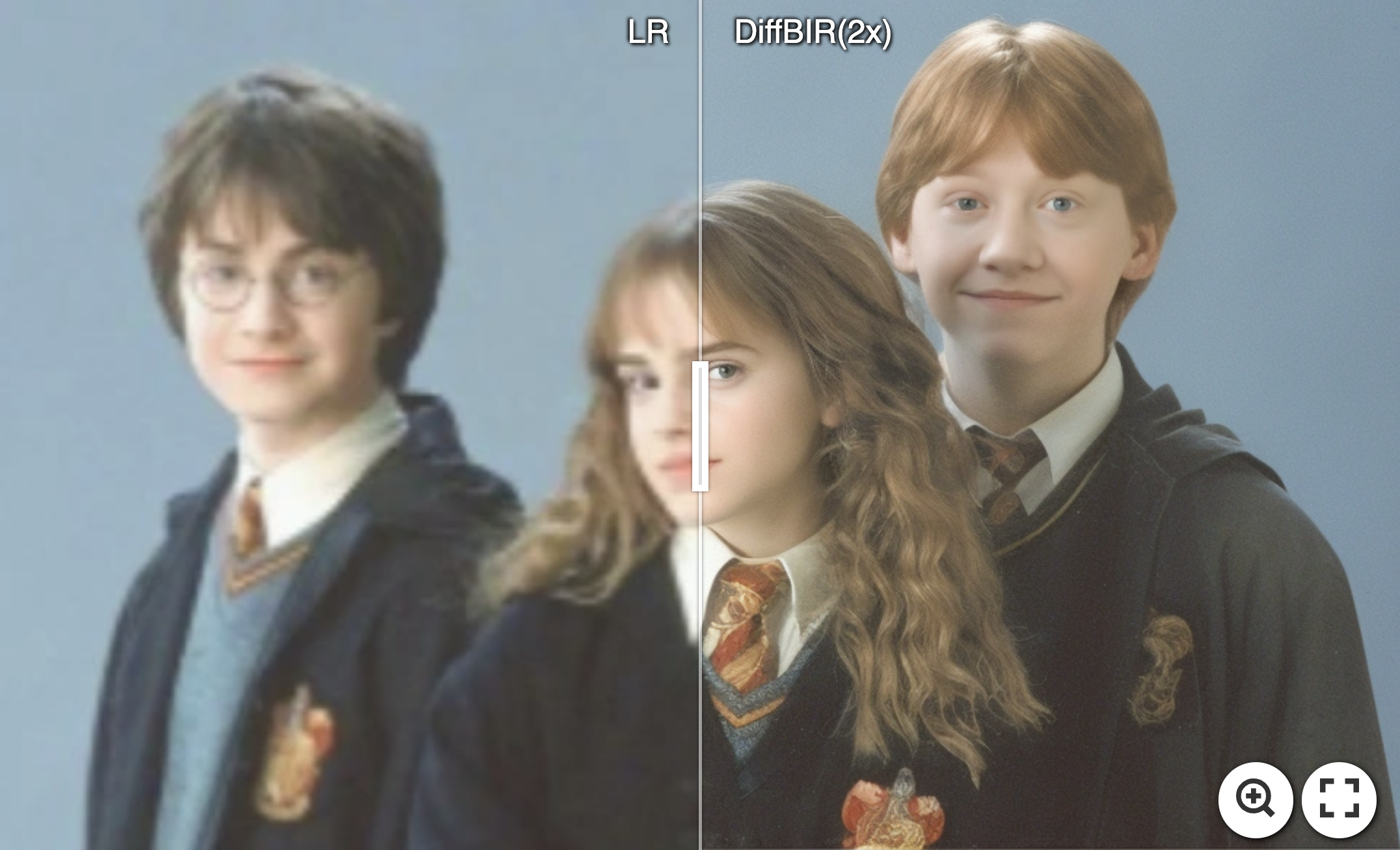

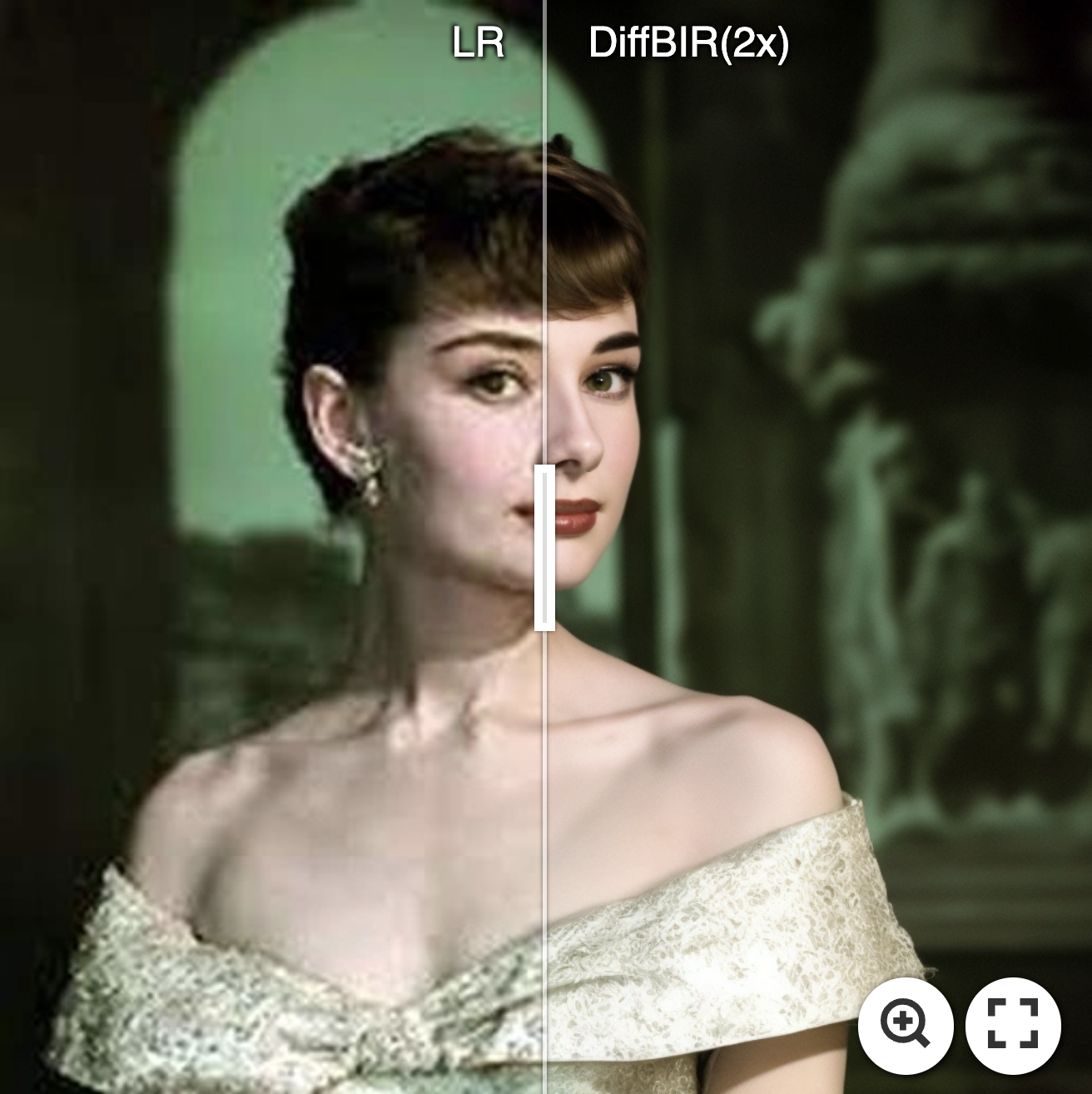

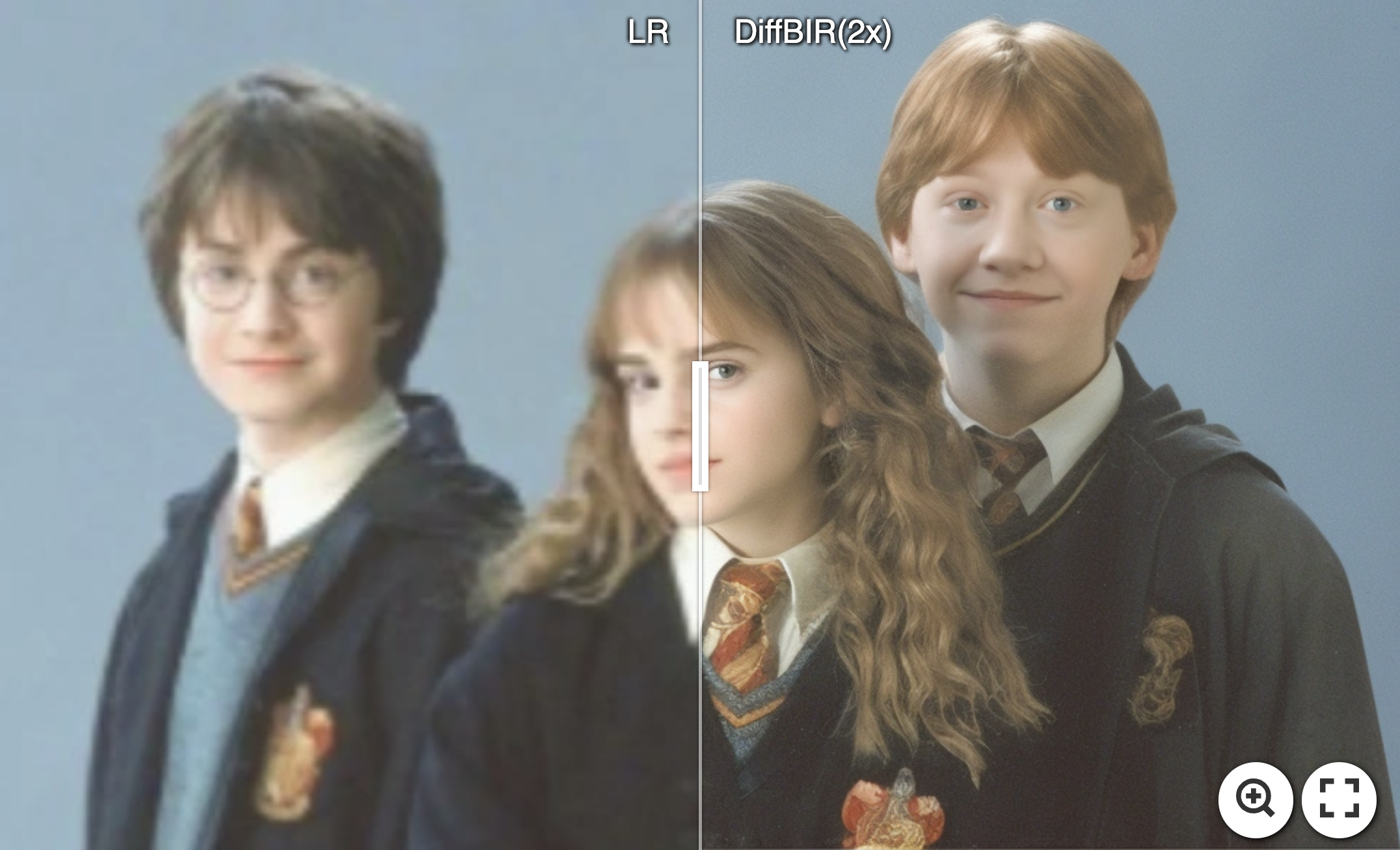

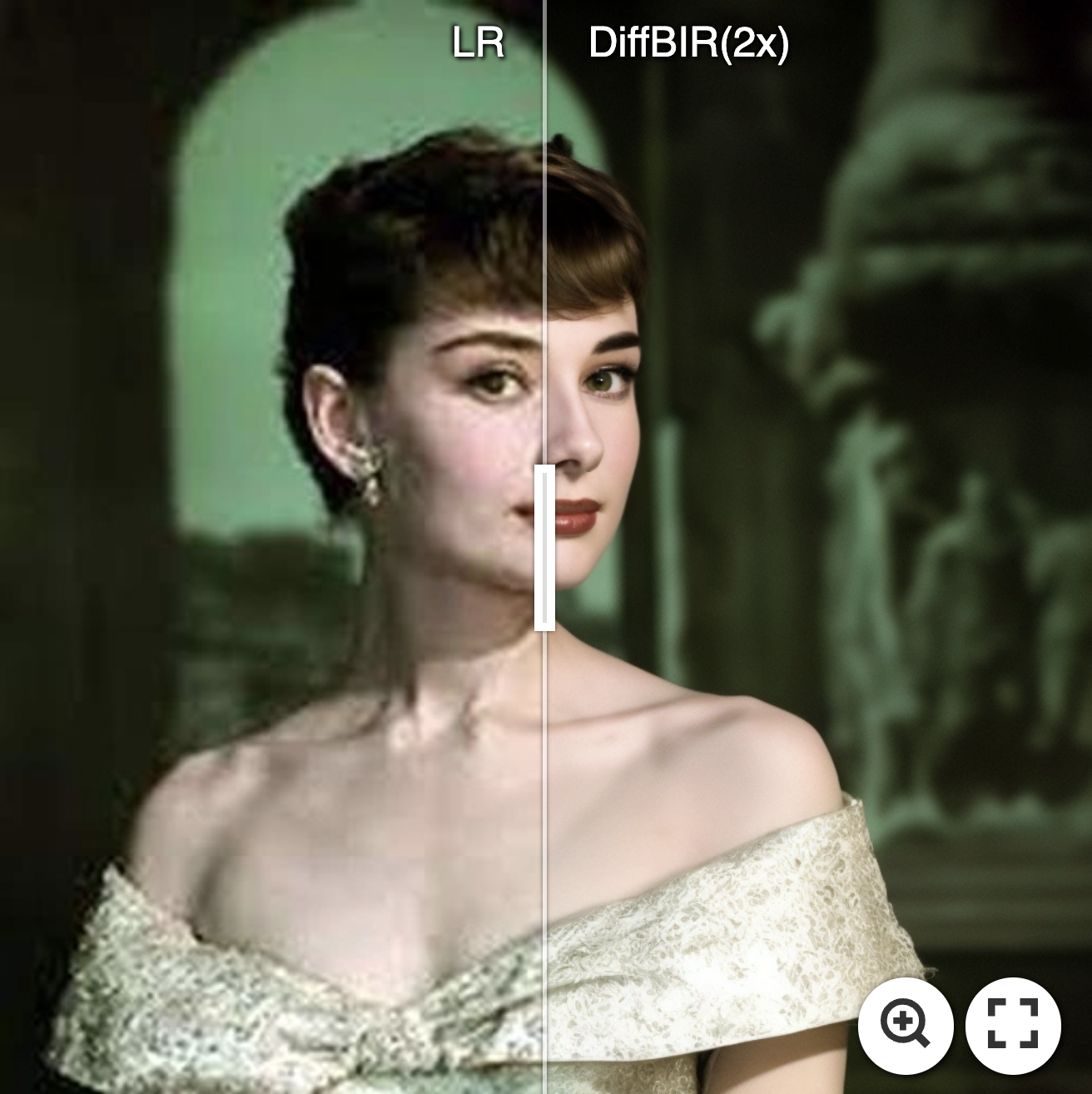

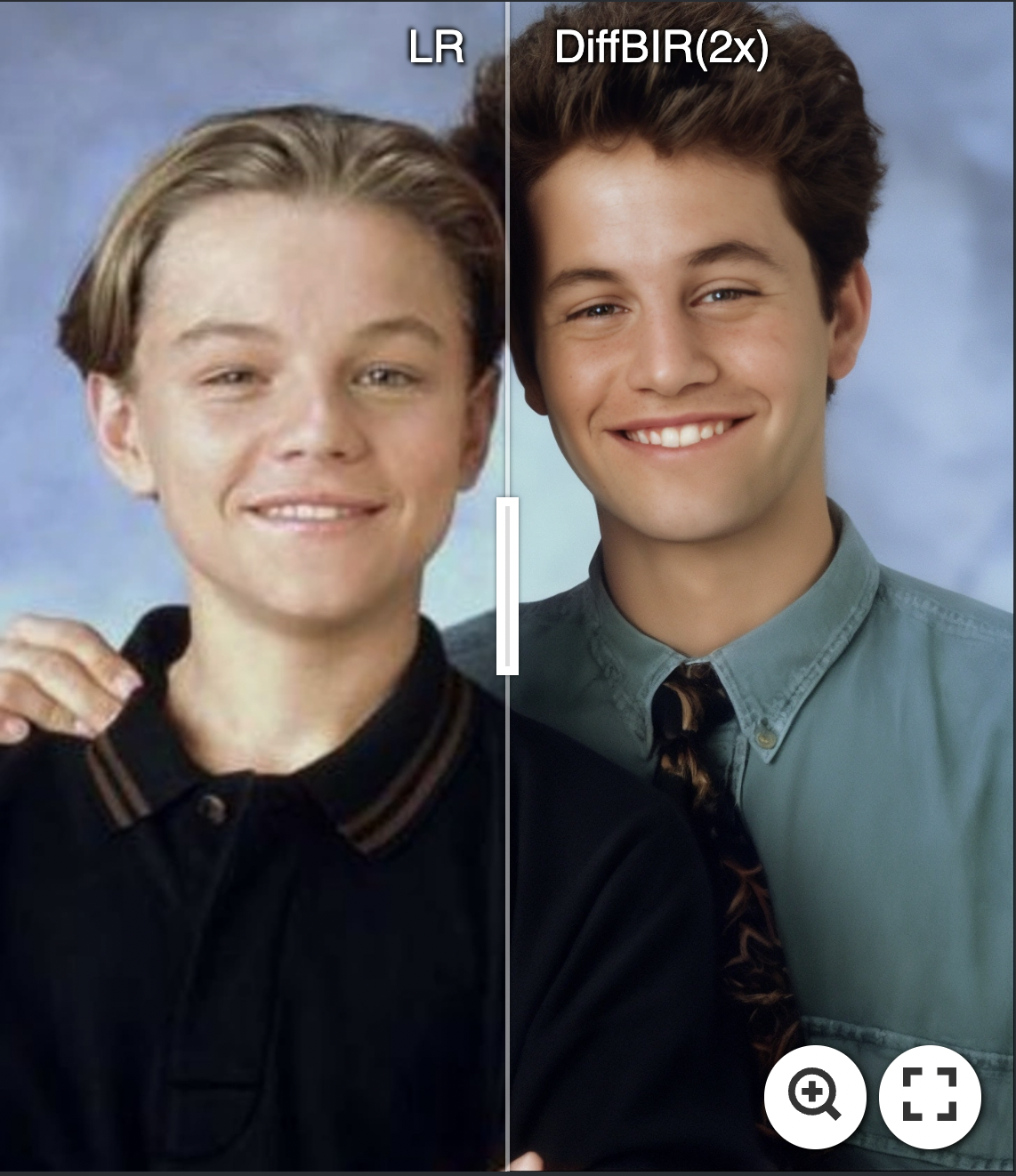

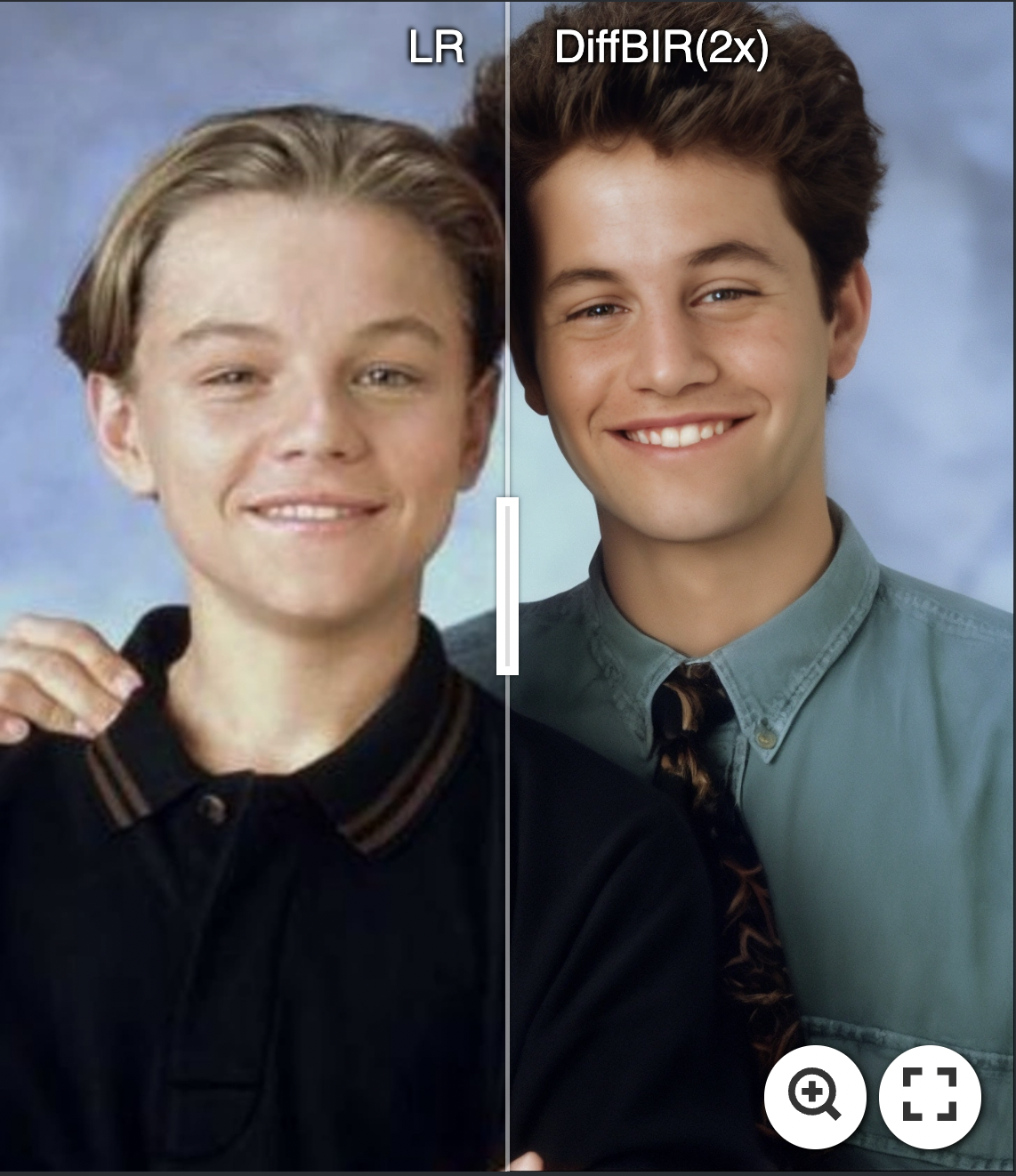

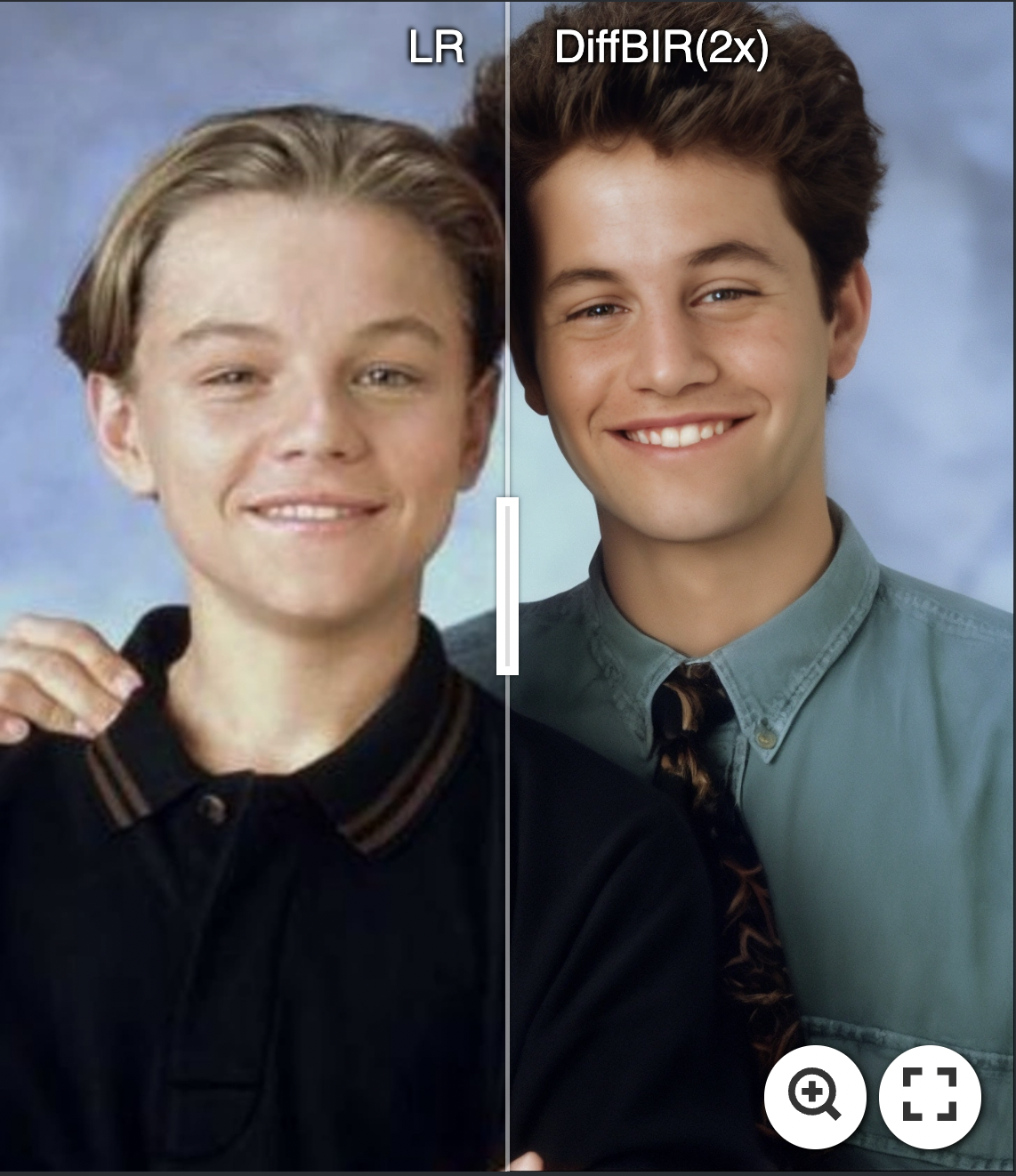

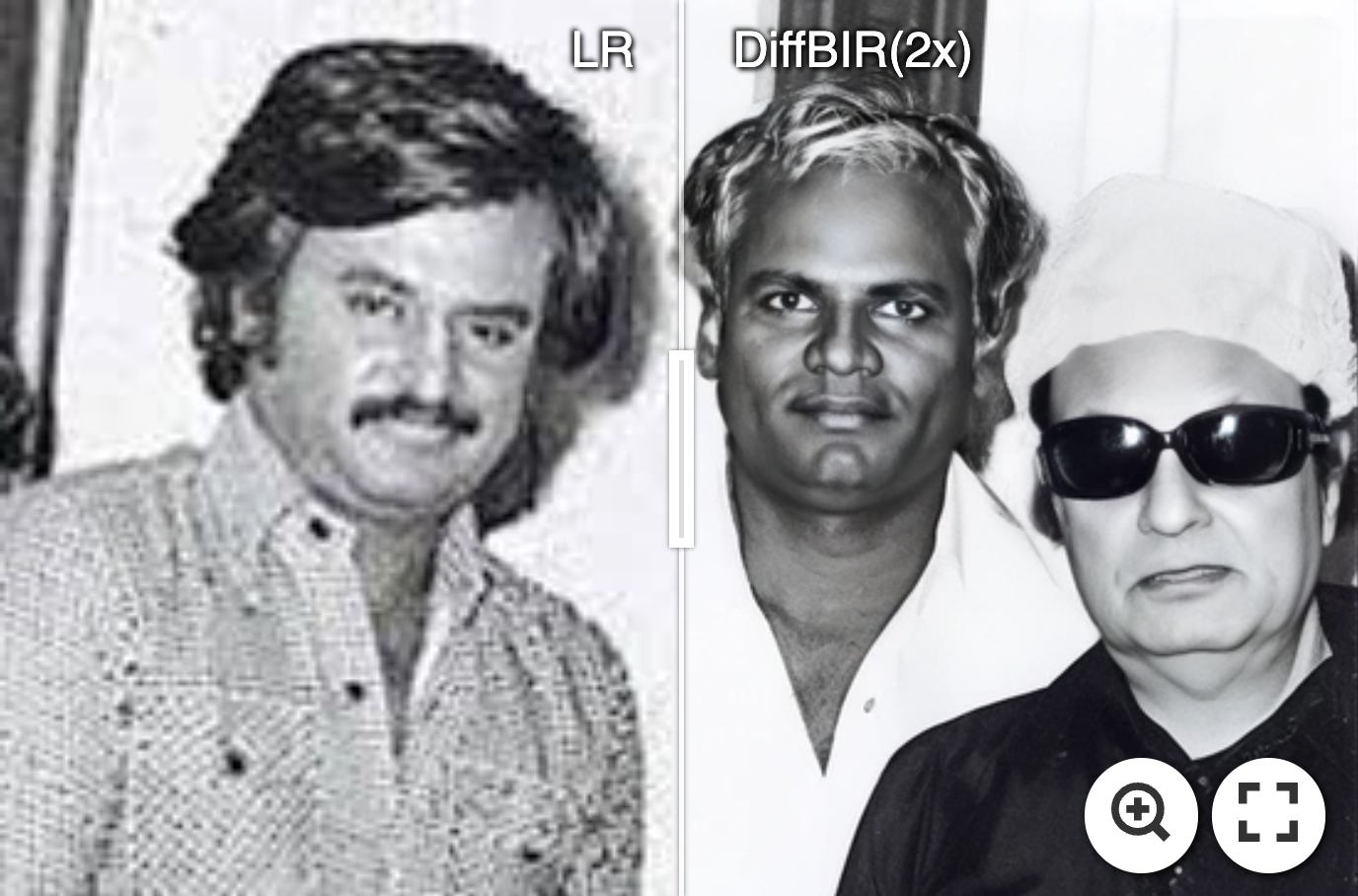

fix bugs in face enhancement
