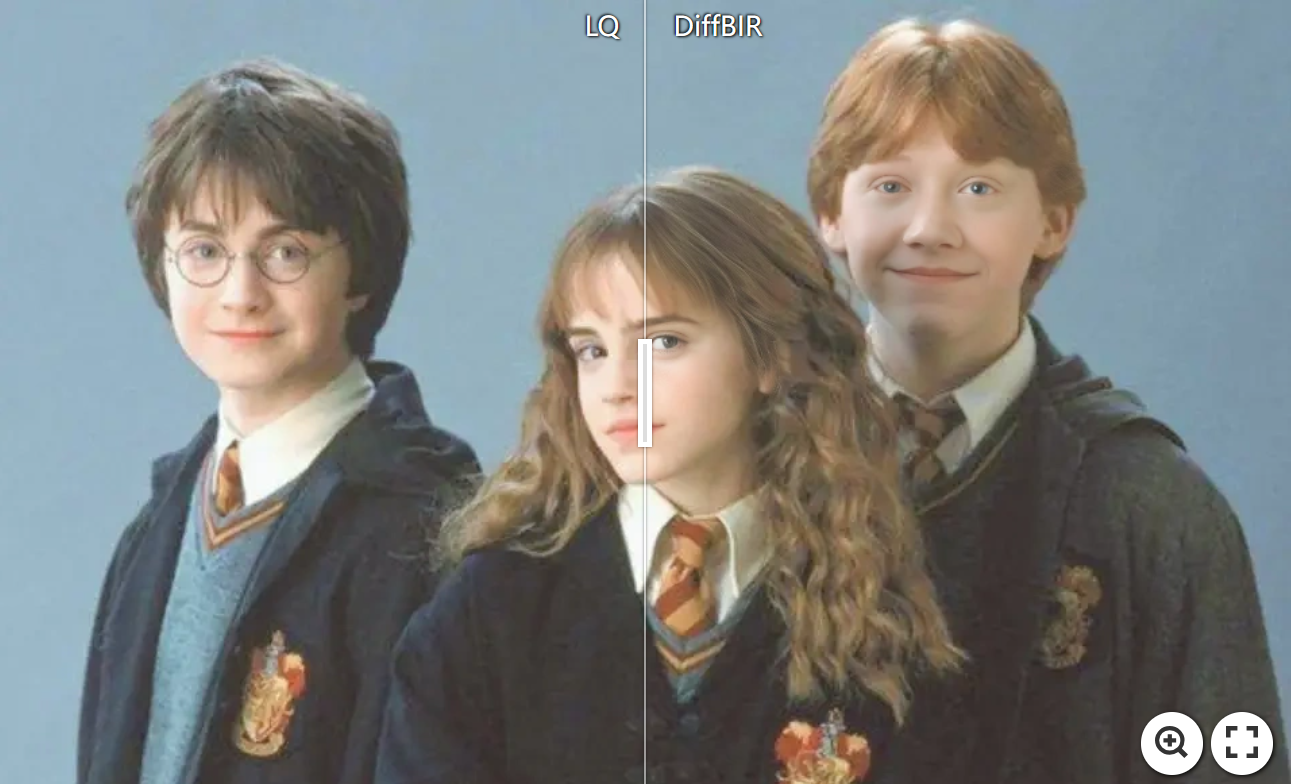

Add background upsampler support for face enhancement


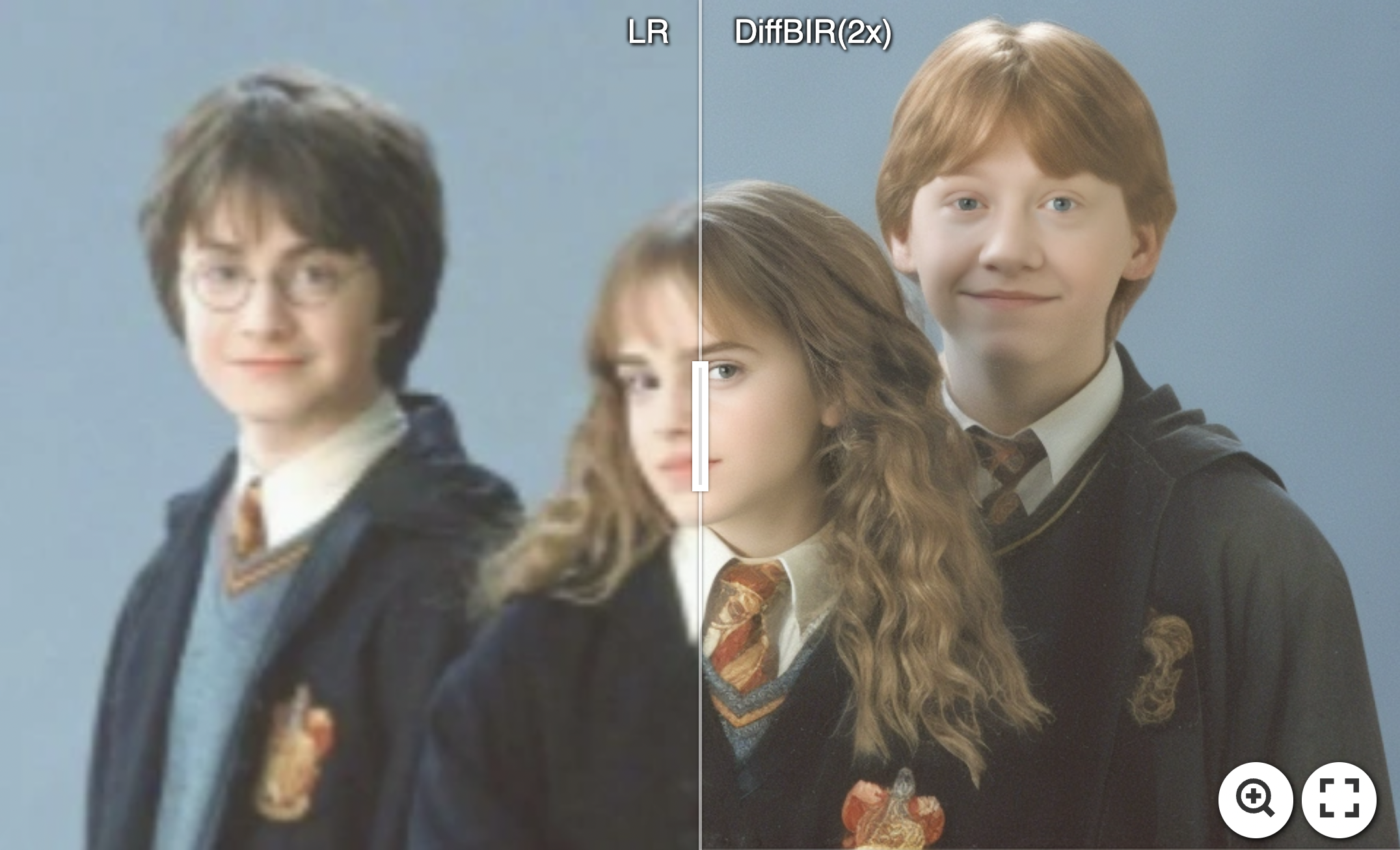

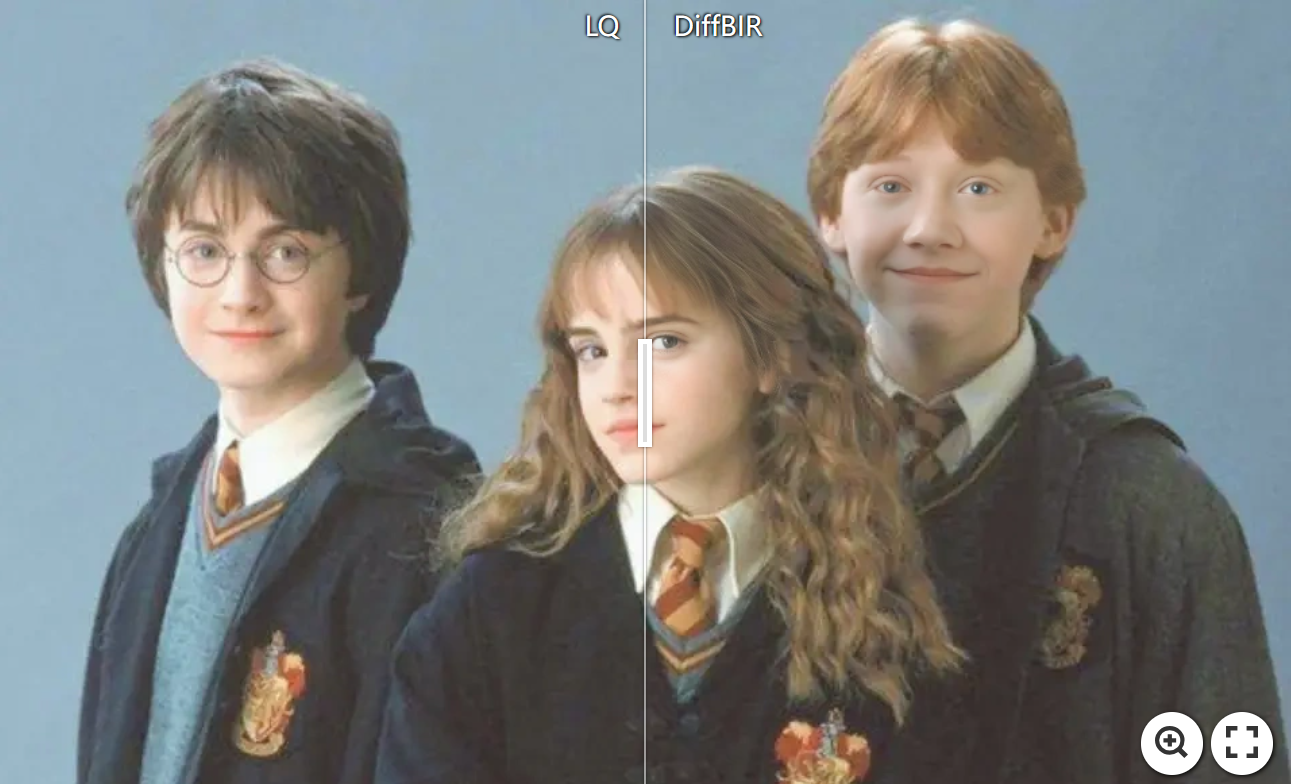



utils/realesrgan_utils.py (new file, mode 0 → 100644)

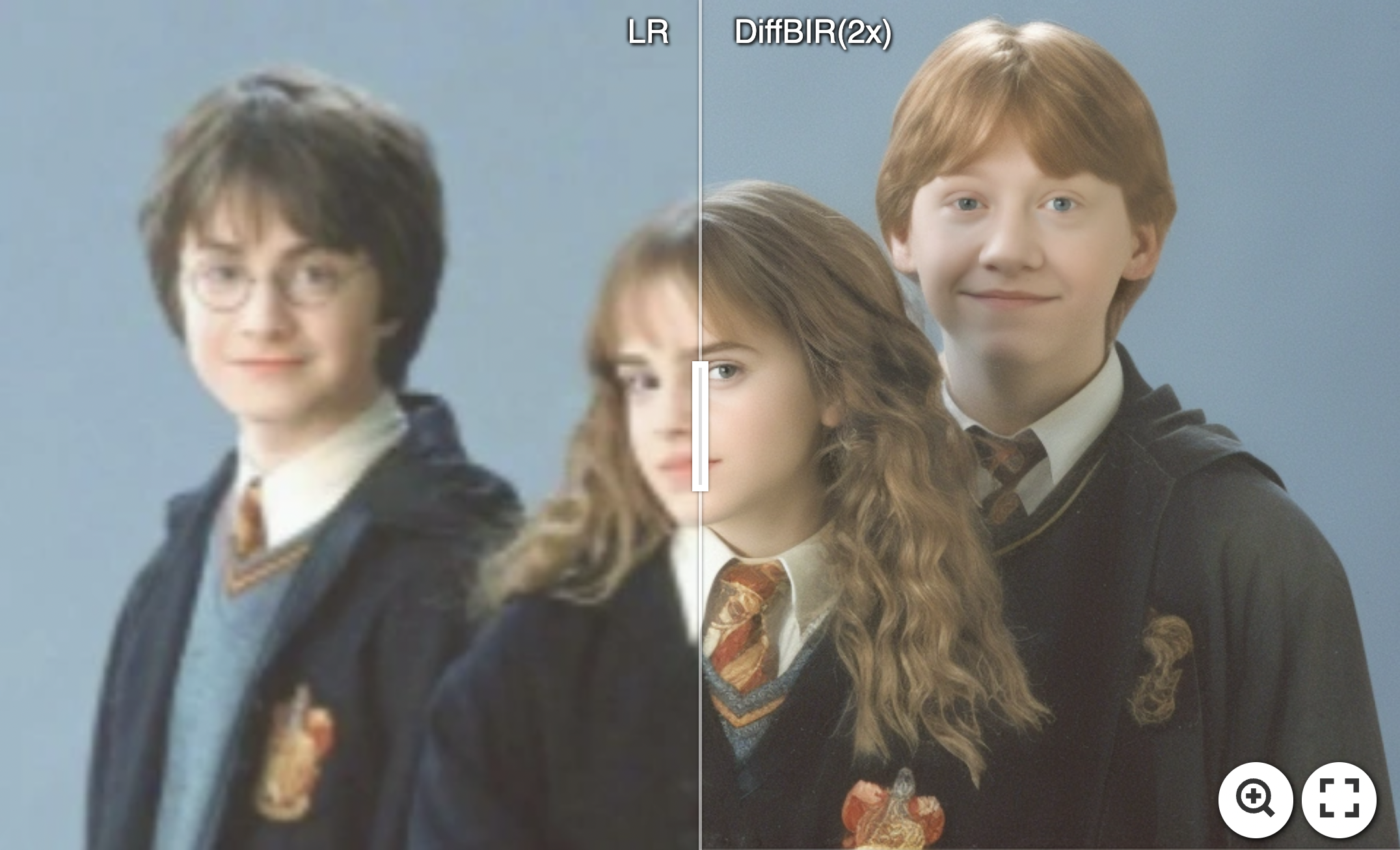

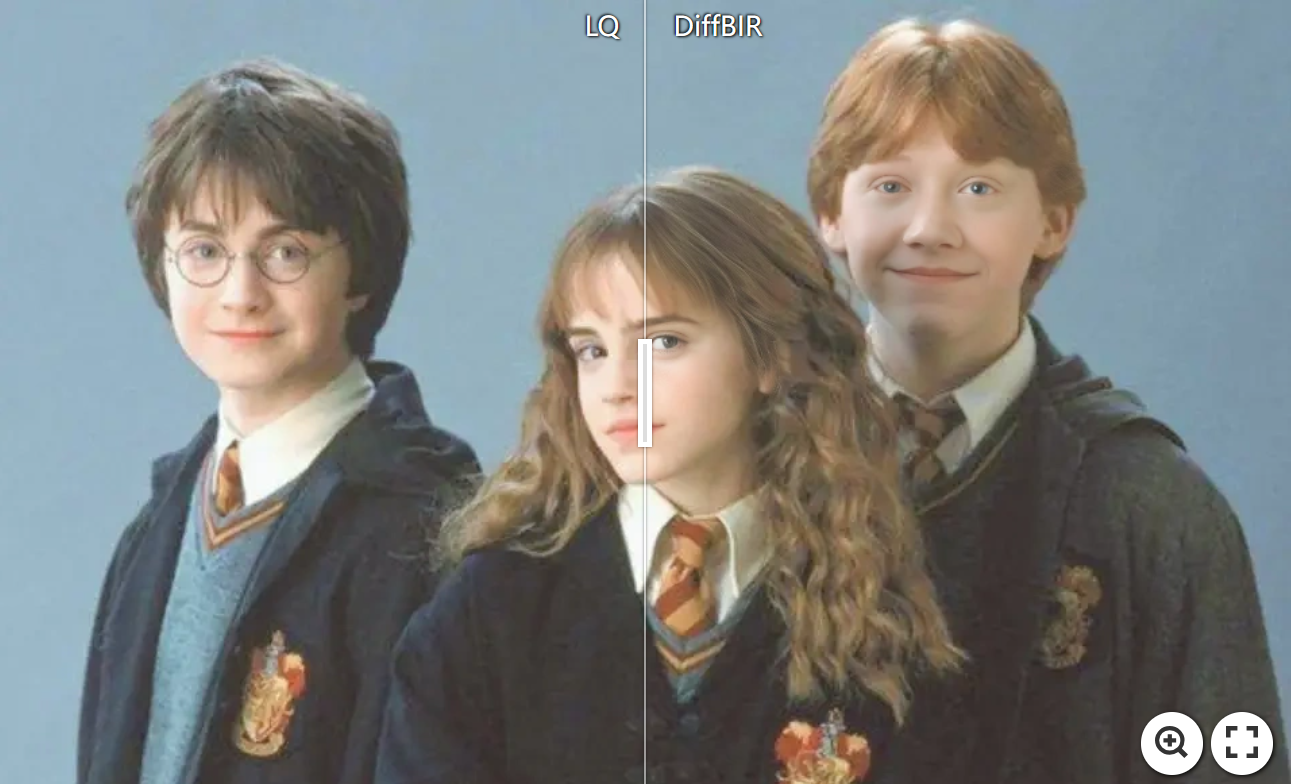

954 KB

3.95 MB
