first commit

Showing

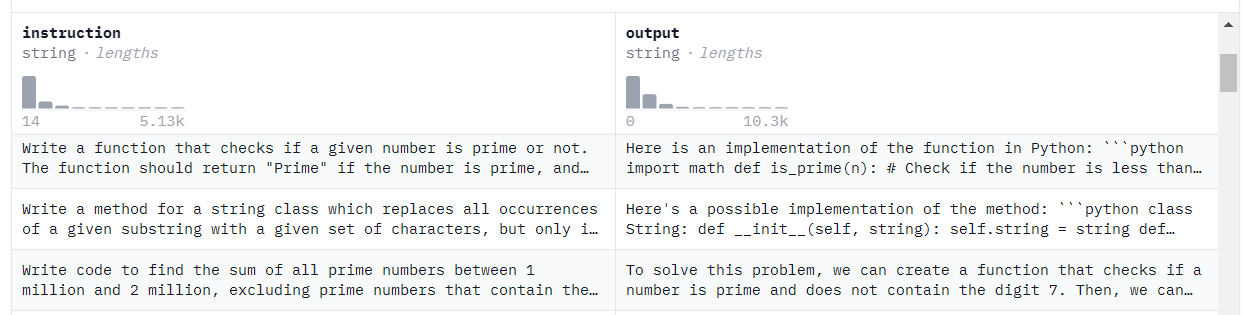

assets/dataset.png (new file, mode 100644, 26.6 KB)

finetune/train_ft.sh (new file, mode 100644)

inference.py (new file, mode 100644)