dalle2_pytorch

517 KB

26.8 KB

examples/landscapes.png

0 → 100644

258 KB

908 KB

examples/variations/jeep.png

0 → 100644

220 KB

262 KB

gradio_inference.py

0 → 100644

icon.png

0 → 100644

68.4 KB

113 KB

96.8 KB

71.5 KB

142 KB

91.8 KB

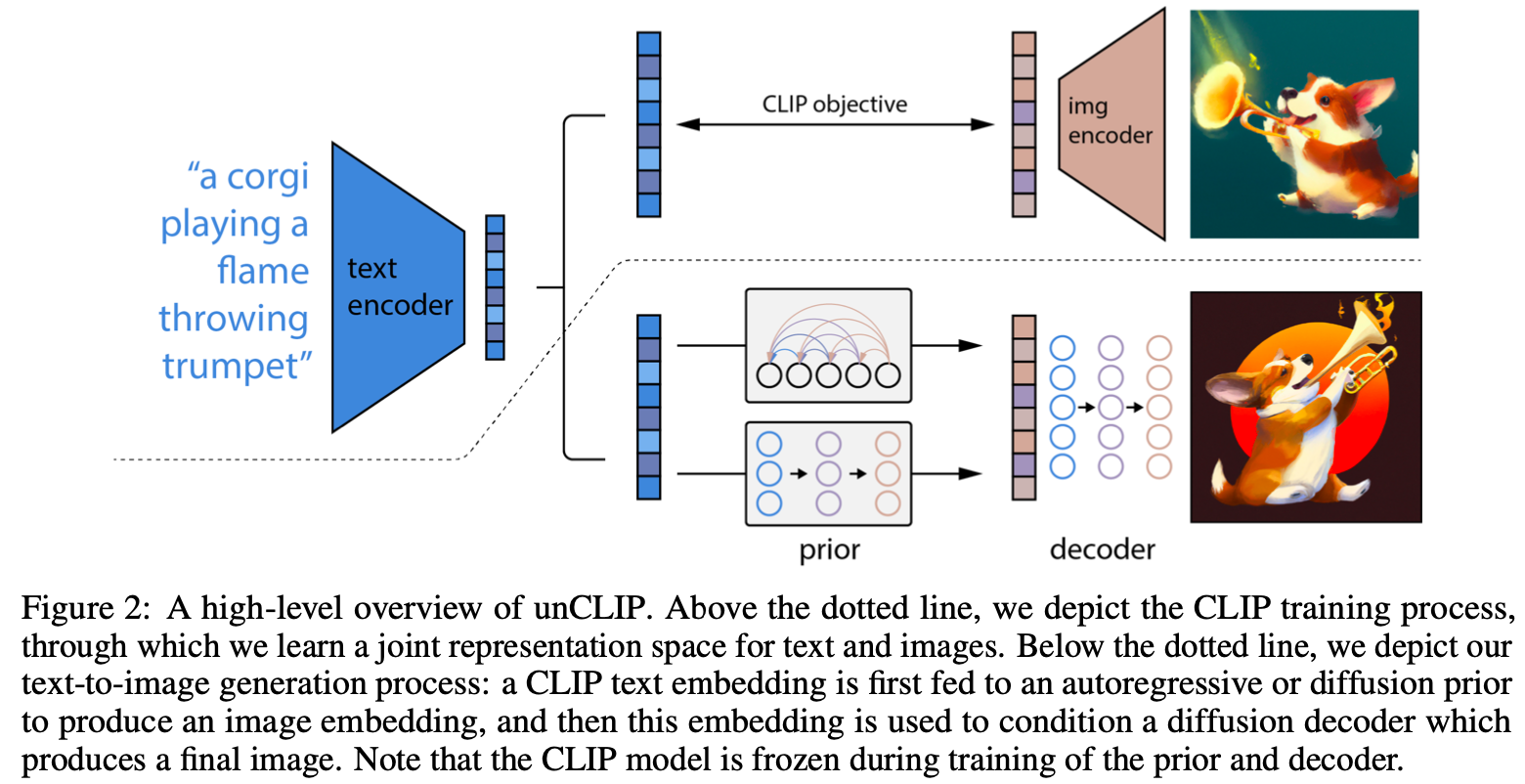

images/dalle2.png

0 → 100644

425 KB

requirements.txt

0 → 100644

pydantic==1.10.6

dalle2-pytorch==1.1.0

datasets

setup.py

0 → 100644

train_decoder.py

0 → 100644

train_prior.py

0 → 100644