update codes

local_mode/services/chat.py

0 → 100644

metric/README.md

0 → 100644

metric/README_zh.md

0 → 100644

metric/pics/Bigcodebench.png

0 → 100644

213 KB

metric/pics/FunctionCall.png

0 → 100644

72.7 KB

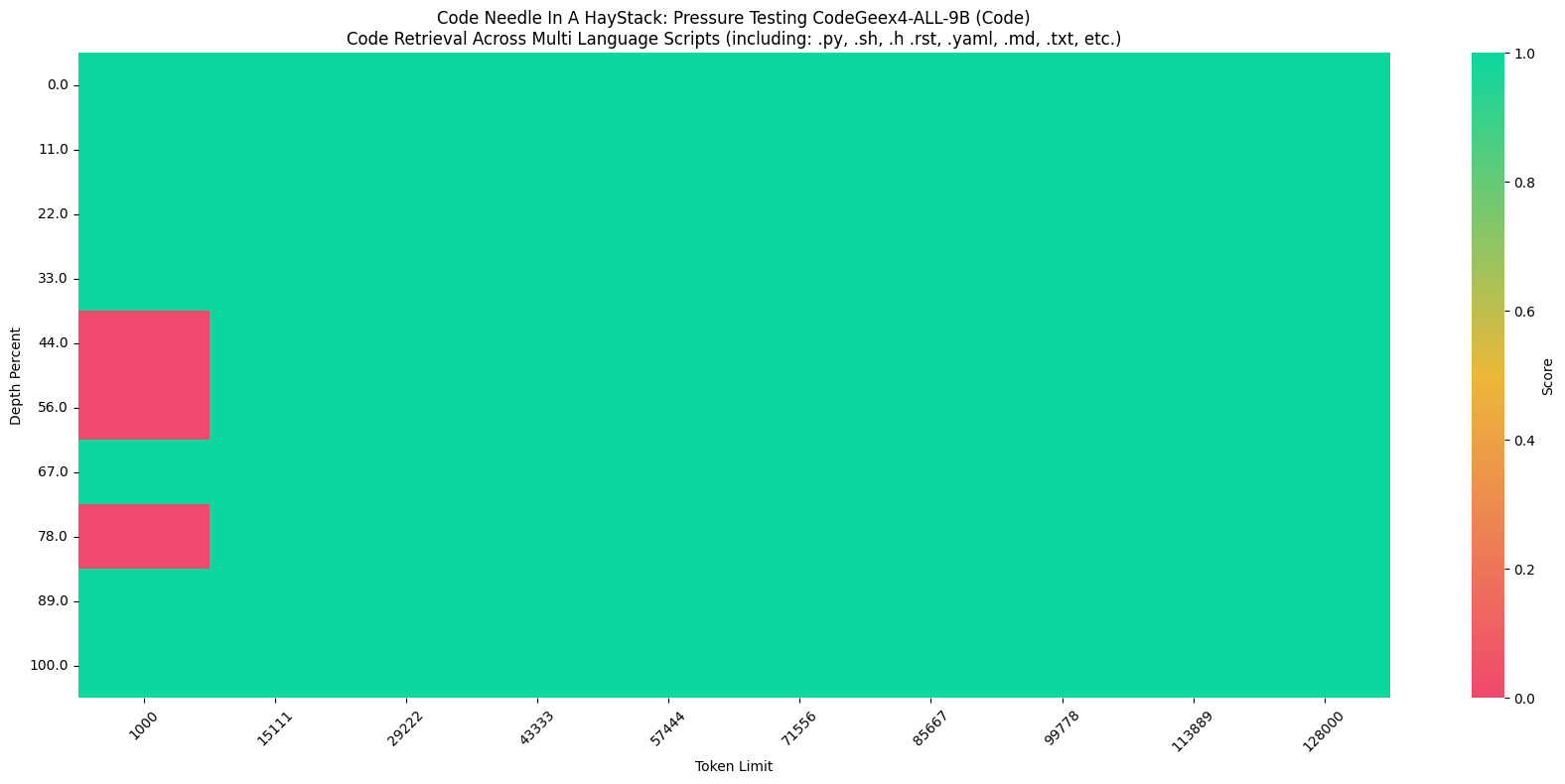

metric/pics/NIAH_ALL.png

0 → 100644

61.4 KB

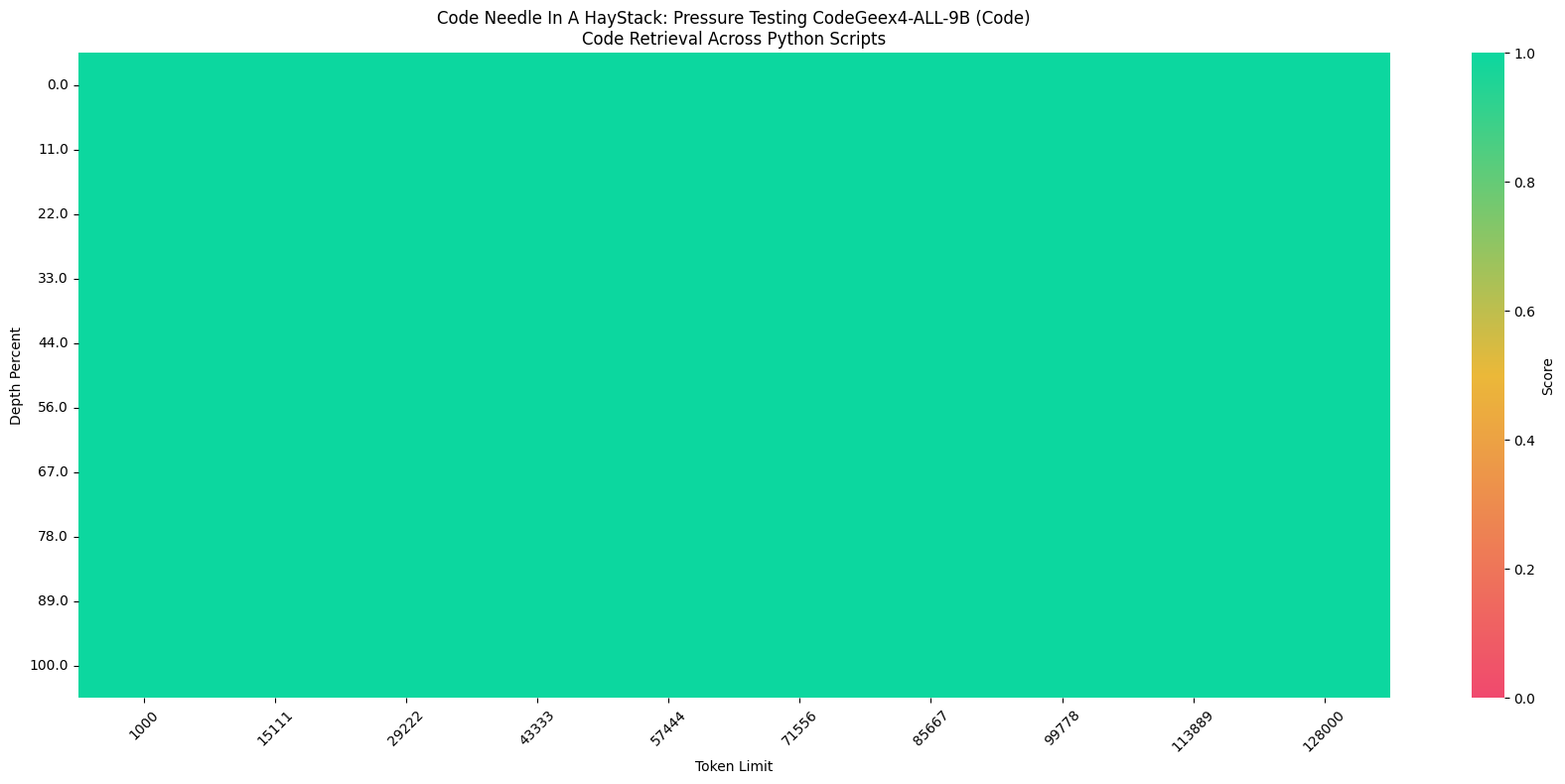

metric/pics/NIAH_PYTHON.png

0 → 100644

57.8 KB

metric/pics/cce.jpg

0 → 100644

136 KB

model.properties

0 → 100644

repodemo/.env

0 → 100644

repodemo/chainlit.md

0 → 100644

repodemo/chainlit_zh-CN.md

0 → 100644
