update codes

LICENSE

0 → 100644

MODEL_LICENSE

0 → 100644

README_ori.md

0 → 100644

README_zh.md

0 → 100644

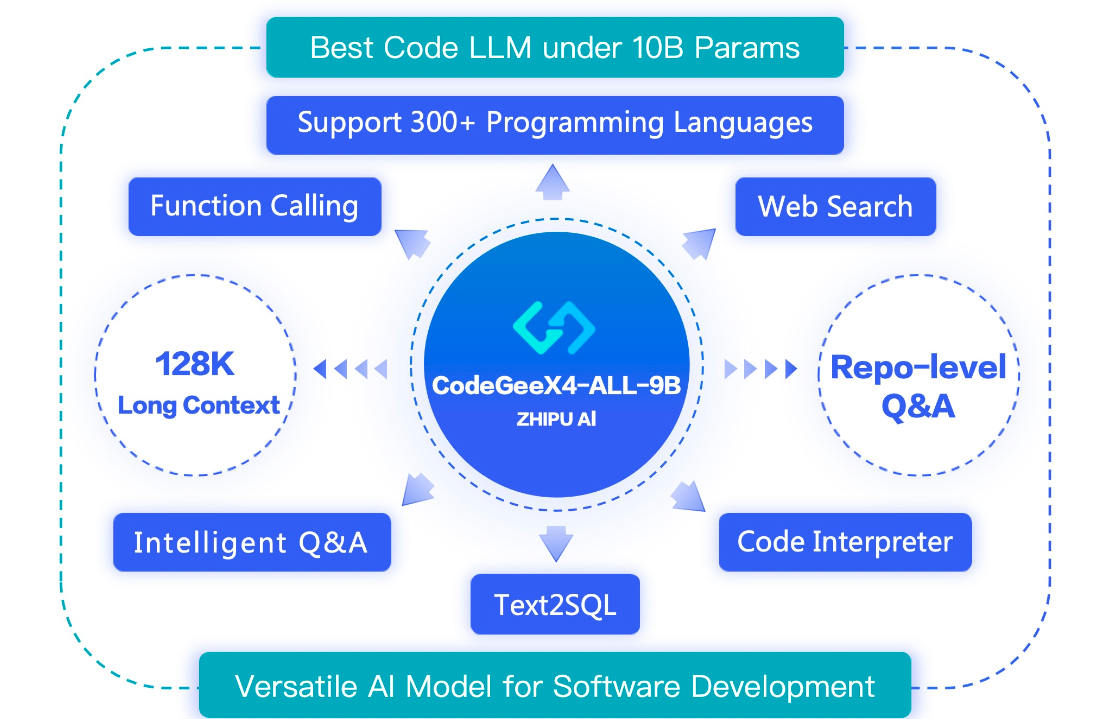

asserts/model.png

0 → 100644

419 KB

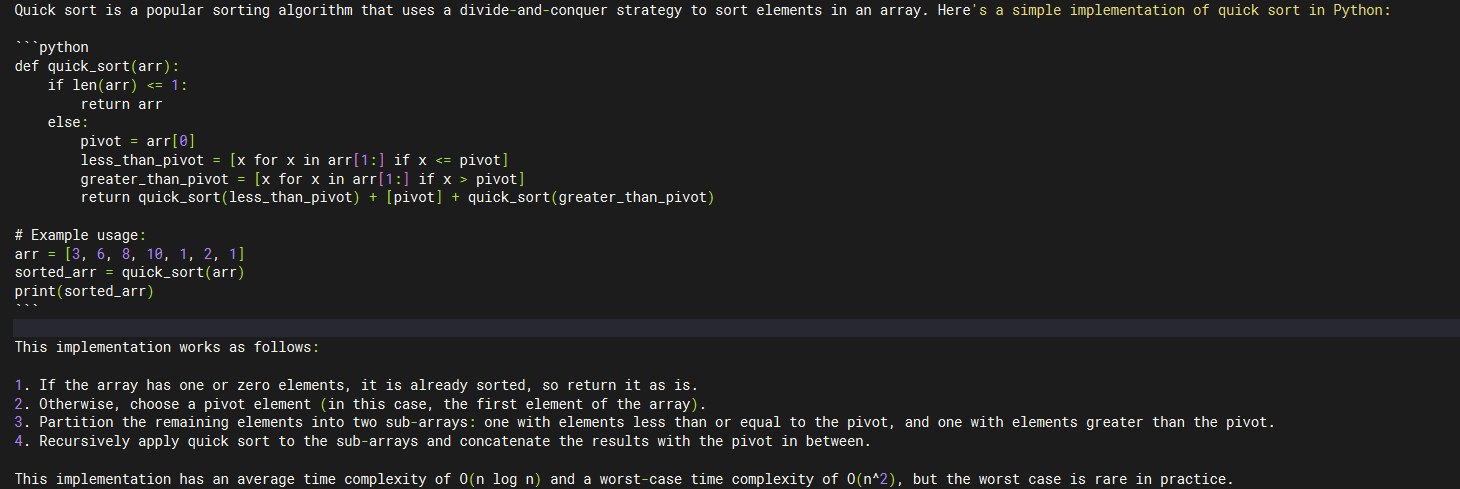

asserts/result.png

0 → 100644

37 KB

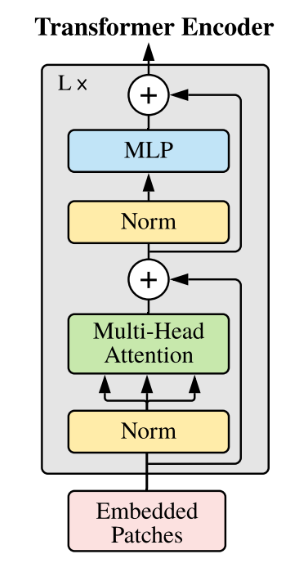

asserts/transformers.png

0 → 100644

31.3 KB

docker/Dockerfile

0 → 100644

function_call_demo/main.py

0 → 100644

icon.png

0 → 100644

62.1 KB

inference.py

0 → 100644