update codes

web_demo/backend/apis/api.py

0 → 100644

web_demo/main.py

0 → 100644

web_demo/requirements.txt

0 → 100644

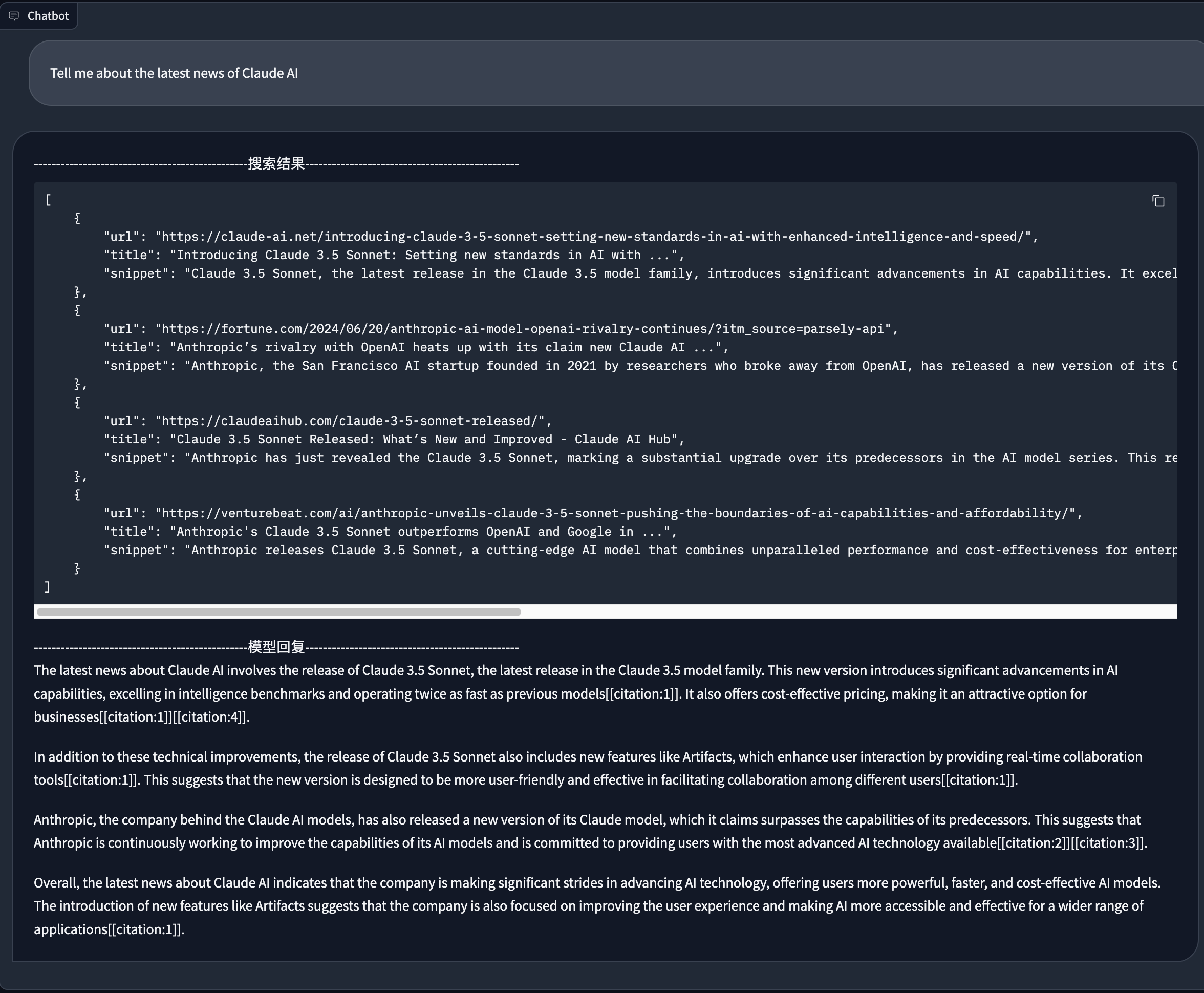

web_demo/resources/demo.png

0 → 100644

514 KB

665 KB