
update codes


interpreter_demo/README.md (0 → 100644)
interpreter_demo/SANDBOX.md (0 → 100644)
interpreter_demo/app.py (0 → 100644)
interpreter_demo/data.csv (0 → 100644)
interpreter_demo/image.png (0 → 100644, 367 KB)
interpreter_demo/sandbox.py (0 → 100644)

langchain_demo/README.md (0 → 100644)
langchain_demo/README_zh.md (0 → 100644)

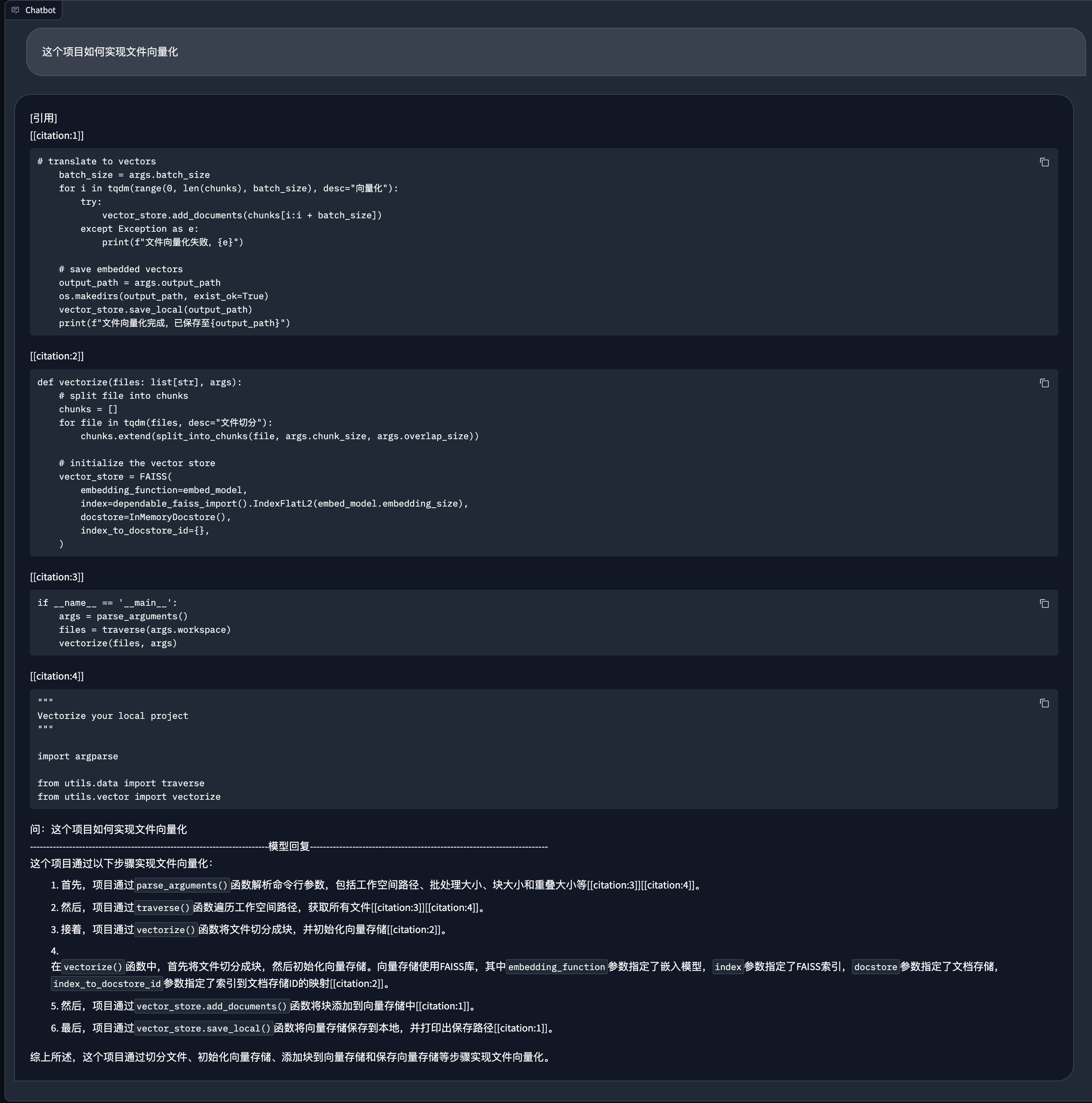

langchain_demo/chat.py (0 → 100644)


langchain_demo/utils/data.py (0 → 100644)