codeformer


inputs/whole_imgs/05.jpg

0 → 100644

7.68 KB

inputs/whole_imgs/06.png

0 → 100644

668 KB

model.properties

0 → 100644

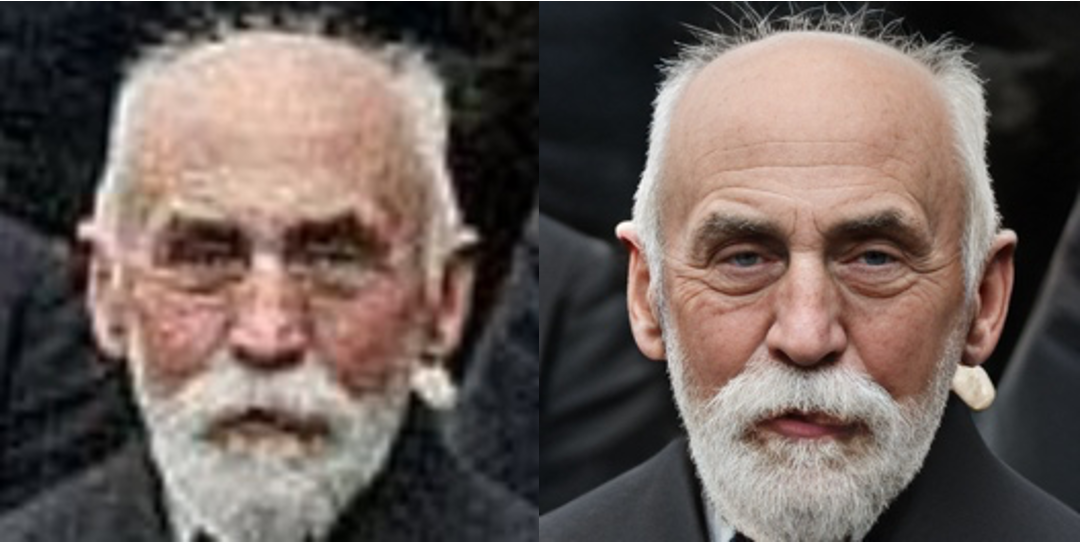

readme_images/image-1.png

0 → 100644

318 KB

readme_images/image-2.png

0 → 100644

252 KB

readme_images/image-3.png

0 → 100644

1.21 MB

requirements.txt

0 → 100644

addict
future
lmdb
numpy
opencv-python
Pillow==9.4.0
pyyaml
requests
scikit-image
scipy
tb-nightly
torch>=1.7.1
torchvision
tqdm
yapf
lpips
gdown # supports downloading the large file from Google Drive
dlib
\ No newline at end of file
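The requirements listed above mix unpinned names with one exact pin (`Pillow==9.4.0`), one minimum version (`torch>=1.7.1`), and one line carrying a trailing comment (`gdown`). As a rough sketch, not something shipped in this commit, the snippet below shows one way to read such lines and report which distributions are already installed. The helper names `parse_requirement` and `installed_version` are hypothetical, and the parsing is deliberately simplified: it only splits a name from whatever specifier follows it and does not evaluate the version constraint.

```python
import re
from importlib import metadata

# A few lines copied from the requirements.txt shown in this diff.
SAMPLE_REQUIREMENTS = """\
addict
Pillow==9.4.0
torch>=1.7.1
gdown # supports downloading the large file from Google Drive
"""


def parse_requirement(line):
    """Split one requirements line into (name, specifier).

    Returns None for blank or comment-only lines. This is a simplified
    parser (an assumption for illustration), not pip's full grammar.
    """
    # Drop any trailing "# comment" and surrounding whitespace.
    line = line.split("#", 1)[0].strip()
    if not line:
        return None
    match = re.match(r"^([A-Za-z0-9._-]+)\s*(.*)$", line)
    if match is None:
        return None
    return match.group(1), match.group(2).strip()


def installed_version(name):
    """Return the installed version string for a distribution, or None."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    for raw_line in SAMPLE_REQUIREMENTS.splitlines():
        parsed = parse_requirement(raw_line)
        if parsed is None:
            continue
        name, specifier = parsed
        found = installed_version(name)
        status = found if found is not None else "not installed"
        print(f"{name:15s} wanted: {specifier or 'any':10s} found: {status}")
```

The check only reports what is present; comparing the found version against the specifier would need a proper version parser, which is left out here to keep the sketch dependency-free.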

scripts/crop_align_face.py

0 → 100755

scripts/inference_vqgan.py

0 → 100644

web-demos/replicate/cog.yaml

0 → 100644