"tools/docker/ubuntu_2204.dockerfile" did not exist on "ca8a54fe732e725f0e22ebc09187bd71faf131a5"

first commit

crnn/util.py

0 → 100644

dbnet/.DS_Store

0 → 100644

File added

dbnet/dbnet_infer.py

0 → 100644

dbnet/decode.py

0 → 100644

dbnet/test.jpg

0 → 100644

155 KB

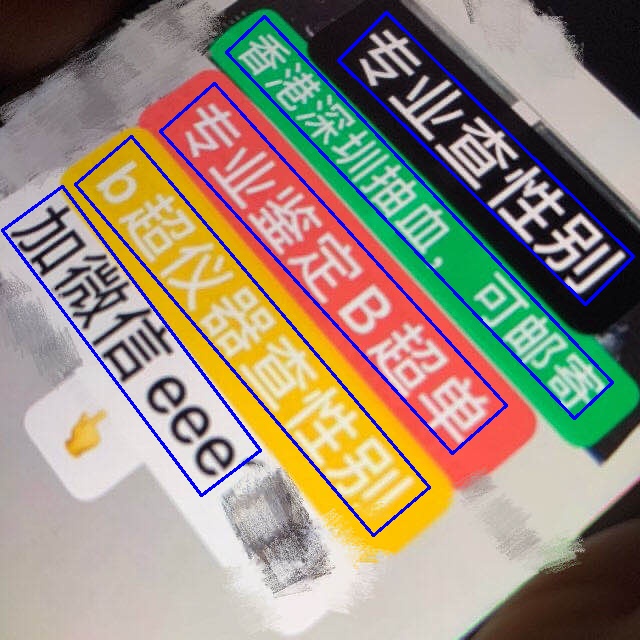

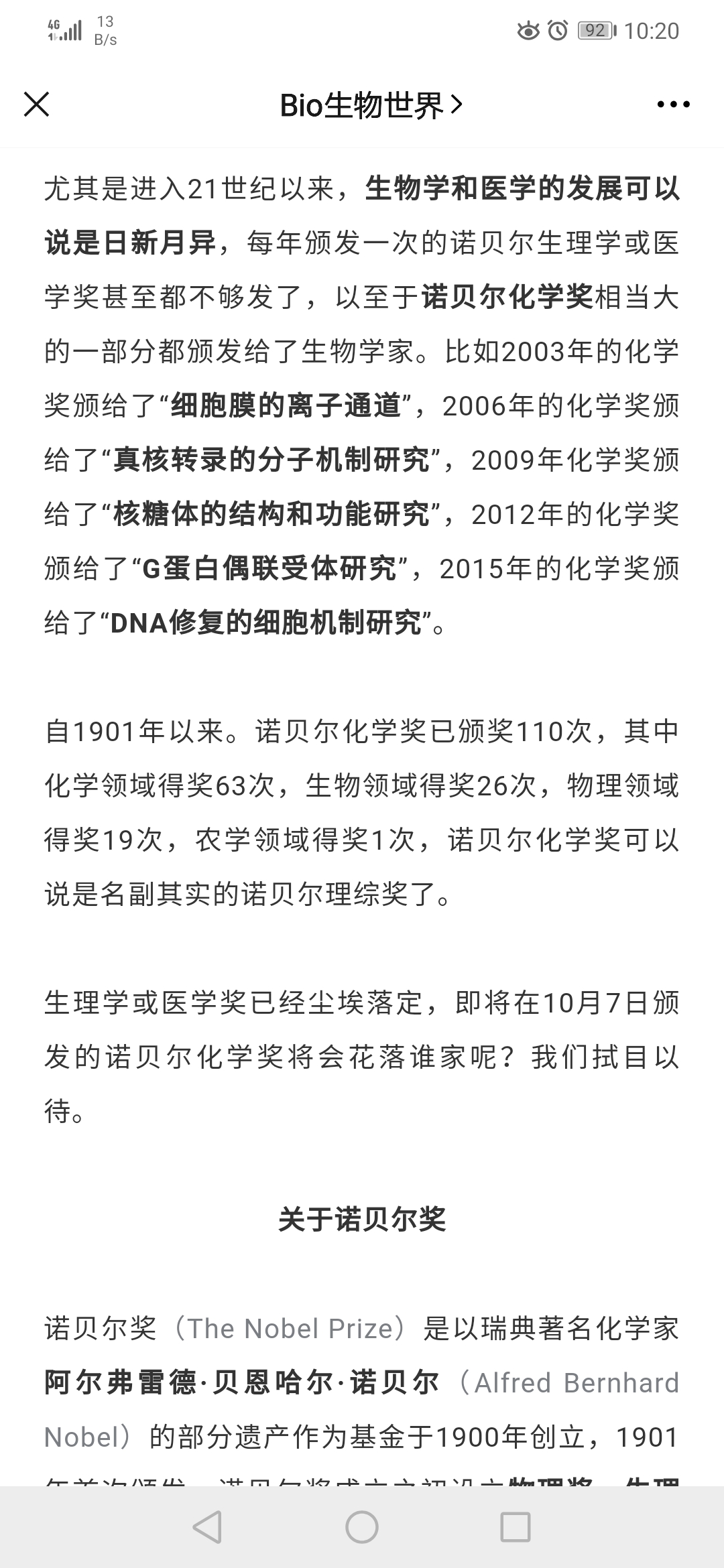

images/1.jpg

0 → 100644

60.4 KB

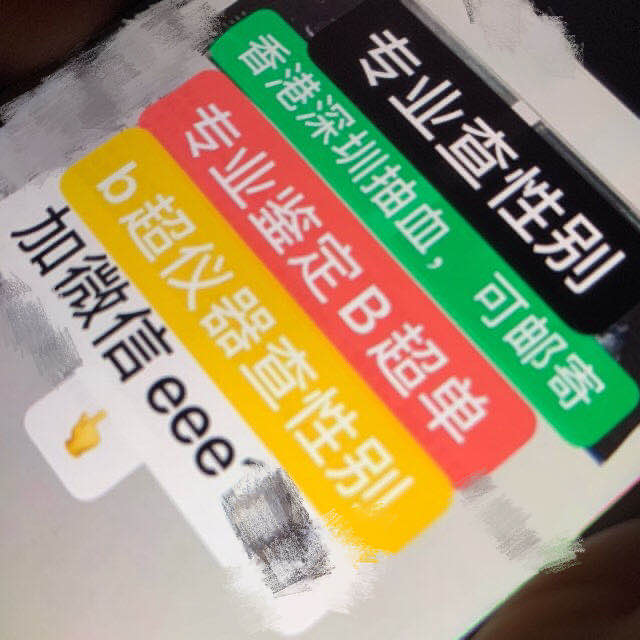

images/2.jpg

0 → 100644

31.2 KB

images/3.jpg

0 → 100644

79.6 KB

images/4.jpg

0 → 100644

68.7 KB

images/5.jpg

0 → 100644

172 KB

images/6.jpg

0 → 100644

555 KB

images/7.jpg

0 → 100644

560 KB

images/8.jpg

0 → 100644

197 KB

images/clear.cmd

0 → 100755

images/clear.sh

0 → 100755

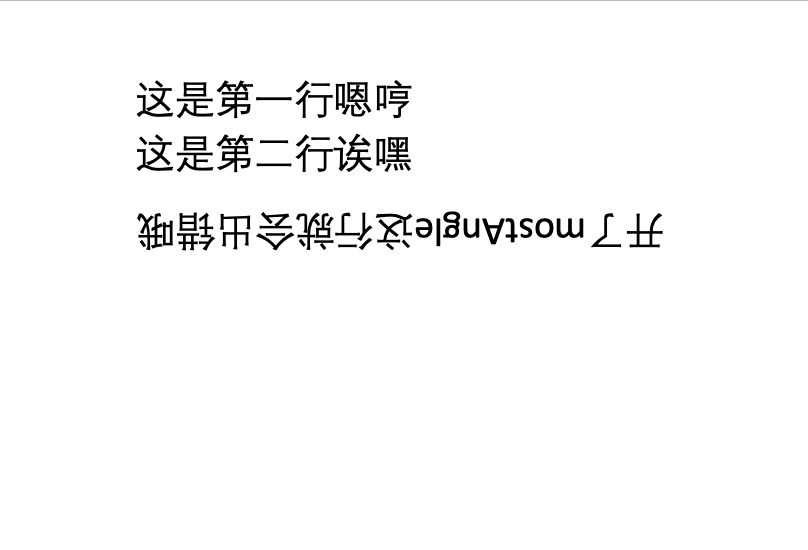

images/mostAngle.jpg

0 → 100644

64.7 KB

main.py

0 → 100644

models/angle_net.onnx

0 → 100755

File added