first commit


tools/infer/utility.py (new file, mode 100755)

tools/program.py (new file, mode 100755)

utils.py (new file, mode 100644)

warmup_images_5/ArT_0_.jpg (new file, mode 100644, 147 KB)

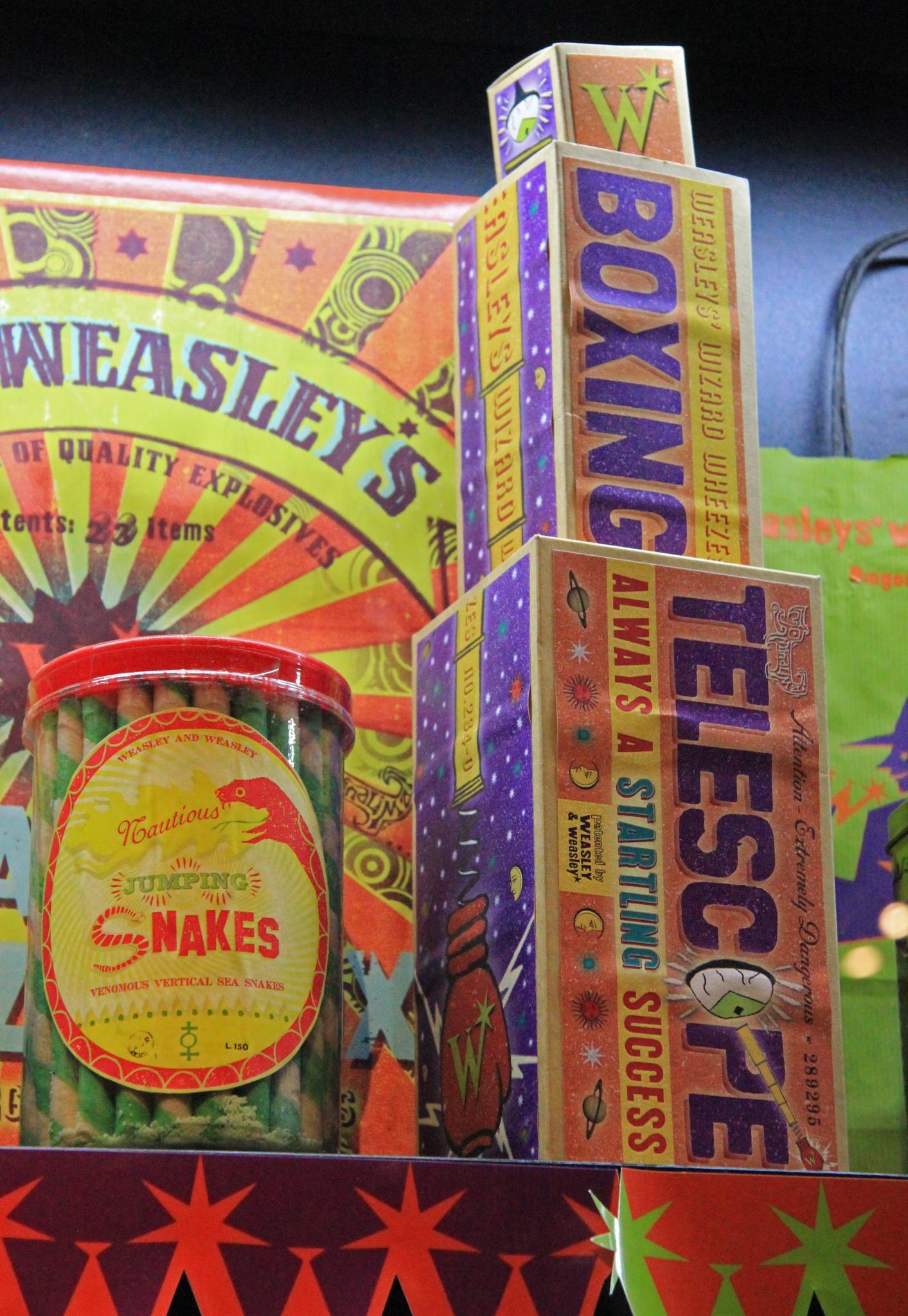

warmup_images_5/ArT_24_.jpg (new file, mode 100644, 798 KB)

warmup_images_5/ArT_262_.jpg (new file, mode 100644, 741 KB)

warmup_images_5/ArT_5_.jpg (new file, mode 100644, 644 KB)

warmup_images_5/ArT_7_.jpg (new file, mode 100644, 224 KB)

warmup_images_rec/ArT_0.jpg (new file, mode 100755, 1.22 KB)

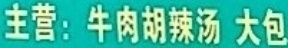

warmup_images_rec/ArT_1.jpg (new file, mode 100755, 8.36 KB)

25.5 KB

16.7 KB

warmup_images_rec/ArT_2.jpg (new file, mode 100755, 2.03 KB)

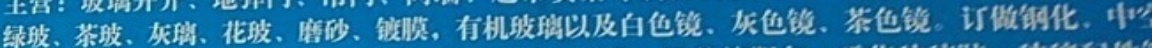

warmup_images_rec/ArT_22.jpg (new file, mode 100755, 12.1 KB)

16.6 KB

16.8 KB

16.6 KB

warmup_images_rec/ArT_3.jpg (new file, mode 100755, 2.52 KB)