提交chatglm3推理

Showing

README.md

0 → 100644

chatglm_export.py

0 → 100644

cli_demo.py

0 → 100644

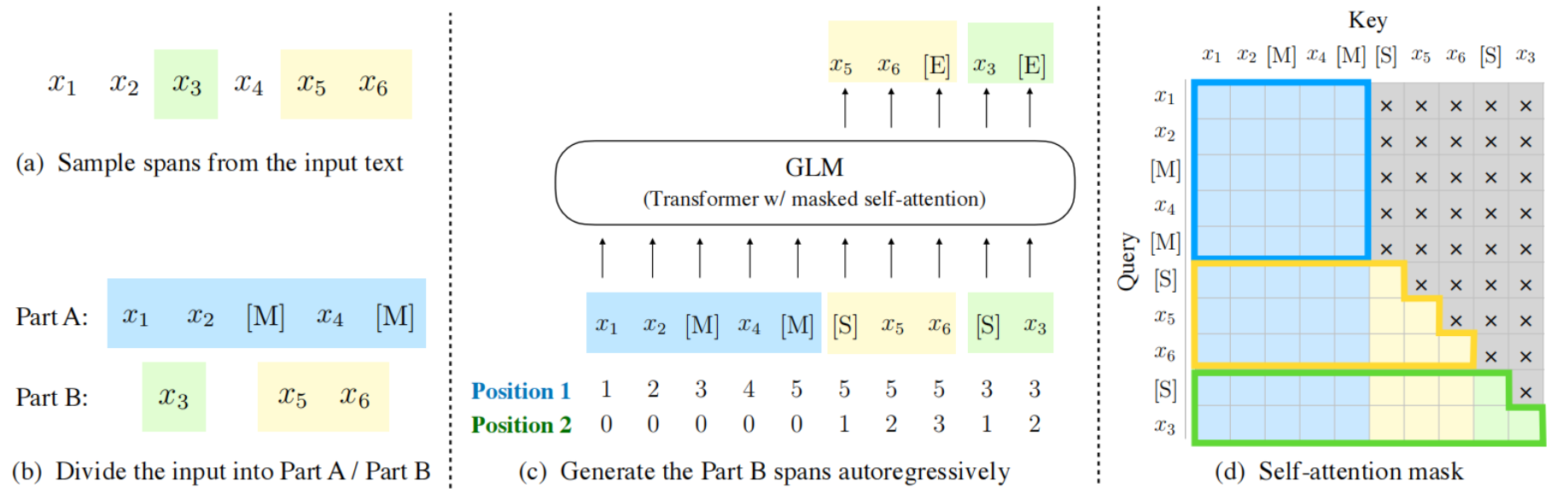

doc/GLM.png

0 → 100644

261 KB

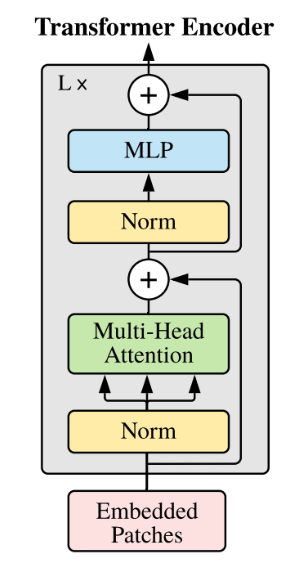

doc/transformers.jpg

0 → 100644

32.7 KB

model.properties

0 → 100644

File added

package/setup.py

0 → 100644

requirements.txt

0 → 100644

| transformers==4.30.2 | ||

| streamlit>=1.24.0 | ||

| sentencepiece | ||

| urllib3==1.26.16 |

web_demo.py

0 → 100644