Revert "Merge branch 'master' into 'master'"

This reverts merge request !2

Showing

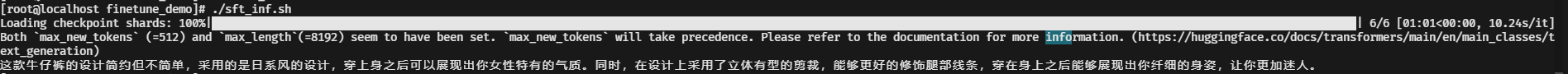

finetune_demo/sft.sh

deleted

100644 → 0

langchain_demo/README.md

0 → 100644

langchain_demo/utils.py

0 → 100644

media/result1.jpg

deleted

100644 → 0

31.1 KB

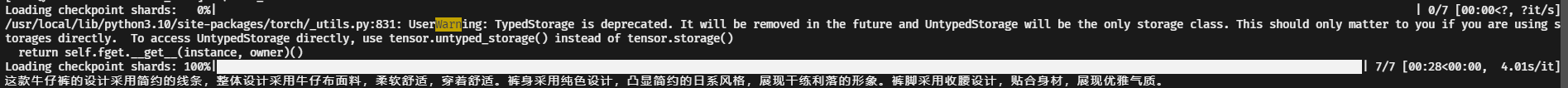

media/result2.jpg

deleted

100644 → 0

37.9 KB

model.properties

0 → 100644