bloom


Too many changes to show. To preserve performance, only 332 of 332+ files are displayed.

libai/utils/logger.py

0 → 100644

libai/utils/timer.py

0 → 100644

model.properties

0 → 100644

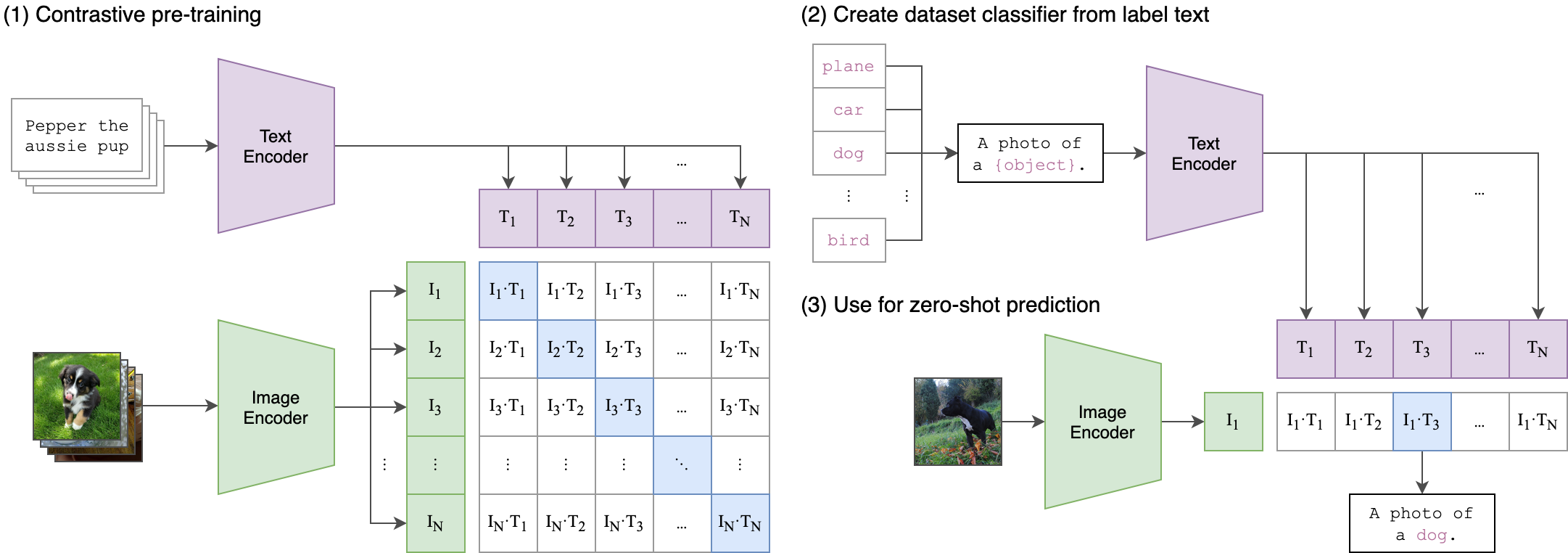

projects/CLIP/CLIP.png

0 → 100644

247 KB

projects/CLIP/README.md

0 → 100644

projects/CLIP/clip/clip.py

0 → 100644

projects/CLIP/clip/model.py

0 → 100644

projects/CLIP/clip/ops.py

0 → 100644