Merge branch 'dtk24.04.1'

alphafold/version.py

0 → 100644

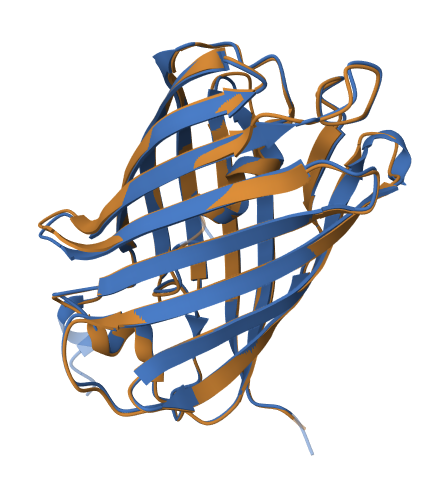

image.png

0 → 100644

130 KB

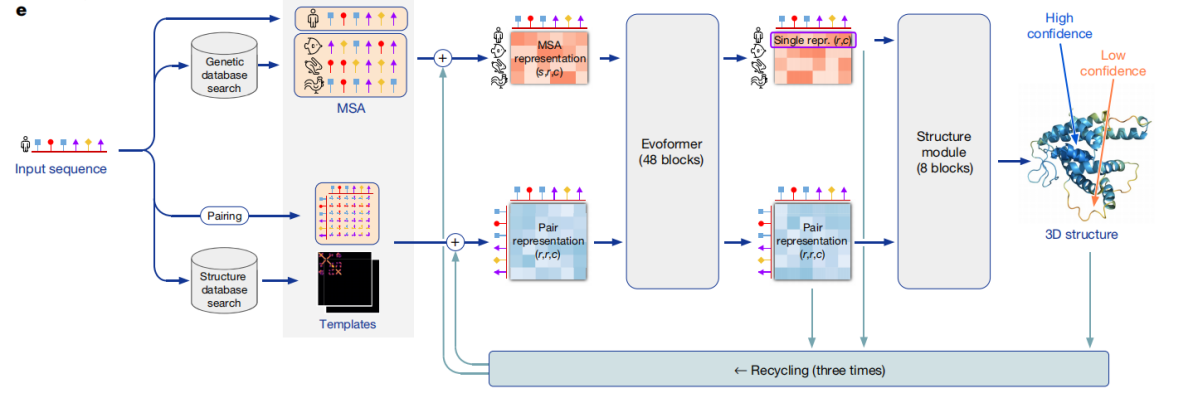

readme_imgs/alphafold2.png

0 → 100644

130 KB

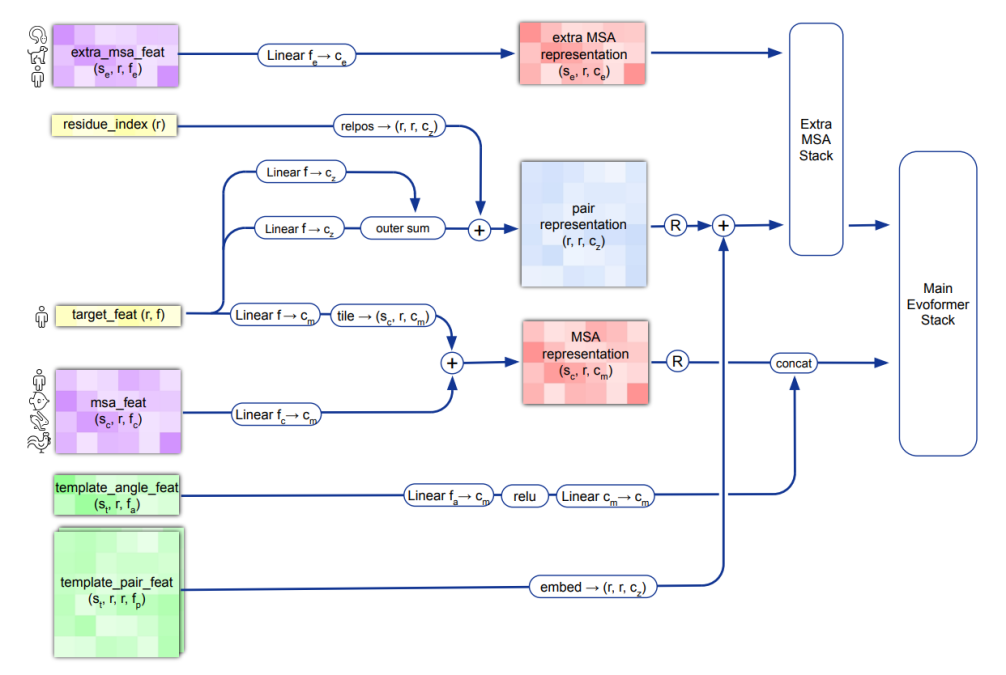

readme_imgs/alphafold2_1.png

0 → 100644

134 KB

| Package | Before | After |
| --- | --- | --- |
| absl-py | 1.0.0 | 1.0.0 |
| biopython | 1.79 | 1.79 |
| chex | 0.0.7 | 0.1.86 |
| dm-haiku | 0.0.9 | 0.0.12 |
| dm-tree | 0.1.6 | 0.1.8 |
| docker | commented out (`# docker==5.0.0`) | 5.0.0 |
| immutabledict | 2.0.0 | 2.0.0 |
| jax | commented out (`# jax==0.3.25`) | 0.4.26 |
| ml-collections | 0.1.0 | 0.1.0 |
| numpy | 1.21.6 | 1.24.3 |
| pandas | 1.3.4 | 2.0.3 |
| scipy | 1.7.0 | 1.11.1 |
| tensorflow-cpu | commented out (`# tensorflow-cpu==2.11.0`) | 2.16.1 |
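To confirm that an environment matches the pins listed above, a small check along these lines may help. It is a minimal sketch, not part of this merge: `parse_pins` and `check_pins` are hypothetical helper names, and where a package appears twice in the listing the higher version is assumed to be the one the merge leaves in place.

```python
from importlib import metadata

# Pins copied from the listing above. Assumption: where a package appears
# twice, the higher version is the one this merge leaves in place.
PINS = """
absl-py==1.0.0
biopython==1.79
chex==0.1.86
dm-haiku==0.0.12
dm-tree==0.1.8
docker==5.0.0
immutabledict==2.0.0
jax==0.4.26
ml-collections==0.1.0
numpy==1.24.3
pandas==2.0.3
scipy==1.11.1
tensorflow-cpu==2.16.1
"""


def parse_pins(text):
    """Parse 'name==version' lines, skipping blank lines and '#' comments."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        name, _, version = line.partition("==")
        pins[name.strip()] = version.strip()
    return pins


def _installed_version(name):
    """Return the installed version of a distribution, or None if absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def check_pins(pins, installed_version=None):
    """Return {name: (wanted, found)} for every package that does not match.

    `found` is None when the package is not installed. A custom lookup can
    be passed in for testing; by default importlib.metadata is used.
    """
    if installed_version is None:
        installed_version = _installed_version
    mismatches = {}
    for name, wanted in pins.items():
        found = installed_version(name)
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in sorted(check_pins(parse_pins(PINS)).items()):
        print(f"{name}: pinned {wanted}, found {found or 'not installed'}")
```

Running the script prints one line per package whose installed version differs from its pin, and nothing when the environment matches.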

requirements_dcu.txt

0 → 100644