"git@developer.sourcefind.cn:modelzoo/solov2-pytorch.git" did not exist on "a8ec6fb3a7f23d8fd98fa906bfe5de3fd58a8f75"

omniparser

Showing

.gitignore

0 → 100644

Dockerfile

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_official.md

0 → 100644

SECURITY.md

0 → 100644

demo.ipynb

0 → 100644

demo.py

0 → 100644

docs/Evaluation.md

0 → 100644

eval/logs_sspro_omniv2.json

0 → 100644

This source diff could not be displayed because it is too large. You can view the blob instead.

eval/ss_pro_gpt4o_omniv2.py

0 → 100755

gradio_demo.py

0 → 100644

icon.png

0 → 100644

77.3 KB

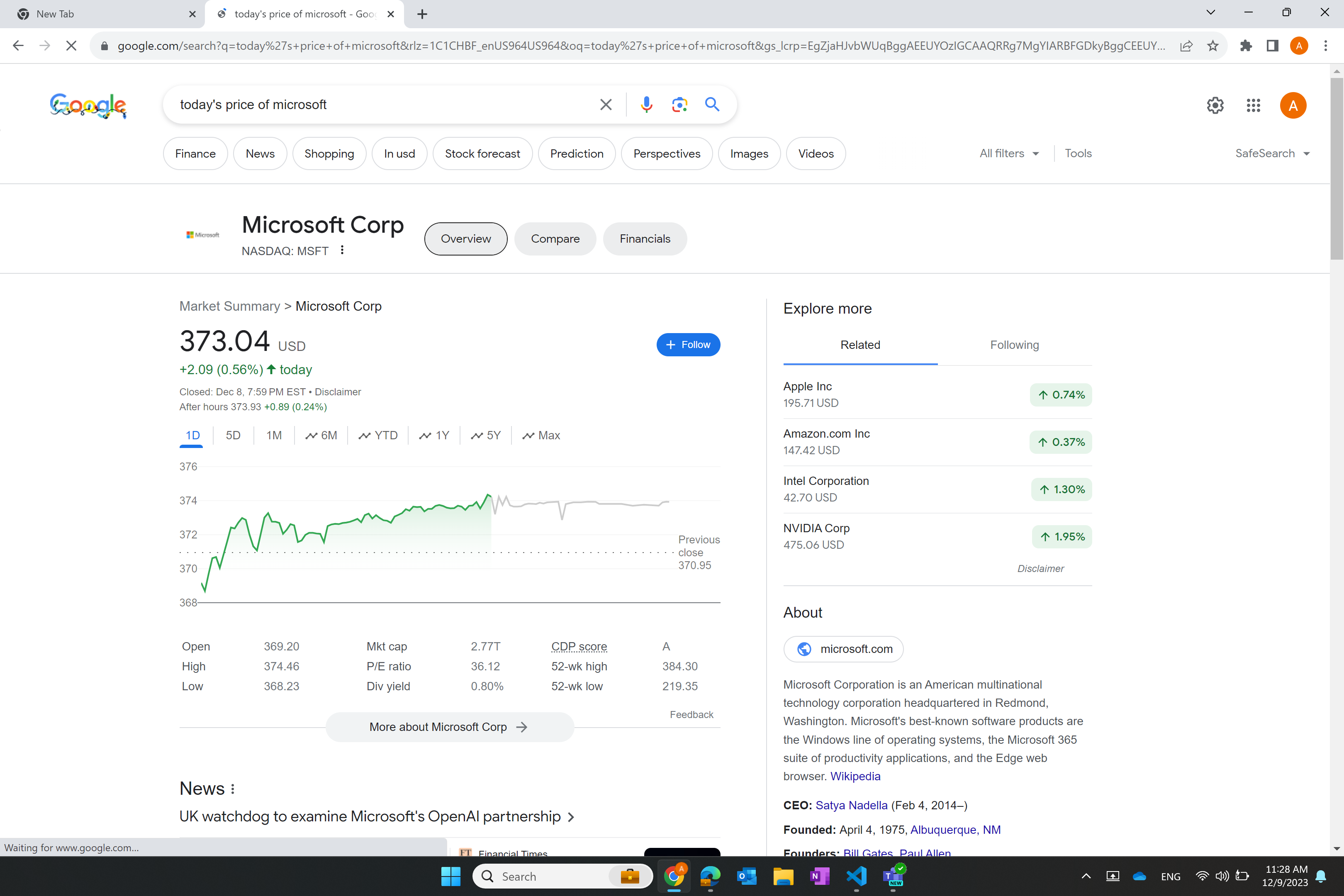

imgs/demo_image.jpg

0 → 100644

560 KB

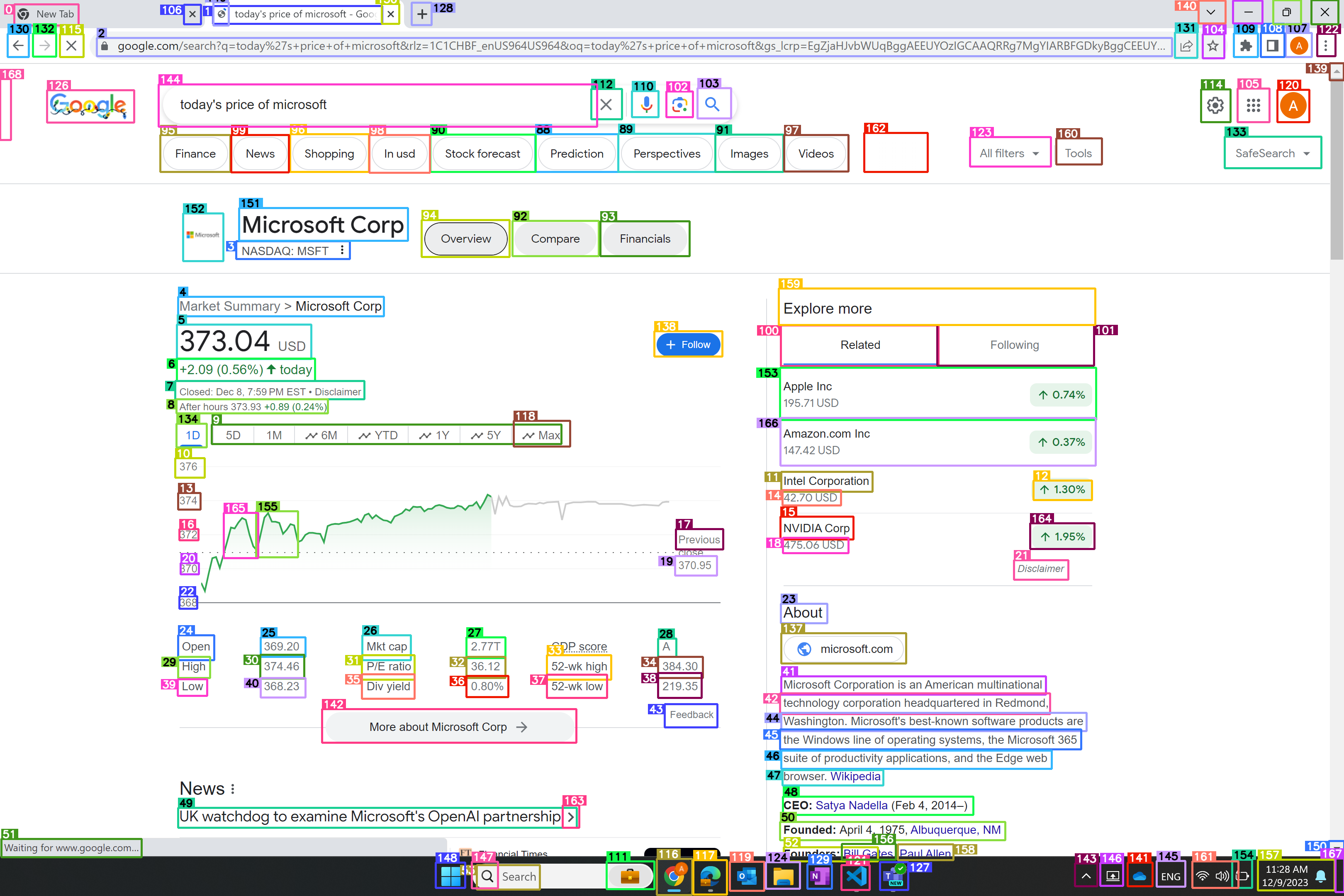

imgs/demo_image_som.jpg

0 → 100644

720 KB

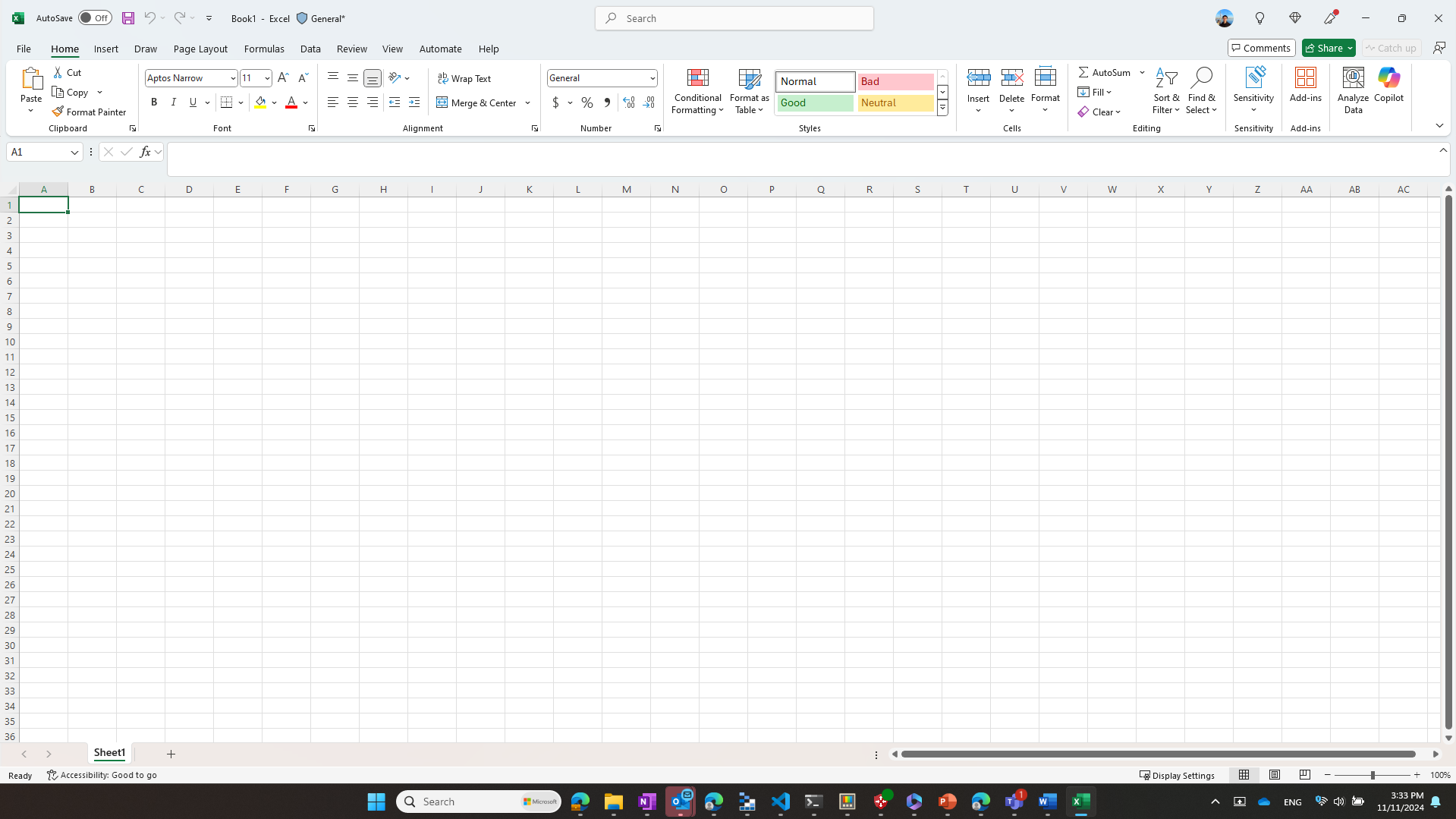

imgs/excel.png

0 → 100644

150 KB

imgs/google_page.png

0 → 100644

324 KB

imgs/gradioicon.png

0 → 100644

32.9 KB

imgs/header_bar.png

0 → 100644

251 KB

imgs/header_bar_thin.png

0 → 100644

85.6 KB