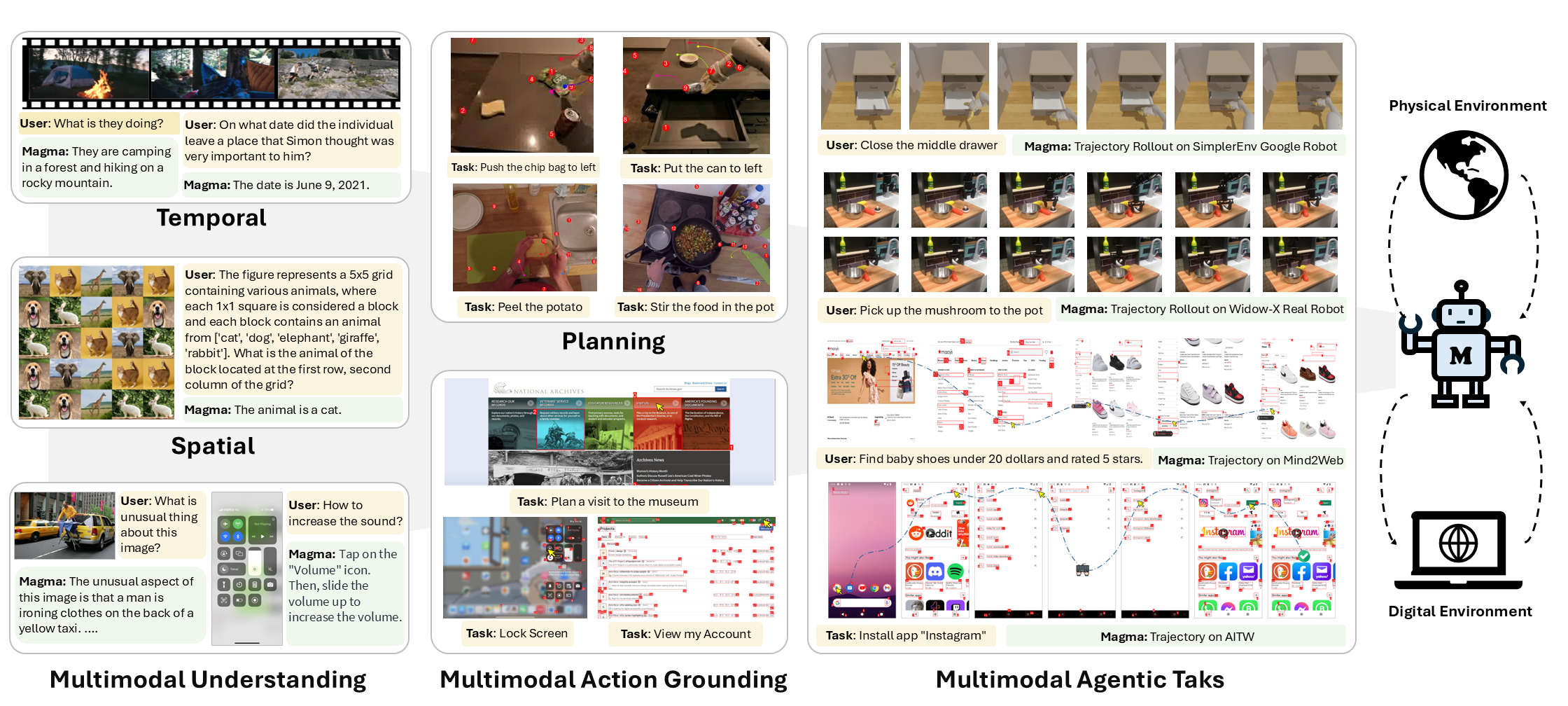

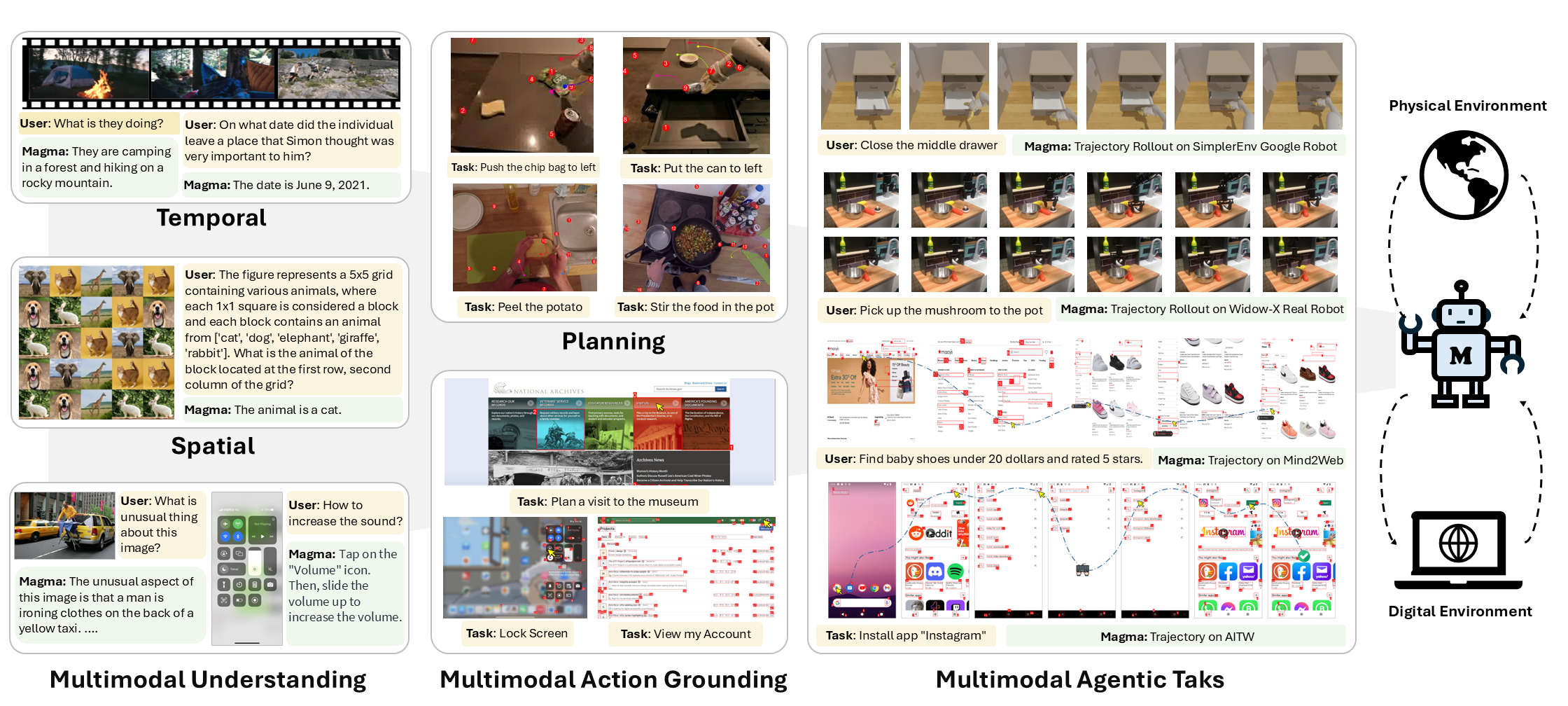

🤖 Magma: A Foundation Model for Multimodal AI Agents

[Jianwei Yang](https://jwyang.github.io/)

*1†

[Reuben Tan](https://cs-people.bu.edu/rxtan/)

1†

[Qianhui Wu](https://qianhuiwu.github.io/)

1†

[Ruijie Zheng](https://ruijiezheng.com/)

2‡

[Baolin Peng](https://scholar.google.com/citations?user=u1CNjgwAAAAJ&hl=en&oi=ao)

1‡

[Yongyuan Liang](https://cheryyunl.github.io)

2‡

[Yu Gu](http://yu-gu.me/)

1

[Mu Cai](https://pages.cs.wisc.edu/~mucai/)

3

[Seonghyeon Ye](https://seonghyeonye.github.io/)

4

[Joel Jang](https://joeljang.github.io/)

5

[Yuquan Deng](https://scholar.google.com/citations?user=LTC0Q6YAAAAJ&hl=en)

5

[Lars Liden](https://sites.google.com/site/larsliden)

1

[Jianfeng Gao](https://www.microsoft.com/en-us/research/people/jfgao/)

1▽

1 Microsoft Research;

2 University of Maryland;

3 University of Wisconsin-Madison

4 KAIST;

5 University of Washington

* Project lead

† First authors

‡ Second authors

▽ Leadership

To Appear at CVPR 2025