v1.0

agents/ui_agent/util/som.py

0 → 100644

assets/images/apple.png

0 → 100644

795 KB

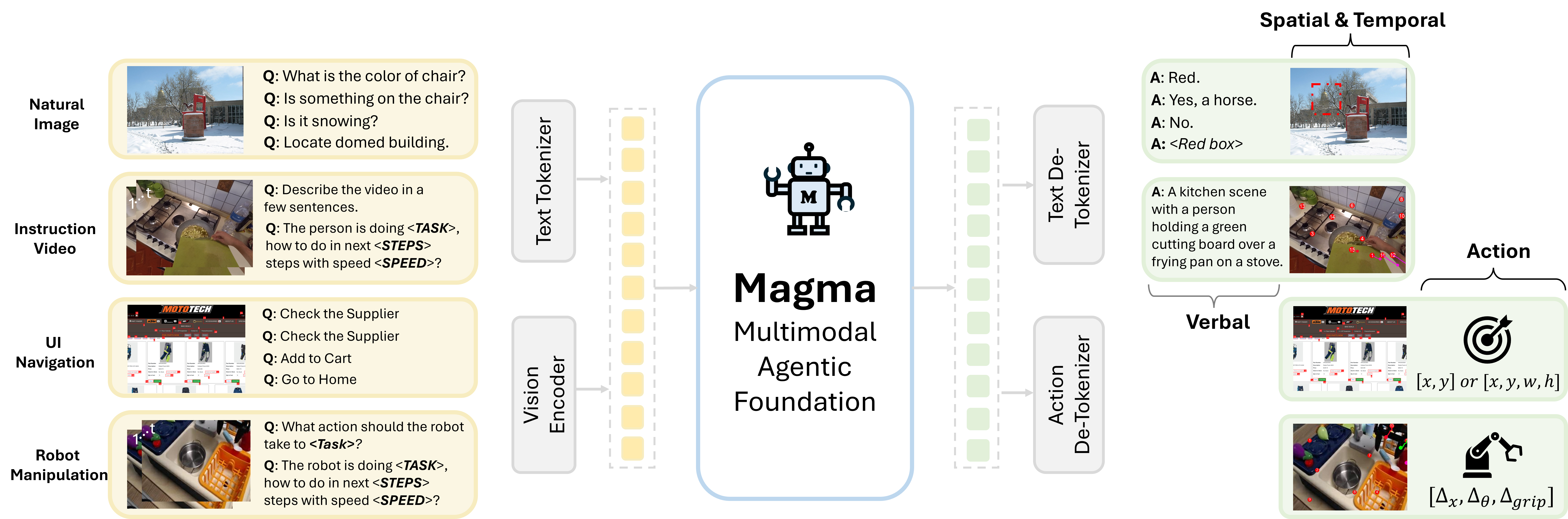

assets/images/magma_game.png

0 → 100644

17.1 KB

84.9 KB

assets/images/magma_logo.jpg

0 → 100644

35 KB

4.98 MB

1.49 MB

6.16 MB

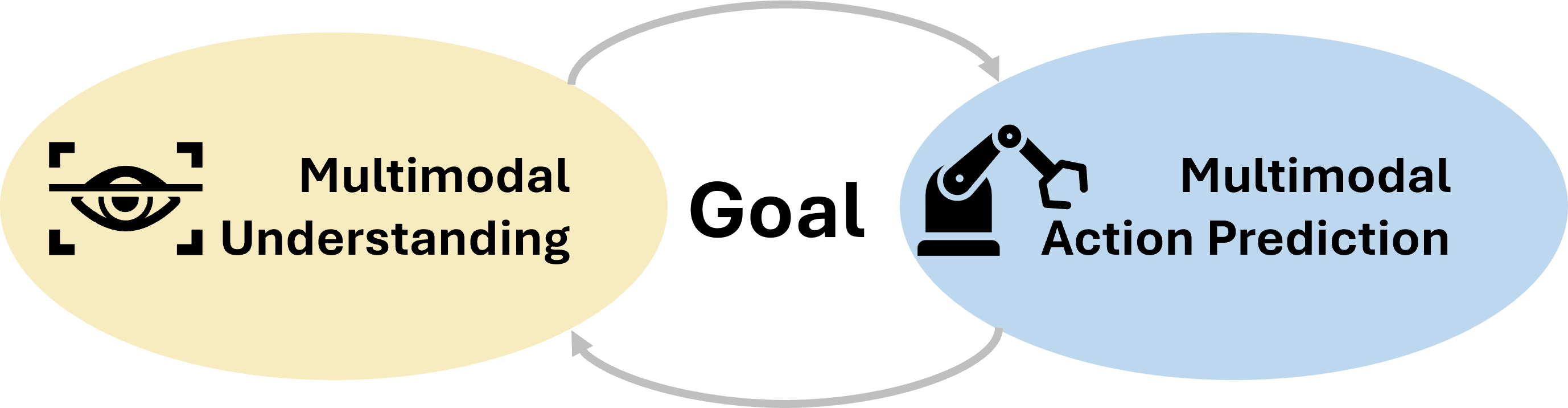

assets/images/tom_fig.png

0 → 100644

9.01 MB

533 KB

File added

assets/videos/ui_mobile.mp4

0 → 100644

File added

data/__init__.py

0 → 100644

File added