v1.0

LICENSE

0 → 100644

README.md

0 → 100644

README_origin.md

0 → 100644

app.py

0 → 100644

1.08 MB

assets/examples/man.jpg

0 → 100644

259 KB

assets/examples/man_pose.jpg

0 → 100644

865 KB

assets/examples/woman.jpg

0 → 100644

472 KB

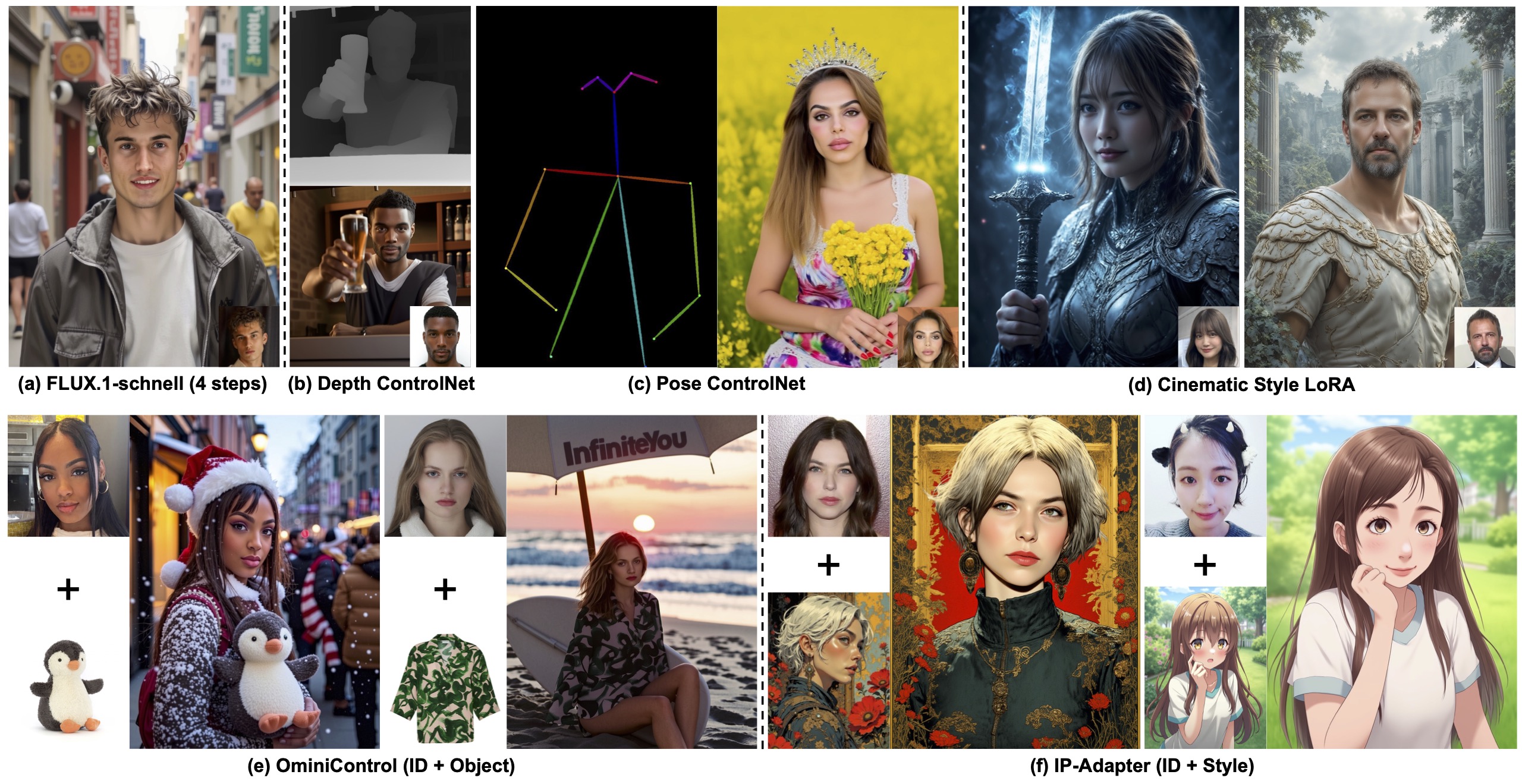

assets/plug_and_play.jpg

0 → 100644

813 KB

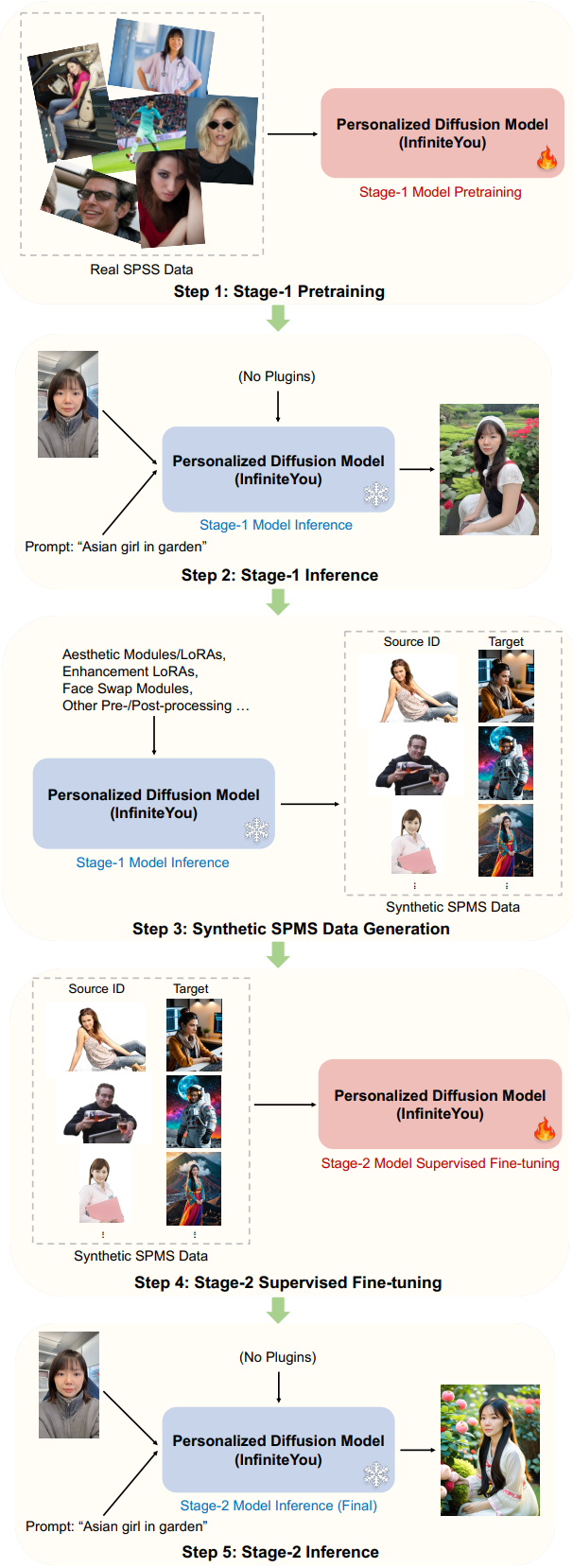

assets/teaser.jpg

0 → 100644

592 KB

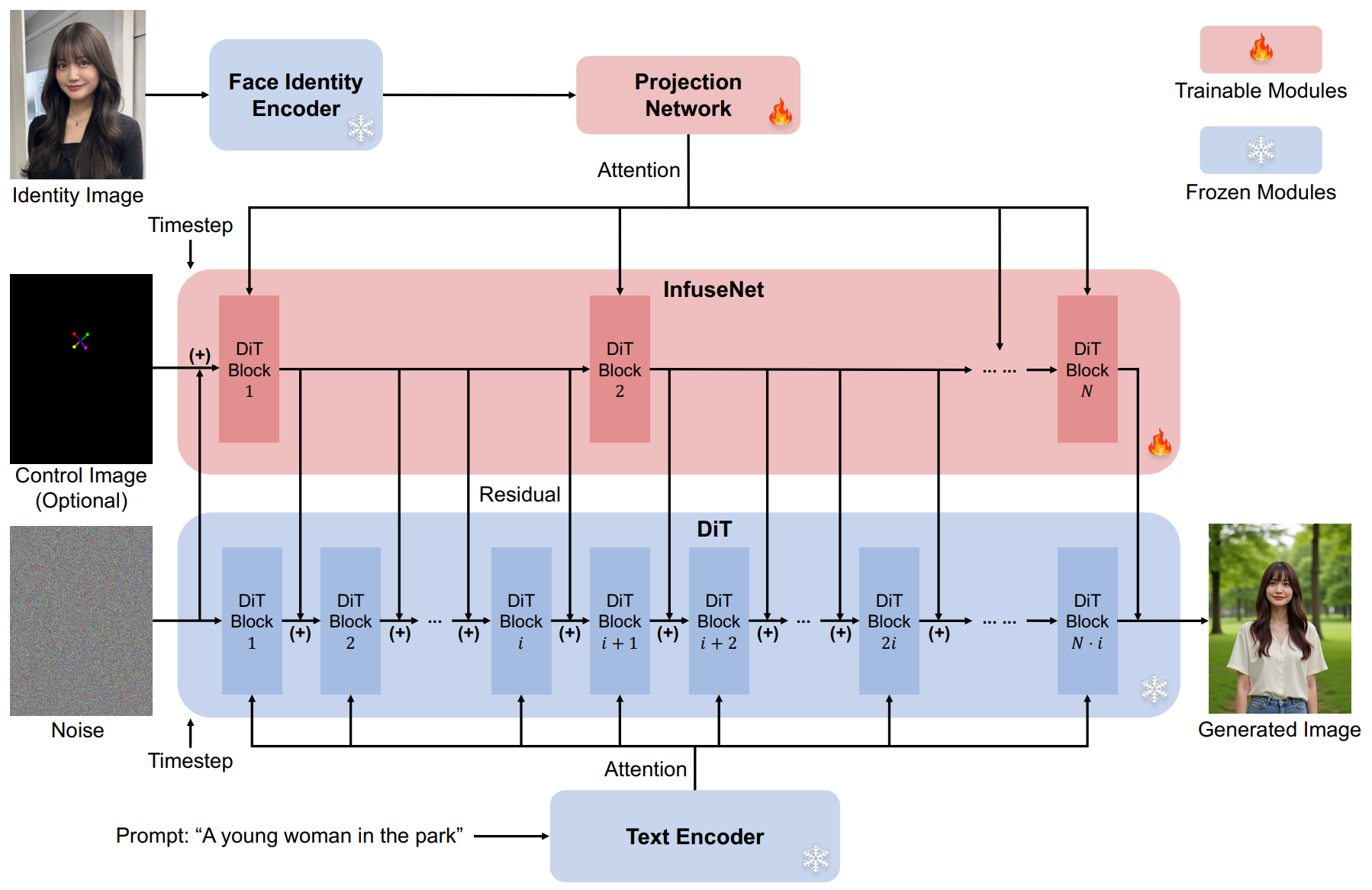

doc/algorithm.png

0 → 100644

439 KB

doc/input.png

0 → 100644

1.65 MB

doc/result.png

0 → 100644

850 KB

doc/structure.png

0 → 100644

405 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

icon.png

0 → 100644

68.4 KB

model.properties

0 → 100644