v1.0

Showing

code/REC/utils/enum_type.py

0 → 100644

code/REC/utils/logger.py

0 → 100644

code/REC/utils/utils.py

0 → 100644

code/main.py

0 → 100644

code/overall/ID.yaml

0 → 100644

code/overall/LLM_ddp.yaml

0 → 100644

code/pt_to_bin.sh

0 → 100644

code/run.py

0 → 100644

dataset/readme.md

0 → 100644

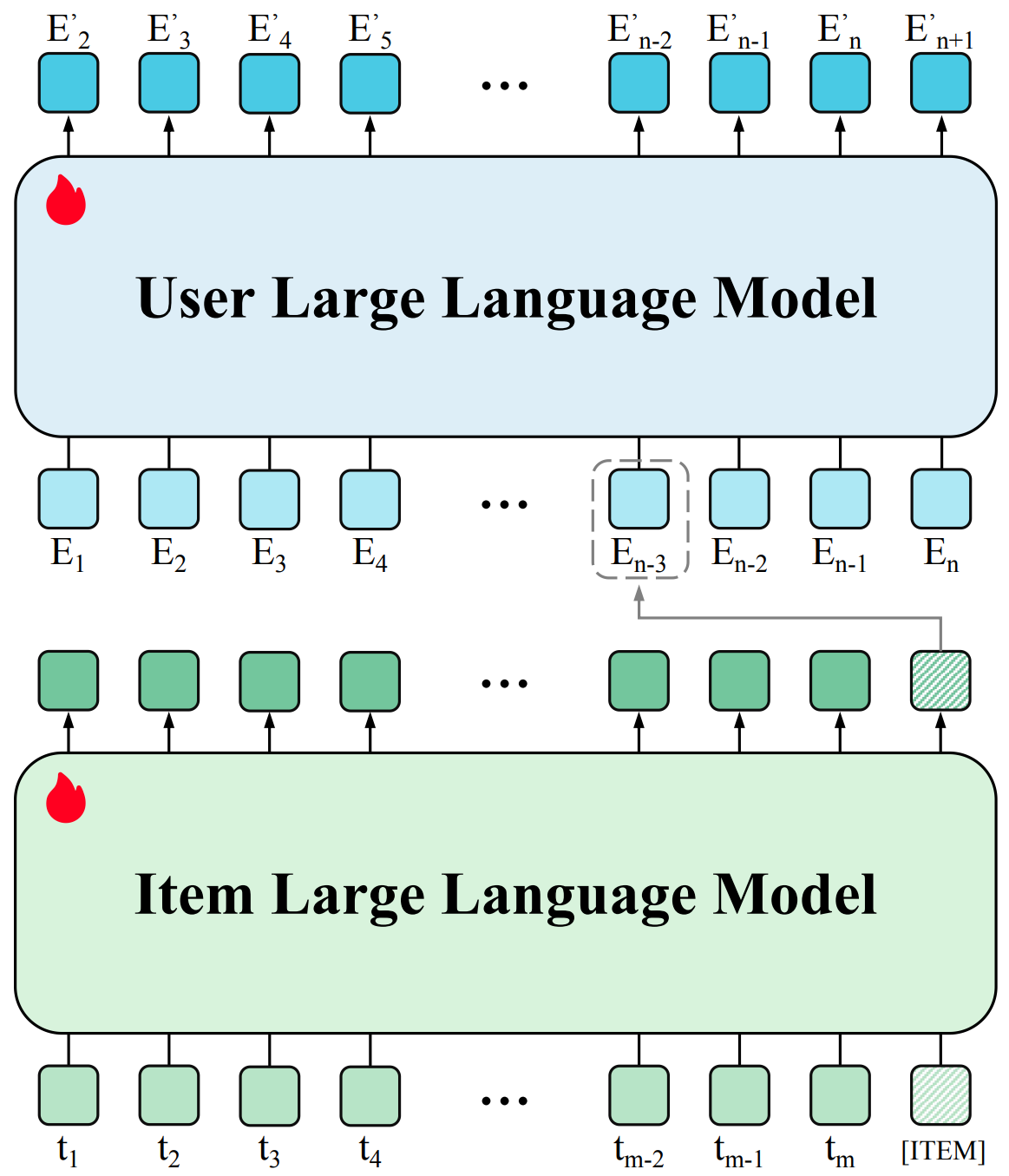

doc/algorithm.png

0 → 100644

94.9 KB

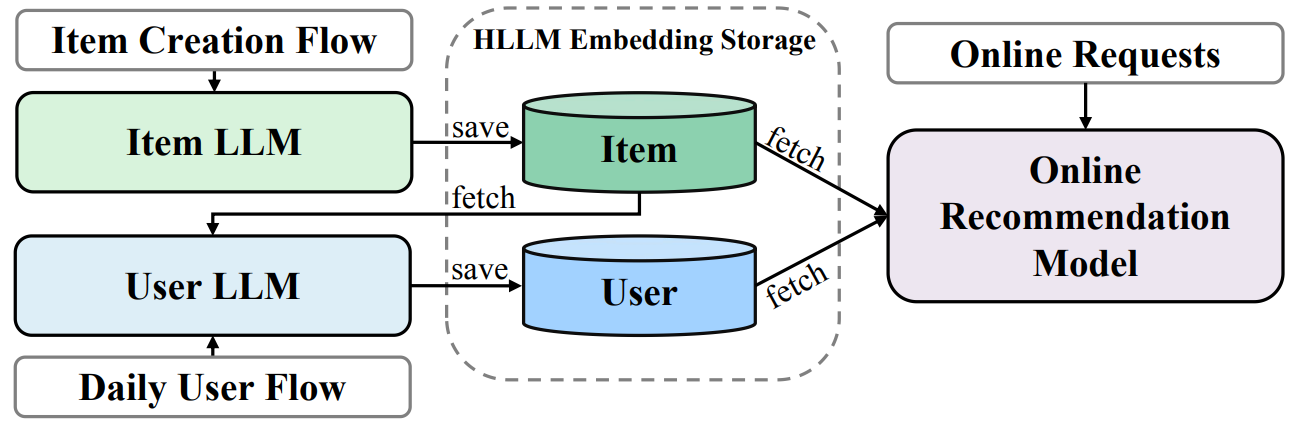

doc/structure.png

0 → 100644

119 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

infer.sh

0 → 100644