v1.0

parents

Showing

File added

README.md

0 → 100644

candy.JPG

0 → 100644

2.18 MB

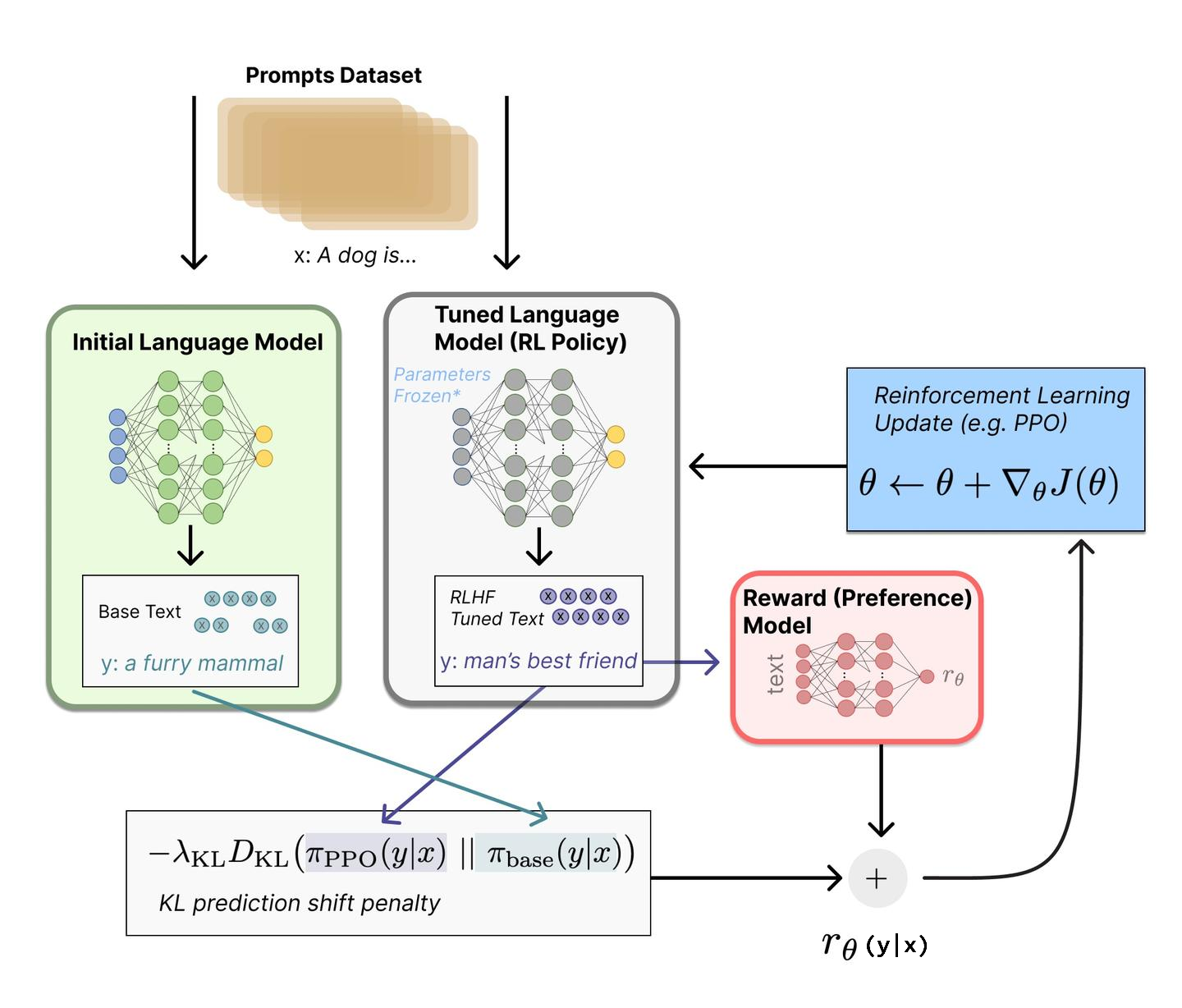

doc/RLHF.png

0 → 100644

639 KB

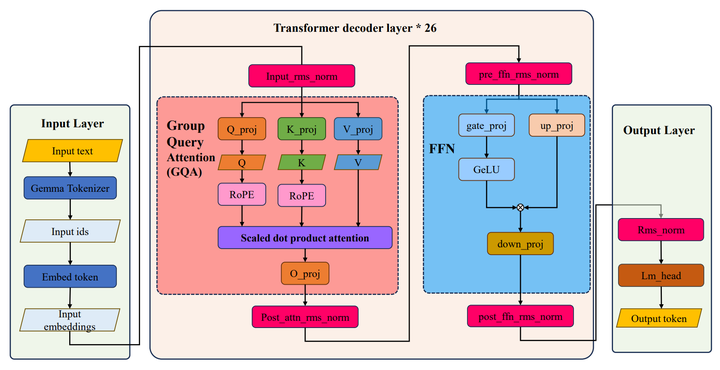

doc/gemma2.png

0 → 100644

110 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

This diff is collapsed.

icon.png

0 → 100644

53.8 KB

infer_transformers.py

0 → 100644

model.properties

0 → 100644