v1.0

Too many changes to show: to preserve performance, only 675 of 675+ files are displayed.

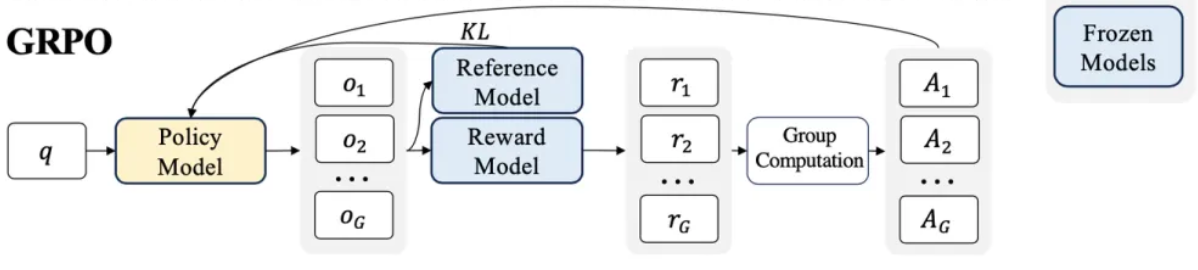

doc/GRPO.png (new file, mode 0 → 100644, 108 KB)

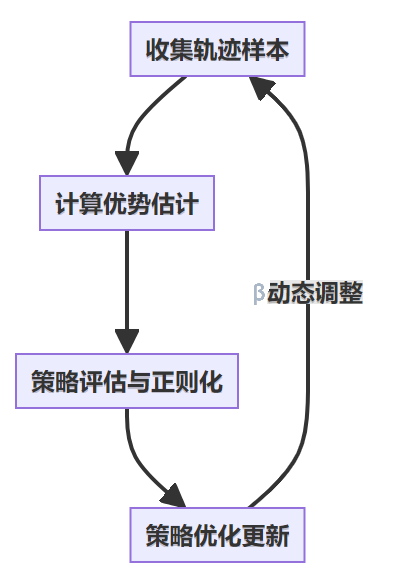

doc/GRPO_flow.png (new file, mode 0 → 100644, 36.1 KB)

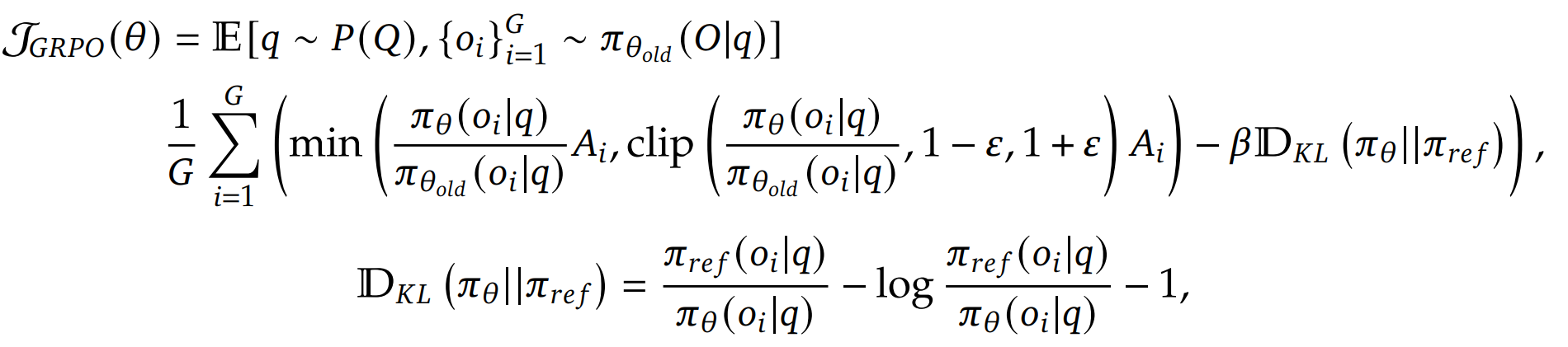

doc/algorithm.png (new file, mode 0 → 100644, 97.7 KB)

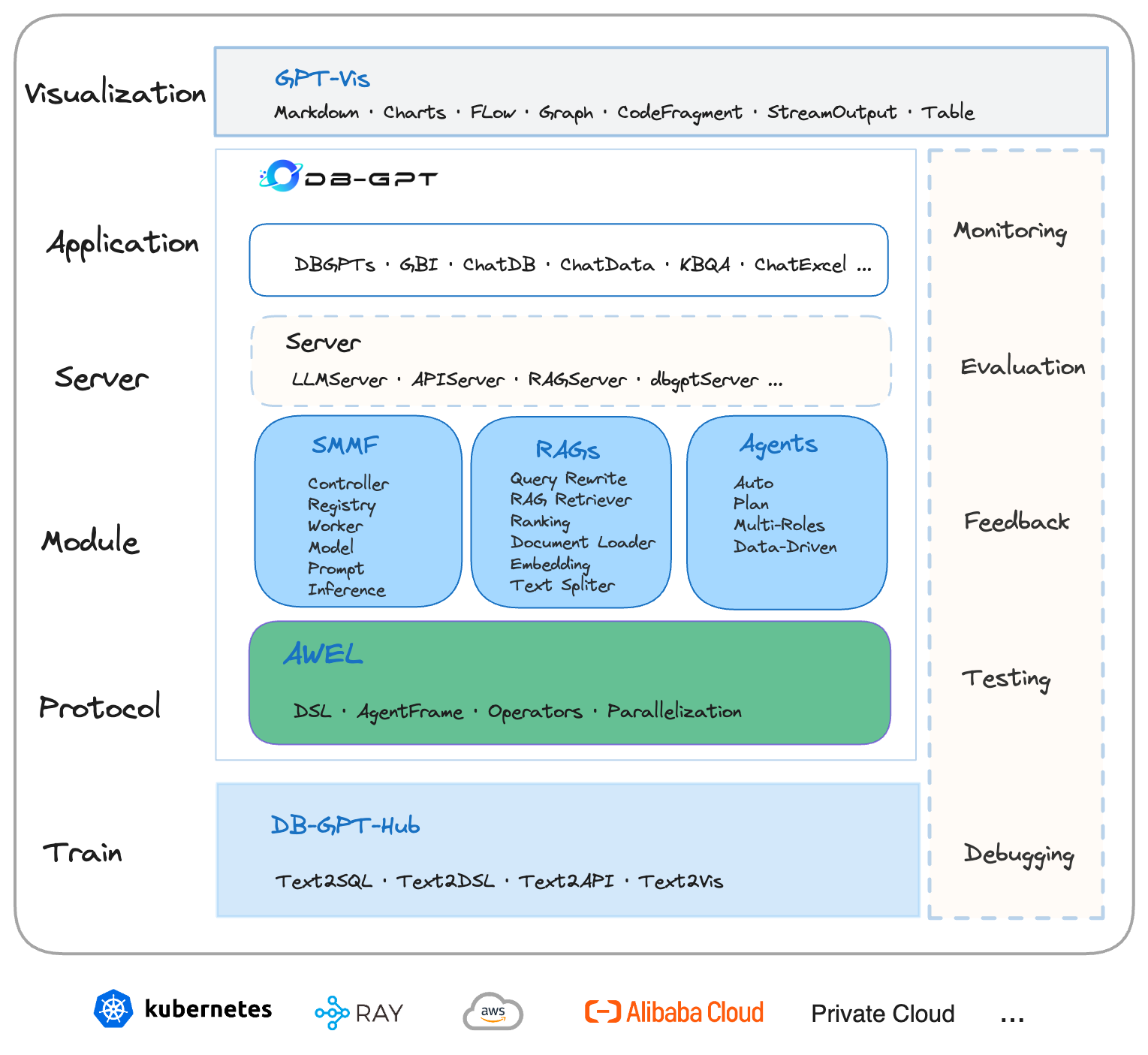

doc/dbgpt.png (new file, mode 0 → 100644, 244 KB)

doc/page1.png (new file, mode 0 → 100644, 190 KB)

doc/page2.png (new file, mode 0 → 100644, 146 KB)

doc/page3.png (new file, mode 0 → 100644, 545 KB)

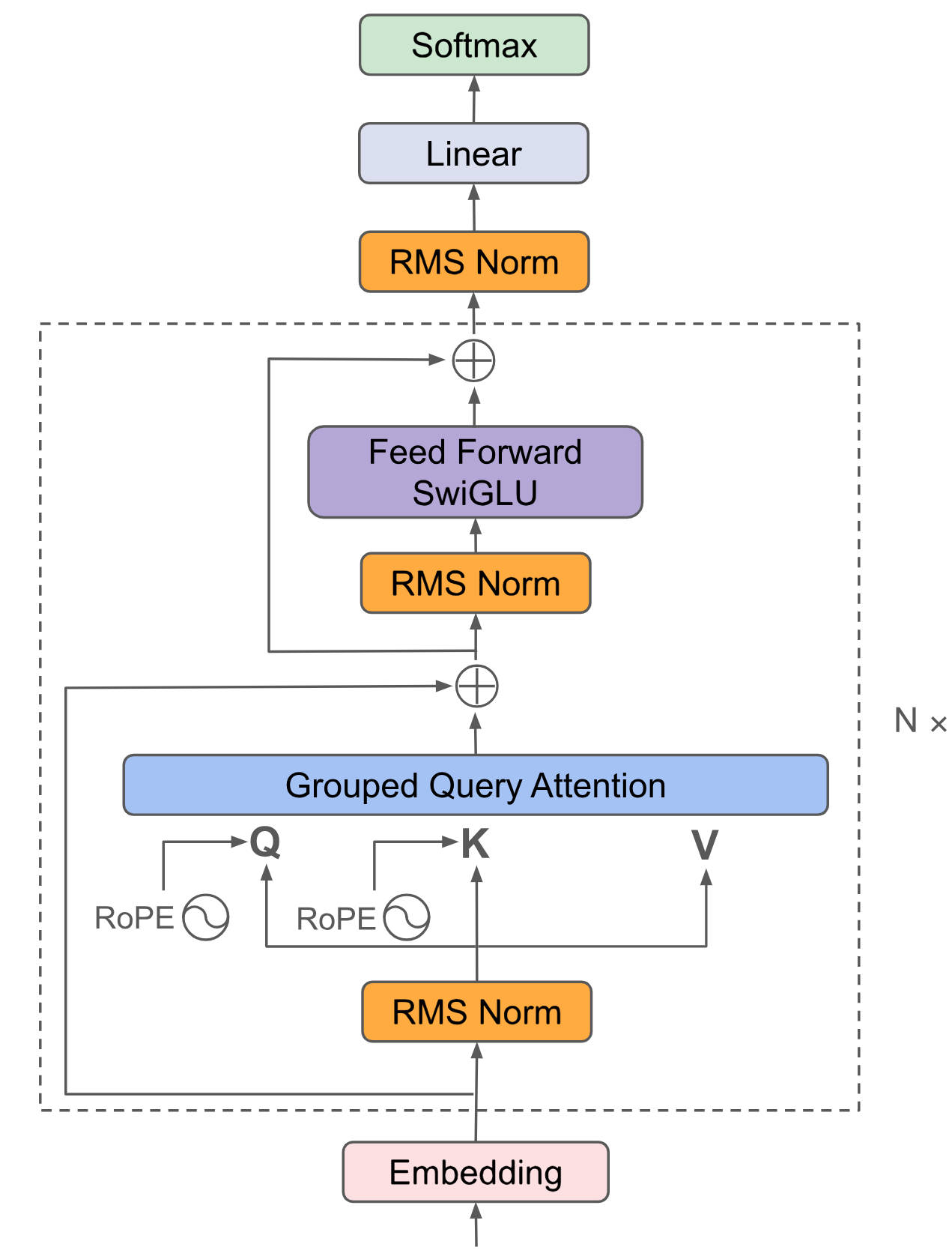

doc/qwen.png (new file, mode 0 → 100644, 356 KB)

docker-compose.yml (new file, mode 0 → 100644)

docker/Dockerfile (new file, mode 0 → 100644)