# Environment setup

1. Create the container and install the base dependencies

```bash
docker run --name=libo_mlperf_test --hostname=node72 --user=root \
  --volume=/mnt/fs/user/llama:/mnt/fs/user/llama \
  --volume=/root/.ssh:/root/.ssh \
  --volume=/opt/hyhal:/opt/hyhal:ro \
  --cap-add=SYS_PTRACE --shm-size 80g --network=host --privileged \
  --device /dev/kfd:/dev/kfd \
  --device /sys/kernel/debug/dri:/sys/kernel/debug/dri \
  --device /dev/dri:/dev/dri \
  --runtime=runc --detach=true -t b8d662003405 /bin/bash

docker exec -it libo_mlperf_test bash

# Inside the container: install the MLPerf logging package
cd /mnt/fs/user/llama/custom_model/libo_test/mlperf_test
git clone https://github.com/mlperf/logging.git mlperf-logging
cd mlperf-logging/
pip install -e .

# Return to mlperf_test so the Transformer code is not cloned inside mlperf-logging.
# Later steps use the path mlperf_test/transformer/...; adjust them if your checkout directory differs.
cd ..
git clone http://10.6.10.68/libo11/mlperf_transformer_v0.7.git
```

2. Download and preprocess the dataset

Enter the implementation directory and edit `run_preprocessing.sh`:

```bash
cd /mnt/fs/user/llama/custom_model/libo_test/mlperf_test/transformer/implementations/pytorch
vim run_preprocessing.sh
```

Replace its contents with the following:

```bash
#!/bin/bash
# Run from the implementation directory: the relative ./ paths below depend on it.
set -e

SEED=$1

mkdir -p /workspace/translation/examples/translation/wmt14_en_de
mkdir -p /workspace/translation/examples/translation/wmt14_en_de/utf8

# TODO: Add SEED to process_data.py since this uses a random generator (future PR)
#export PYTHONPATH=/research/transformer/transformer:${PYTHONPATH}
# Add compliance to PYTHONPATH
# export PYTHONPATH=/mlperf/training/compliance:${PYTHONPATH}

cp ./reference_dictionary.ende.txt /workspace/translation/
cd /workspace/translation
cp /workspace/translation/reference_dictionary.ende.txt /workspace/translation/examples/translation/wmt14_en_de/dict.en.txt
cp /workspace/translation/reference_dictionary.ende.txt /workspace/translation/examples/translation/wmt14_en_de/dict.de.txt
sed -i "1s/^/\'\'\n/" /workspace/translation/examples/translation/wmt14_en_de/dict.en.txt
sed -i "1s/^/\'\'\n/" /workspace/translation/examples/translation/wmt14_en_de/dict.de.txt

# TODO: make code consistent to not look in two places (allows temporary hack above for preprocessing-vs-training)
cp /workspace/translation/reference_dictionary.ende.txt /workspace/translation/examples/translation/wmt14_en_de/utf8/dict.en.txt
cp /workspace/translation/reference_dictionary.ende.txt /workspace/translation/examples/translation/wmt14_en_de/utf8/dict.de.txt

# Download step from the original script, disabled here; the local copies below are used instead.
#wget https://raw.githubusercontent.com/tensorflow/models/master/official/transformer/test_data/newstest2014.en -O /workspace/translation/examples/translation/wmt14_en_de/newstest2014.en
#wget https://raw.githubusercontent.com/tensorflow/models/master/official/transformer/test_data/newstest2014.de -O /workspace/translation/examples/translation/wmt14_en_de/newstest2014.de

# Copy newstest2014 from the implementation directory
cd /mnt/fs/user/llama/custom_model/libo_test/mlperf_test/transformer/implementations/pytorch
cp ./newstest2014.en /workspace/translation
cp ./newstest2014.de /workspace/translation
cp /workspace/translation/newstest2014.en /workspace/translation/examples/translation/wmt14_en_de/newstest2014.en
cp /workspace/translation/newstest2014.de /workspace/translation/examples/translation/wmt14_en_de/newstest2014.de

python3 preprocess.py --raw_dir /raw_data/ --data_dir /workspace/translation/examples/translation/wmt14_en_de
```
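The script above copies `reference_dictionary.ende.txt`, `newstest2014.en` and `newstest2014.de` from the implementation directory and runs `preprocess.py --raw_dir /raw_data/`, so with `set -e` it stops part-way if any of them is missing. A minimal pre-flight sketch follows; `preflight_preprocessing` is a hypothetical helper, not part of the repository, and it only checks that these files and the raw-data directory exist, not what they contain.

```bash
# Hypothetical pre-flight check for the modified run_preprocessing.sh.
# Usage: preflight_preprocessing <pytorch_impl_dir> <raw_data_dir>
# Prints each missing item and returns non-zero if anything is missing.
preflight_preprocessing() {
  local impl_dir="$1" raw_dir="$2" missing=0 f
  # Files the script copies from, or runs in, the implementation directory.
  for f in reference_dictionary.ende.txt newstest2014.en newstest2014.de preprocess.py; do
    if [ ! -f "$impl_dir/$f" ]; then
      echo "missing: $impl_dir/$f"
      missing=$((missing + 1))
    fi
  done
  # Directory passed to preprocess.py via --raw_dir.
  if [ ! -d "$raw_dir" ]; then
    echo "missing directory: $raw_dir"
    missing=$((missing + 1))
  fi
  [ "$missing" -eq 0 ]
}
```

For example, from the implementation directory: `preflight_preprocessing . /raw_data && bash run_preprocessing.sh`.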

Run the data processing:

```bash
chmod +x run_preprocessing.sh run_conversion.sh
bash run_preprocessing.sh
bash run_conversion.sh  # this step is very slow
```

When it finishes, several `.bin` files are generated in `/workspace/translation/examples/translation/wmt14_en_de/utf8`. Adjust the dataset path as needed.
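A quick way to confirm the conversion produced output is to list the `.bin` files in the `utf8` directory. The sketch below uses a hypothetical helper, `check_bin_files`; it does not compare file names or sizes against expected values, since those are not documented here.

```bash
# Hypothetical helper: list the .bin files directly inside a dataset directory
# and return non-zero if there are none.
# Usage: check_bin_files <dataset_dir>
check_bin_files() {
  local dir="$1" count
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  # -maxdepth 1: only files directly in the directory, not in subdirectories.
  find "$dir" -maxdepth 1 -type f -name '*.bin' -exec ls -lh {} +
  count=$(find "$dir" -maxdepth 1 -type f -name '*.bin' | wc -l)
  count=$((count))  # strip any whitespace padding from wc
  echo "found $count .bin file(s) in $dir"
  [ "$count" -gt 0 ]
}
```

Usage: `check_bin_files /workspace/translation/examples/translation/wmt14_en_de/utf8`.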

# Training

1. Set up apex

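The project targets an older apex release. Instead of rebuilding apex, copy the `optimizers` package from the old apex into the environment and replace the installed one:

```bash
cd /mnt/fs/user/llama/custom_model/libo_test/mlperf_test/transformer
cp ./optimizers.zip /usr/local/lib/python3.10/site-packages/apex/contrib/
cd /usr/local/lib/python3.10/site-packages/apex/contrib
rm -rf optimizers    # remove the installed optimizers package
unzip optimizers.zip # unpack the old version in its place
```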
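`rm -rf optimizers` discards the installed package, which makes it hard to roll back. The following is a sketch of the same replacement that keeps a timestamped backup instead. `replace_optimizers` is a hypothetical helper; it assumes `optimizers.zip` unpacks to a top-level `optimizers/` directory (as the `unzip` step above implies) and uses Python's built-in `python3 -m zipfile -e` in place of `unzip`.

```bash
# Hypothetical helper: replace <apex_contrib_dir>/optimizers with the copy in
# optimizers.zip, moving the old package to a timestamped backup directory.
# Assumes the archive contains a top-level "optimizers/" directory.
# Usage: replace_optimizers <optimizers.zip> <apex_contrib_dir>
replace_optimizers() {
  local zip="$1" contrib="$2" backup
  [ -f "$zip" ] || { echo "zip not found: $zip" >&2; return 1; }
  [ -d "$contrib" ] || { echo "not a directory: $contrib" >&2; return 1; }
  if [ -d "$contrib/optimizers" ]; then
    backup="$contrib/optimizers.bak.$(date +%Y%m%d%H%M%S)"
    mv "$contrib/optimizers" "$backup"
    echo "backed up old package to $backup"
  fi
  # Extract with Python's zipfile CLI (no dependency on the unzip binary).
  python3 -m zipfile -e "$zip" "$contrib"
  [ -d "$contrib/optimizers" ]
}
```

Usage: `replace_optimizers /mnt/fs/user/llama/custom_model/libo_test/mlperf_test/transformer/optimizers.zip /usr/local/lib/python3.10/site-packages/apex/contrib`. To roll back, delete the new `optimizers` directory and rename the backup to `optimizers`.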

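2. Training commands

```bash
# Run from the implementation directory (the previous step left you in apex/contrib)
cd /mnt/fs/user/llama/custom_model/libo_test/mlperf_test/transformer/implementations/pytorch
source config_DGX1.sh
bash ./run_and_time.sh
```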
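To keep a record of the run, the output can be captured with `tee`, e.g. `bash ./run_and_time.sh 2>&1 | tee train.log`. Assuming the run emits mlperf-logging lines of the form `:::MLLOG {...}` (the JSON format written by the `mlperf-logging` package installed earlier), the hypothetical helpers below extract the evaluation accuracies and the status of the final `run_stop` event. They match the default `"key": "..."` spacing of Python's `json.dumps`, so treat them as a convenience, not a log validator.

```bash
# Hypothetical helpers, assuming log lines such as:
#   :::MLLOG {"namespace": "", "time_ms": 1, "event_type": "POINT_IN_TIME", "key": "eval_accuracy", "value": 0.25, ...}

# Print one eval_accuracy value per line, in log order.
# Usage: eval_accuracies <logfile>
eval_accuracies() {
  grep -o ':::MLLOG {.*' "$1" \
    | grep '"key": "eval_accuracy"' \
    | sed -n 's/.*"value": \([^,}]*\).*/\1/p'
}

# Print the status recorded in the run_stop event (e.g. "success"),
# or nothing if the run has not finished.
# Usage: run_status <logfile>
run_status() {
  grep -o ':::MLLOG {.*' "$1" \
    | grep '"key": "run_stop"' \
    | sed -n 's/.*"status": "\([^"]*\)".*/\1/p'
}
```

Example: `eval_accuracies train.log` and `run_status train.log`. Note that piping through `tee` hides the exit code of `run_and_time.sh`.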

# Screenshots

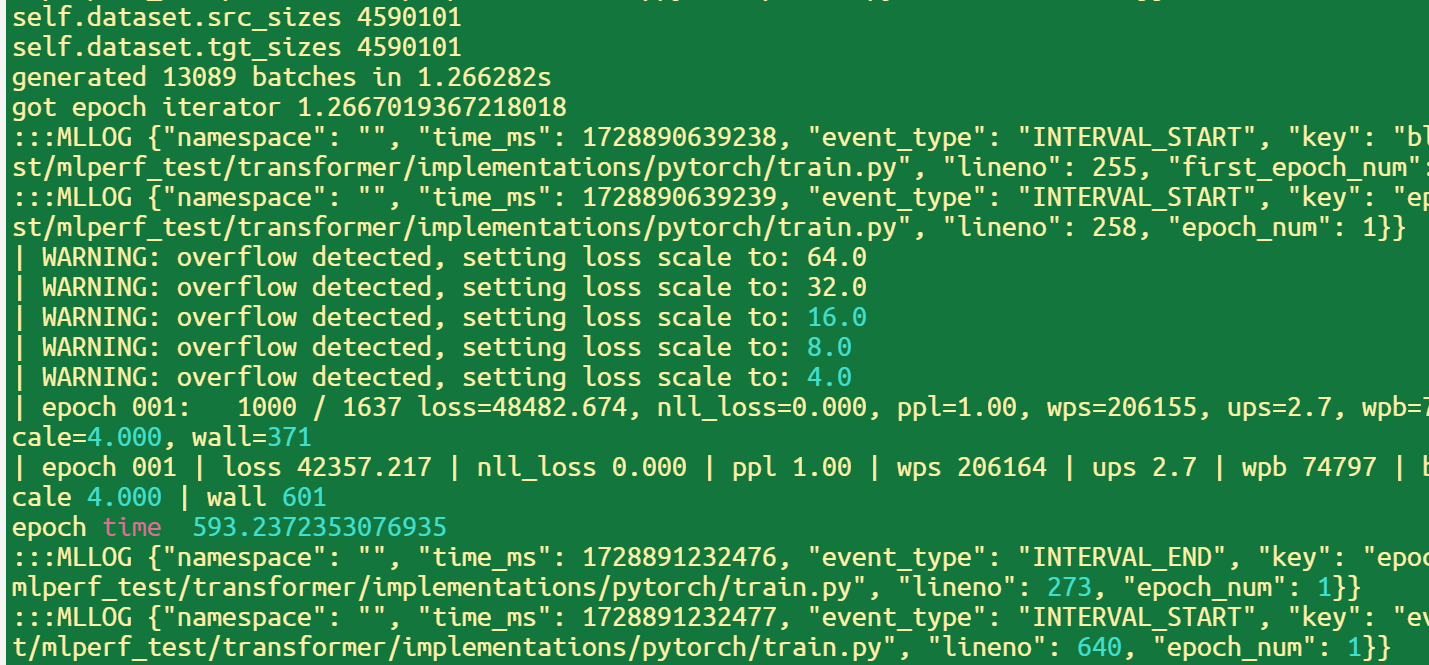

Training

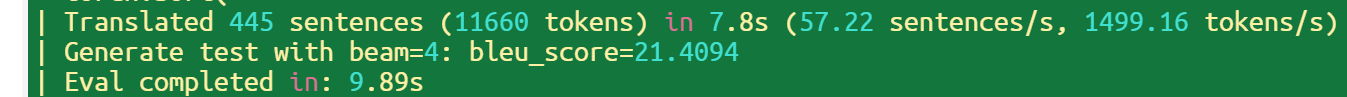

Validation

# Detailed troubleshooting and configuration notes

https://r0ddbu55vzx.feishu.cn/wiki/ETtMwanKhi77M8kZHmhcdD6Anqc

https://r0ddbu55vzx.feishu.cn/wiki/COKQwN0qci5UrpkMdsMckn7In7d