Initial commit

parents

Showing

codes/tests/test_models.py

0 → 100644

codes/tests/test_ops.py

0 → 100644

codes/tests/test_train.py

0 → 100644

codes/tests/test_waseda.py

0 → 100644

codes/tests/test_zoo.py

0 → 100644

codes/utils/__init__.py

0 → 100644

codes/utils/util.py

0 → 100644

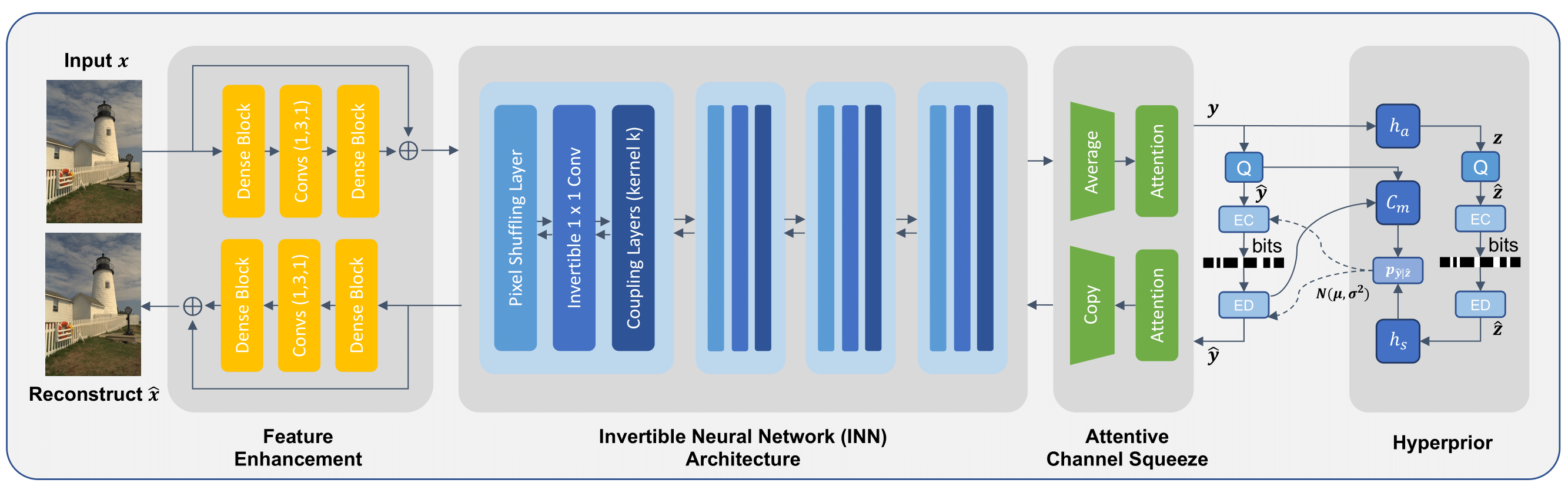

figures/overview.png

0 → 100644

290 KB

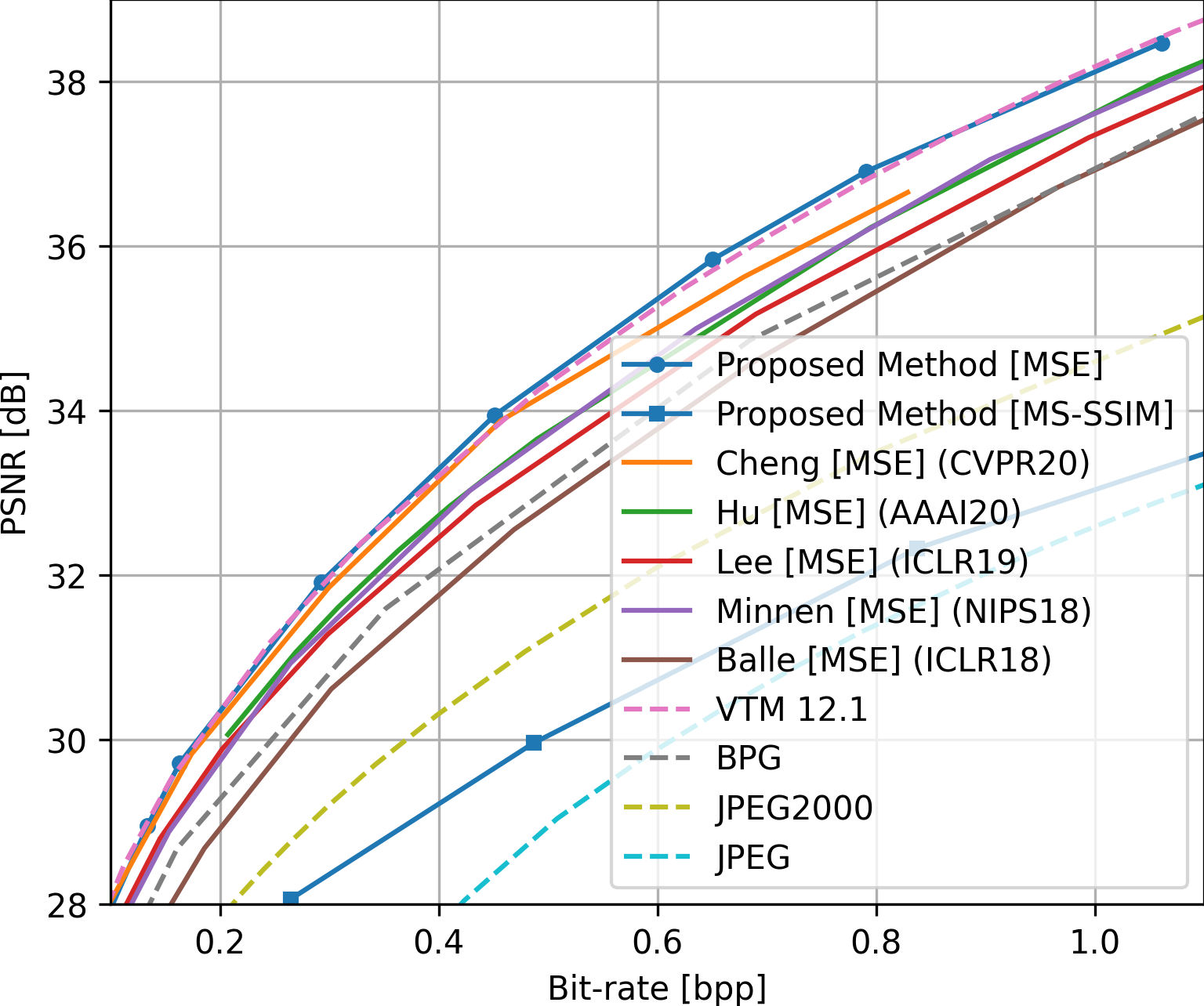

figures/result.png

0 → 100644

323 KB

results/clic_mse.json

0 → 100644

results/kodak_mse.json

0 → 100644

results/tecnick_mse.json

0 → 100644