v1.0

Showing

Too many changes to show.

To preserve performance only 508 of 508+ files are displayed.

examples/MMPT/setup.py

0 → 100644

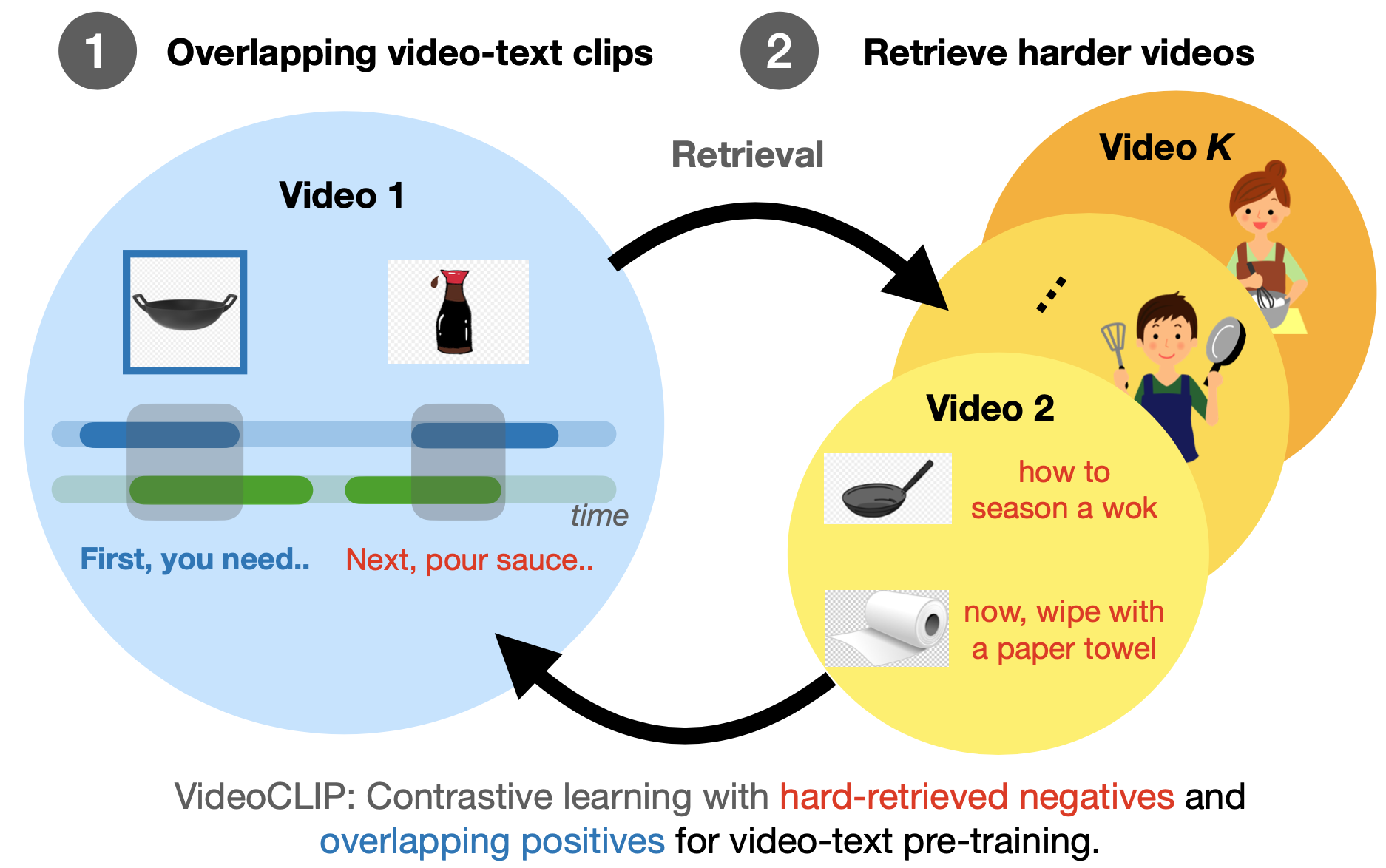

examples/MMPT/videoclip.png

0 → 100644

377 KB

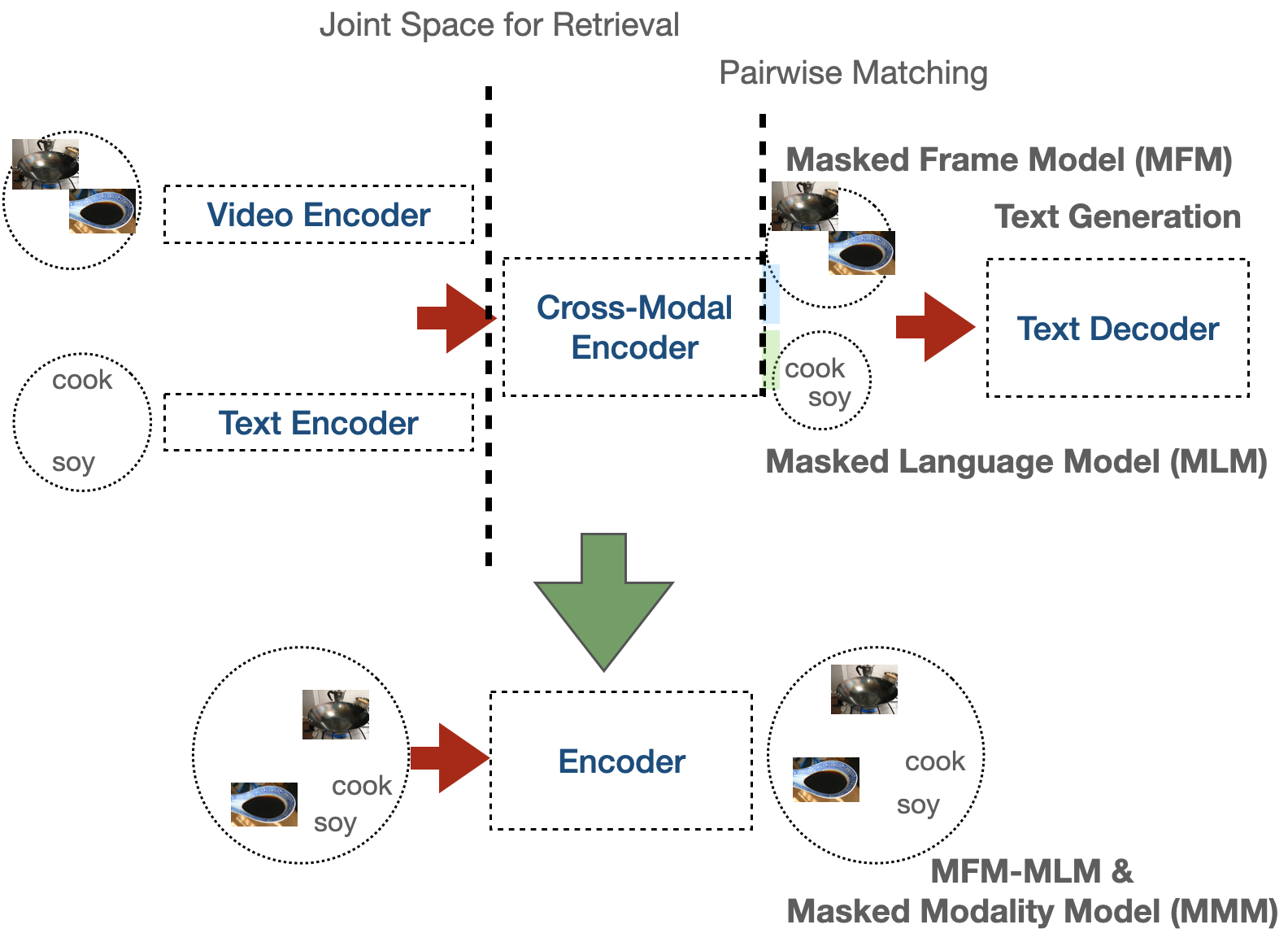

examples/MMPT/vlm.png

0 → 100644

409 KB

examples/__init__.py

0 → 100644