
libs/utils/checkpoint.py (new file, mode 100644)

libs/utils/comm.py (new file, mode 100644)

libs/utils/counter.py (new file, mode 100644)

libs/utils/logger.py (new file, mode 100644)

libs/utils/metric.py (new file, mode 100644)

libs/utils/time_counter.py (new file, mode 100644)

libs/utils/utils.py (new file, mode 100644)

libs/utils/vocab.py (new file, mode 100644)

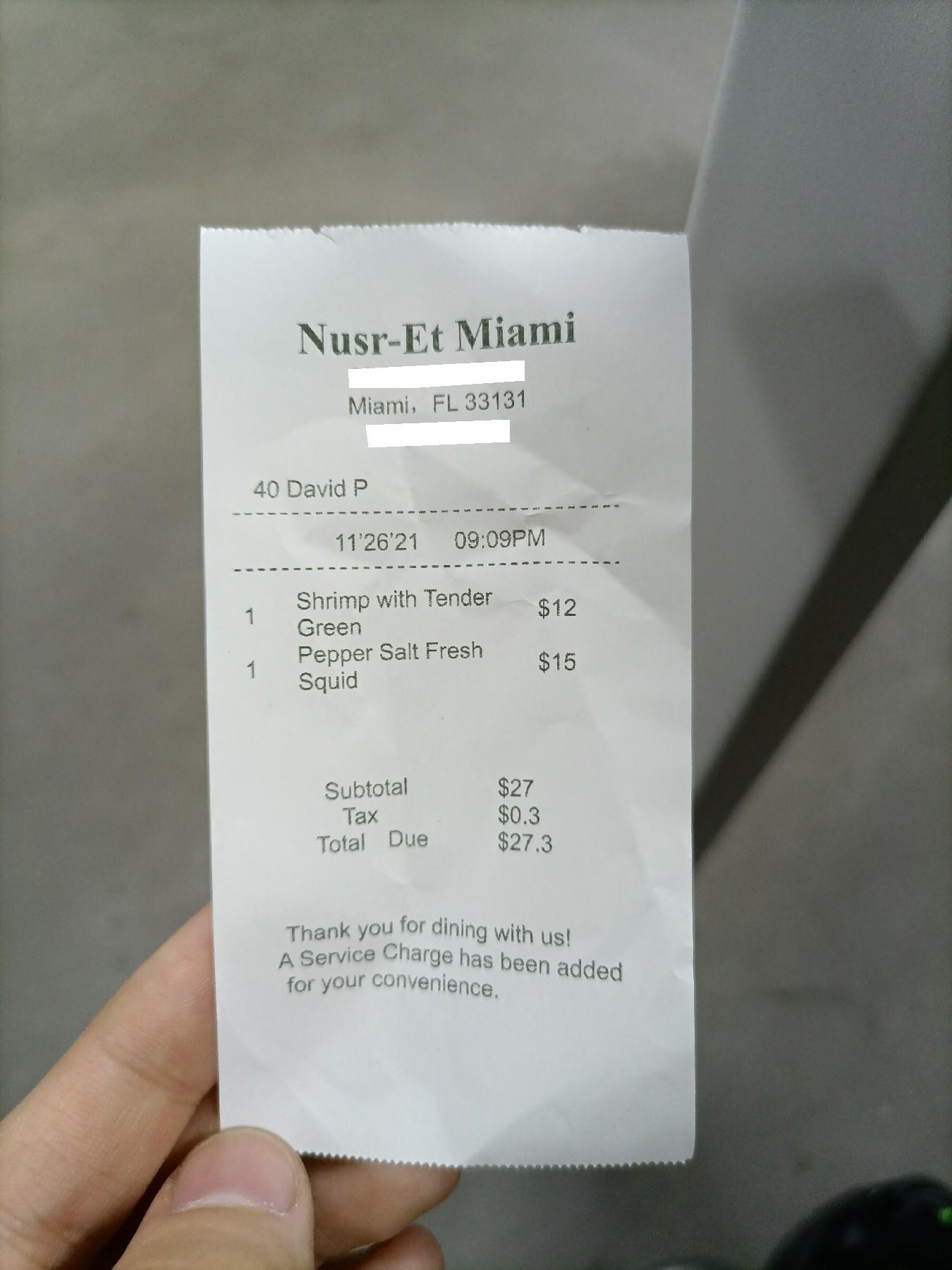

samples/001.jpg (new file, mode 100644, 131 KB)

samples/001.json (new file, mode 100644)