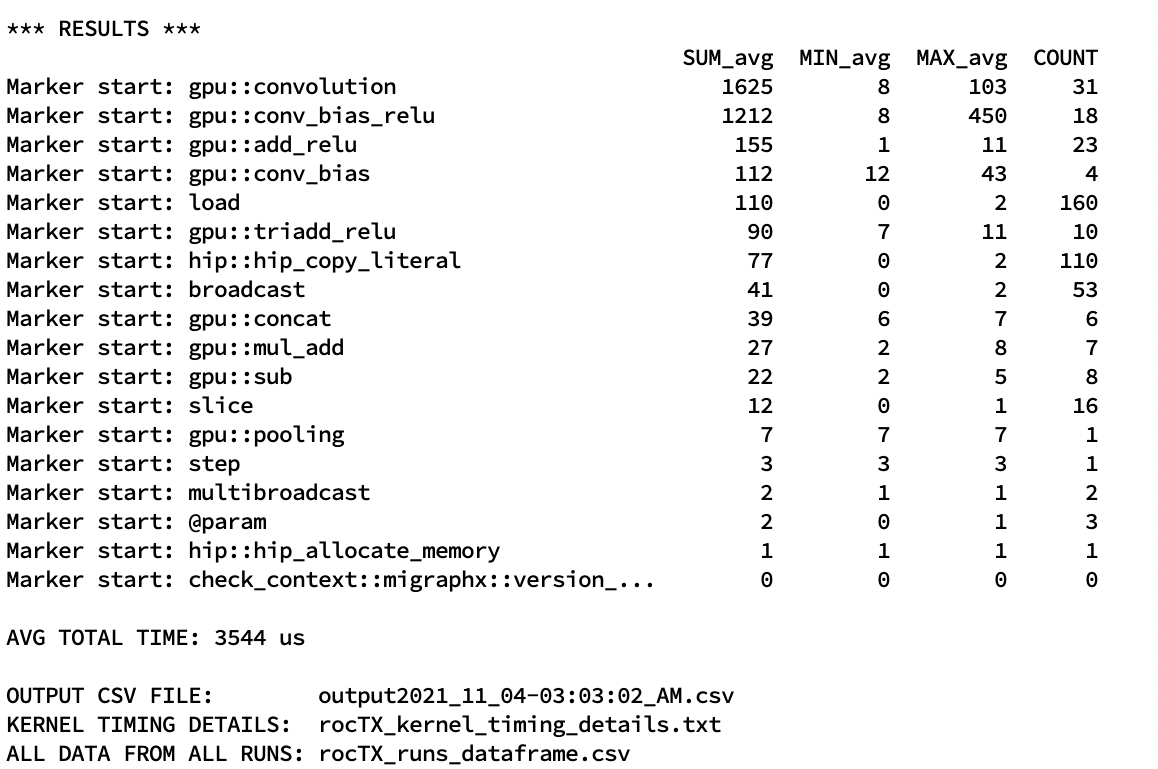

doc/src/dev/roctx1.jpg: new file (mode 0 → 100644), 396 KB

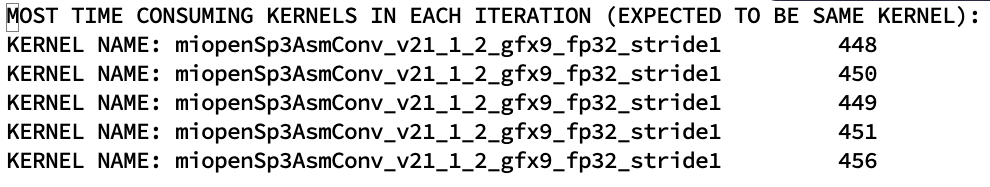

doc/src/dev/roctx2.jpg: new file (mode 0 → 100644), 123 KB

doc/src/dev/tools.rst: new file (mode 0 → 100644)

doc/src/dev_intro.rst: new file (mode 0 → 100644)

doc/src/overview.rst: deleted (mode 100644 → 0)

examples/migraphx/README.md: new file (mode 0 → 100644)

File moved

File moved

File moved

File moved

File moved

File moved

File moved