[CK_TILE]Moe update index (#1672)

* update MOCK_ID for moe-sorting

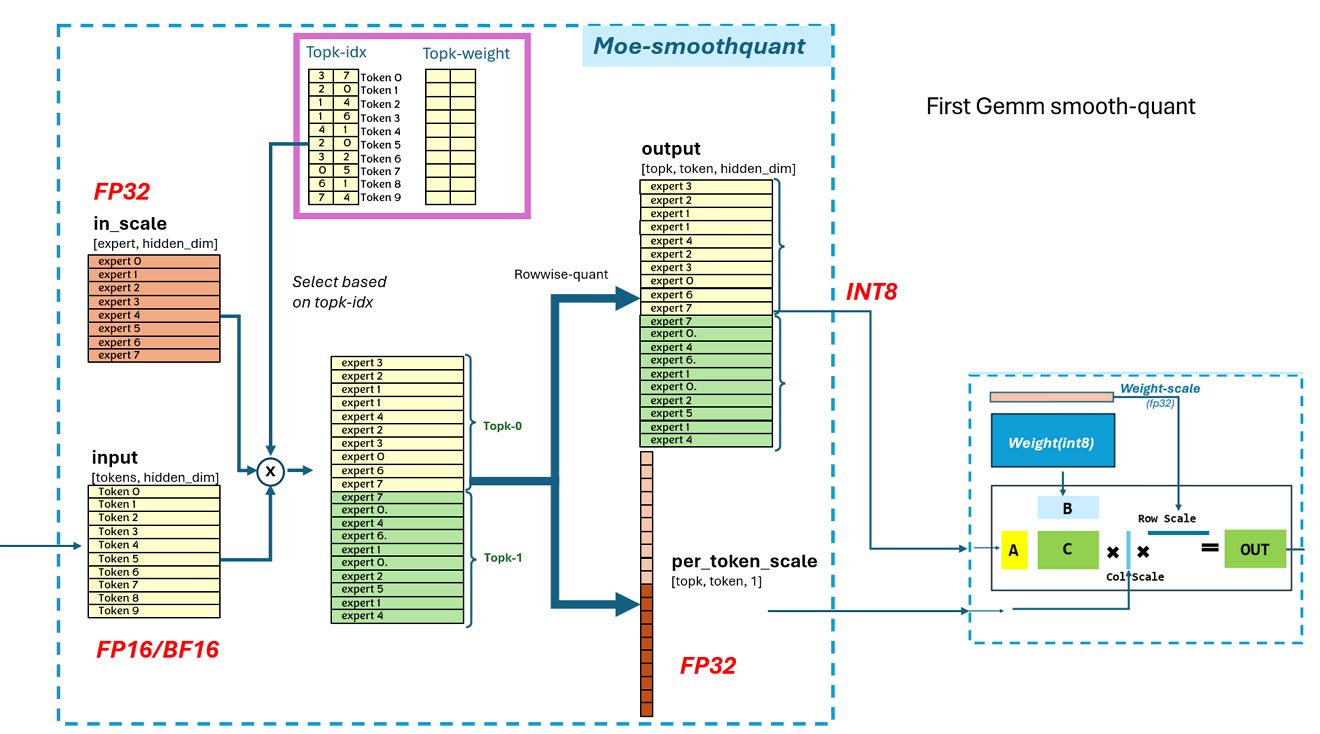

* add moe-smoothquant

* update a comment

* fix format

* hot fix

* update topk in overflow case

* update comments

* update bf16 cvt

---------

Co-authored-by:  valarLip <340077269@qq.com>

valarLip <340077269@qq.com>

Showing

202 KB