*This model was released on 2022-03-23 and added to Hugging Face Transformers on 2022-08-04.*

# VideoMAE

## Overview

The VideoMAE model was proposed in [VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training](https://huggingface.co/papers/2203.12602) by Zhan Tong, Yibing Song, Jue Wang, and Limin Wang.

VideoMAE extends masked autoencoders ([MAE](vit_mae)) to video, claiming state-of-the-art performance on several video classification benchmarks.

The abstract from the paper is the following:

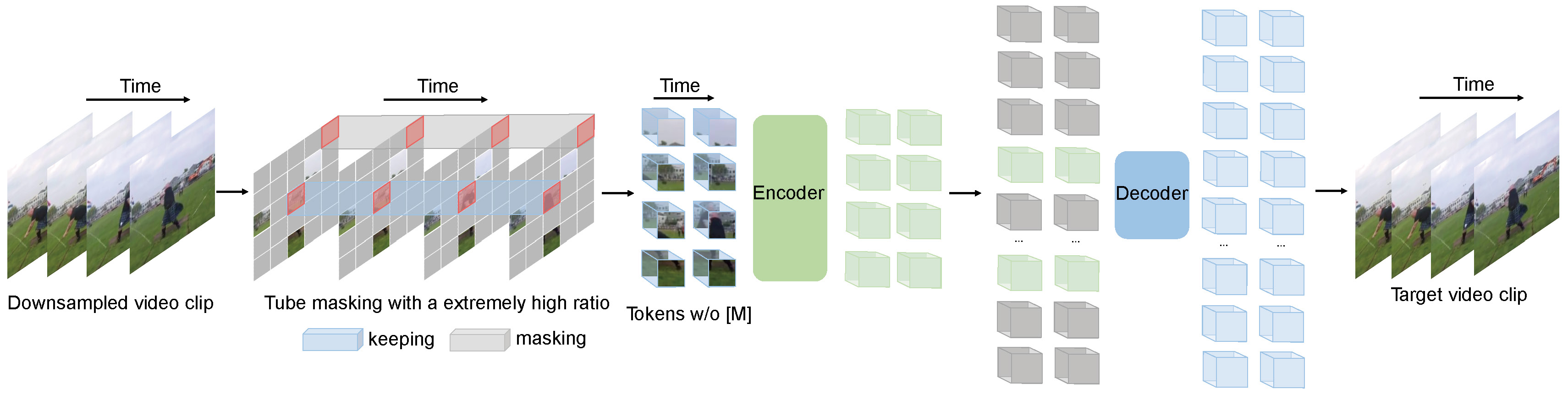

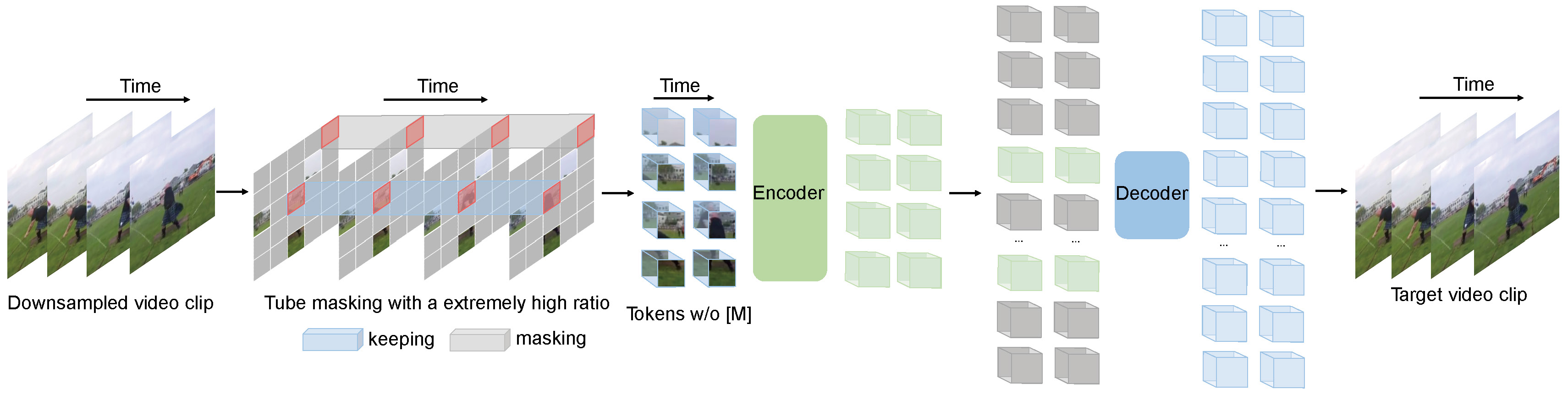

*Pre-training video transformers on extra large-scale datasets is generally required to achieve premier performance on relatively small datasets. In this paper, we show that video masked autoencoders (VideoMAE) are data-efficient learners for self-supervised video pre-training (SSVP). We are inspired by the recent ImageMAE and propose customized video tube masking and reconstruction. These simple designs turn out to be effective for overcoming information leakage caused by the temporal correlation during video reconstruction. We obtain three important findings on SSVP: (1) An extremely high proportion of masking ratio (i.e., 90% to 95%) still yields favorable performance of VideoMAE. The temporally redundant video content enables higher masking ratio than that of images. (2) VideoMAE achieves impressive results on very small datasets (i.e., around 3k-4k videos) without using any extra data. This is partially ascribed to the challenging task of video reconstruction to enforce high-level structure learning. (3) VideoMAE shows that data quality is more important than data quantity for SSVP. Domain shift between pre-training and target datasets are important issues in SSVP. Notably, our VideoMAE with the vanilla ViT backbone can achieve 83.9% on Kinetics-400, 75.3% on Something-Something V2, 90.8% on UCF101, and 61.1% on HMDB51 without using any extra data.*

VideoMAE pre-training. Taken from the original paper.

This model was contributed by [nielsr](https://huggingface.co/nielsr).

The original code can be found [here](https://github.com/MCG-NJU/VideoMAE).
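The tube masking mentioned in the abstract can be illustrated with a minimal sketch in plain Python. This is not the original implementation: the helper `tube_mask` is hypothetical, and the sizes used (16 frames grouped into tubelets of 2 frames, 224x224 frames split into 16x16 patches) are assumptions chosen for illustration. The idea is that one spatial mask is sampled and reused for every temporal slice, so a masked patch position stays masked for the whole clip.

```python
import random


def tube_mask(num_temporal, num_spatial, mask_ratio, seed=None):
    """Return a flat boolean mask of length num_temporal * num_spatial.

    A single spatial mask is sampled and repeated for every temporal slice,
    so a masked patch position stays masked along the whole clip (a "tube").
    """
    rng = random.Random(seed)
    num_masked = int(mask_ratio * num_spatial)
    masked_positions = set(rng.sample(range(num_spatial), num_masked))
    spatial_mask = [pos in masked_positions for pos in range(num_spatial)]
    # Repeat the same spatial mask for each temporal slice.
    return spatial_mask * num_temporal


# Illustrative sizes (assumed, not taken from this page).
num_temporal = 16 // 2          # frames / tubelet size
num_spatial = (224 // 16) ** 2  # patches per frame
mask = tube_mask(num_temporal, num_spatial, mask_ratio=0.9, seed=0)

print(len(mask))  # total number of tokens
print(sum(mask))  # number of masked tokens
```

Because the mask is identical across temporal slices, a model cannot recover a masked patch by copying it from a neighbouring frame, which is the information leakage the abstract refers to.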

## Using Scaled Dot Product Attention (SDPA)

PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function
encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the
[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)
or the [GPU Inference](https://huggingface.co/docs/transformers/main/en/perf_infer_gpu_one#pytorch-scaled-dot-product-attention)
page for more information.
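For reference, the quantity that scaled dot-product attention computes can be written as follows. The symbols are the conventional ones and are not defined elsewhere on this page: $Q$, $K$ and $V$ are the query, key and value matrices, and $d_k$ is the key dimension. The fused implementations differ in how they evaluate this expression, not in what it is.

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$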

SDPA is used by default for `torch>=2.1.1` when an implementation is available, but you may also set
`attn_implementation="sdpa"` in `from_pretrained()` to explicitly request SDPA to be used.

```py
import torch
from transformers import VideoMAEForVideoClassification

model = VideoMAEForVideoClassification.from_pretrained("MCG-NJU/videomae-base-finetuned-kinetics", attn_implementation="sdpa", dtype=torch.float16)
...
```

For the best speedups, we recommend loading the model in half-precision (e.g. `torch.float16` or `torch.bfloat16`).

On a local benchmark (A100-40GB, PyTorch 2.3.0, Ubuntu 22.04) with the `MCG-NJU/videomae-base-finetuned-kinetics` model in `float32`, we saw the following speedups during inference.

| Batch size | Average inference time (ms), eager mode | Average inference time (ms), SDPA mode | Speedup, eager time / SDPA time (x) |
|--------------|-------------------------------------------|-------------------------------------------|------------------------------|
| 1 | 37 | 10 | 3.7 |
| 2 | 24 | 18 | 1.33 |
| 4 | 43 | 32 | 1.34 |
| 8 | 84 | 60 | 1.4 |
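The speedup column is the eager-mode time divided by the SDPA time. As a small sanity check, the sketch below recomputes it from the timings in the table; the `speedup` helper is only illustrative.

```python
# Timings in milliseconds copied from the table: batch size -> (eager, sdpa).
timings_ms = {1: (37, 10), 2: (24, 18), 4: (43, 32), 8: (84, 60)}


def speedup(eager_ms, sdpa_ms):
    """Speedup of SDPA over eager mode: eager time divided by SDPA time."""
    return eager_ms / sdpa_ms


speedups = {bs: round(speedup(eager, sdpa), 2) for bs, (eager, sdpa) in timings_ms.items()}
print(speedups)  # {1: 3.7, 2: 1.33, 4: 1.34, 8: 1.4}
```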

## Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with VideoMAE. If
you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we'll
review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

**Video classification**

- [A notebook](https://github.com/huggingface/notebooks/blob/main/examples/video_classification.ipynb) that shows how
to fine-tune a VideoMAE model on a custom dataset.
- [Video classification task guide](../tasks/video_classification)
- [A 🤗 Space](https://huggingface.co/spaces/sayakpaul/video-classification-ucf101-subset) showing how to perform inference with a video classification model.

## VideoMAEConfig

[[autodoc]] VideoMAEConfig

## VideoMAEImageProcessor

[[autodoc]] VideoMAEImageProcessor
    - preprocess

## VideoMAEVideoProcessor

[[autodoc]] VideoMAEVideoProcessor
    - preprocess

## VideoMAEModel

[[autodoc]] VideoMAEModel
    - forward

## VideoMAEForPreTraining

`VideoMAEForPreTraining` includes the decoder on top for self-supervised pre-training.

[[autodoc]] transformers.VideoMAEForPreTraining
    - forward

## VideoMAEForVideoClassification

[[autodoc]] transformers.VideoMAEForVideoClassification
    - forward
