First commit

docs/INSTALL.md

0 → 100644

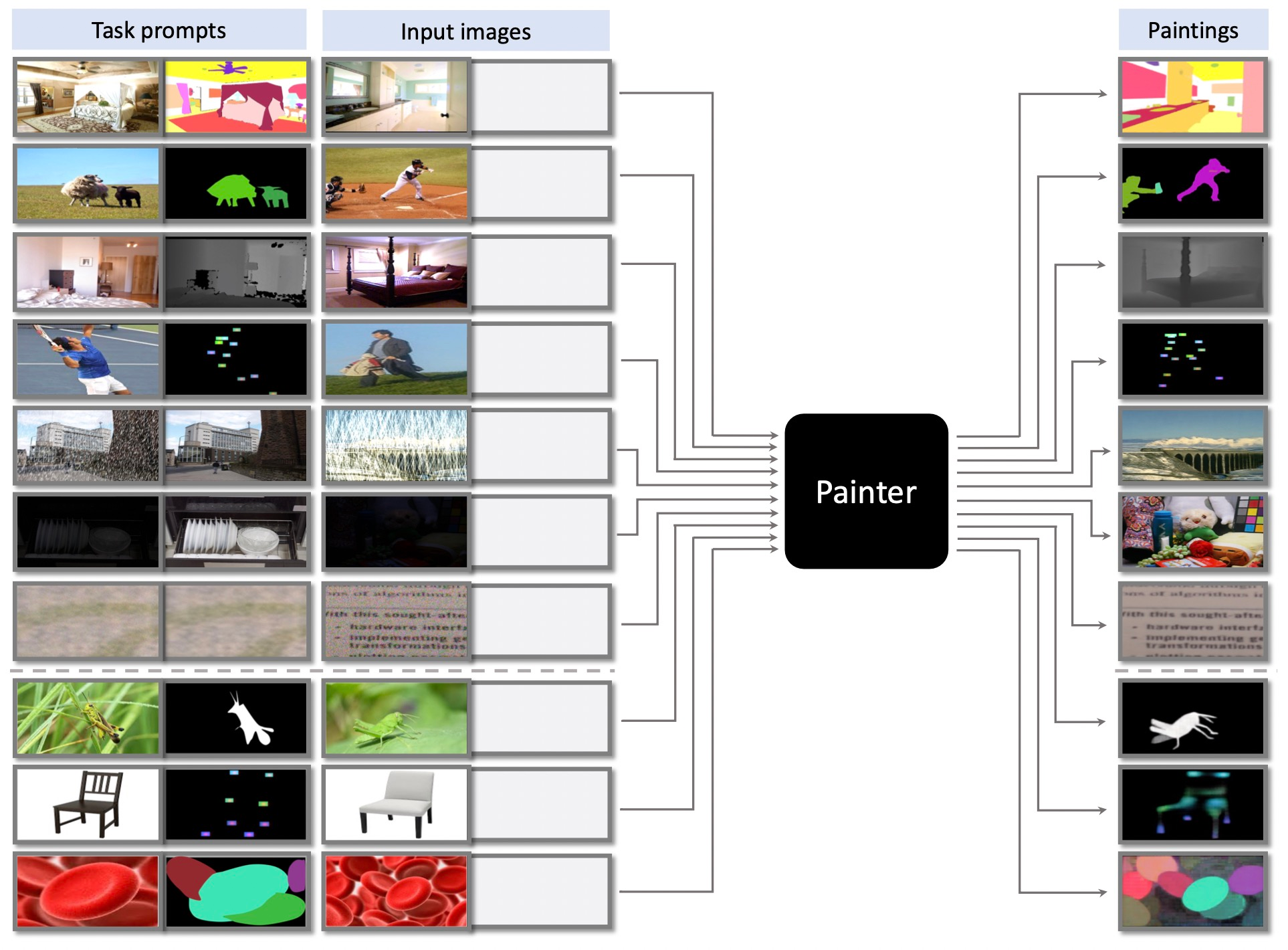

docs/teaser.jpg

0 → 100644

1.93 MB

engine_train.py

0 → 100644

eval/ade20k_semantic/eval.sh

0 → 100644

eval/coco_panoptic/eval.sh

0 → 100644