Merge branch 'master' into fix-xlnet-squad2.0

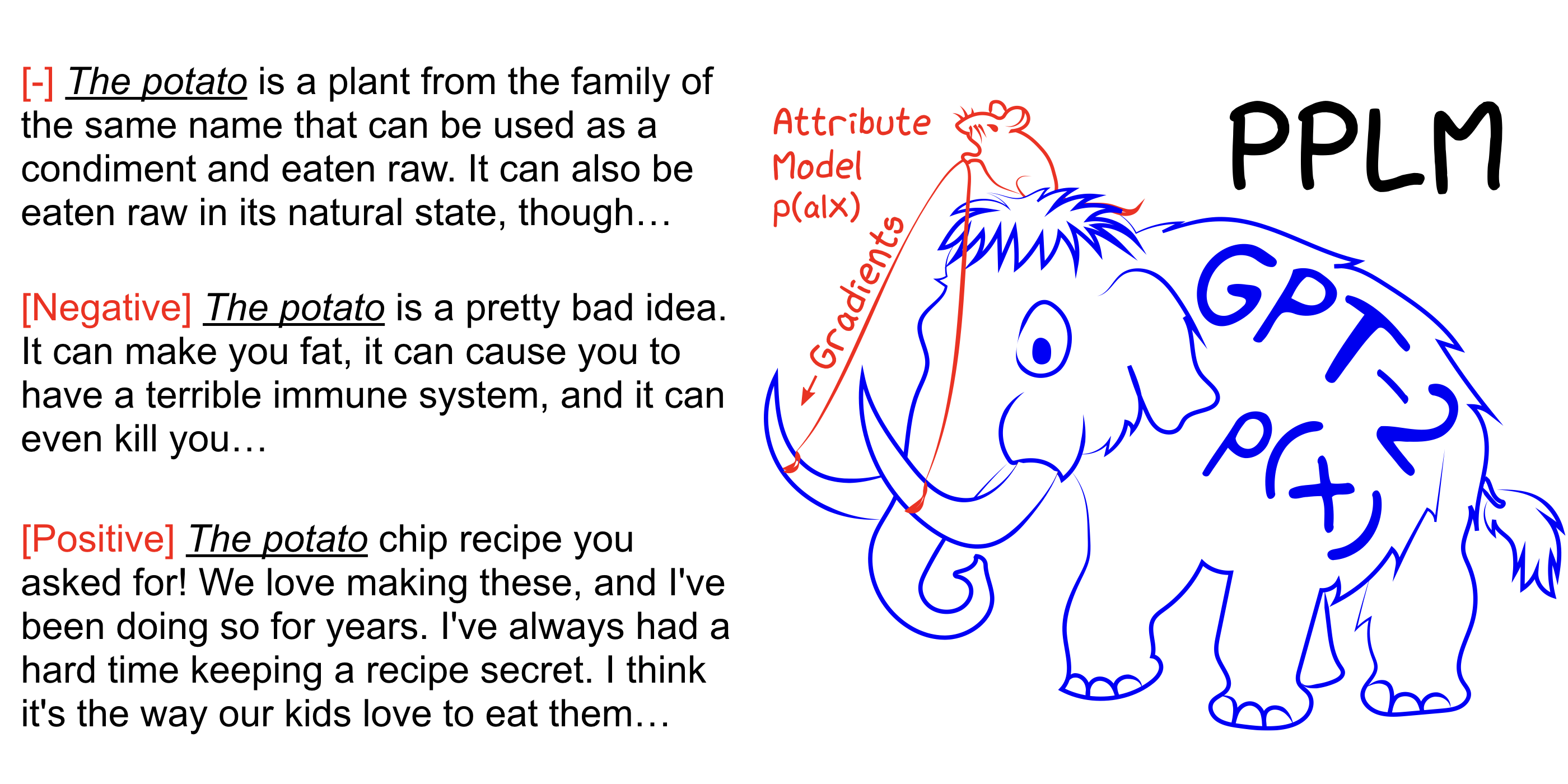

examples/pplm/README.md

0 → 100644

653 KB

examples/pplm/imgs/wooly.png

0 → 100644

664 KB

examples/pplm/run_pplm.py

0 → 100644

examples/run_tf_ner.py

0 → 100644

examples/run_xnli.py

0 → 100644