Initial commit.

This source diff could not be displayed because it is too large. You can view the blob instead.

comfy/sd2_clip.py

0 → 100644

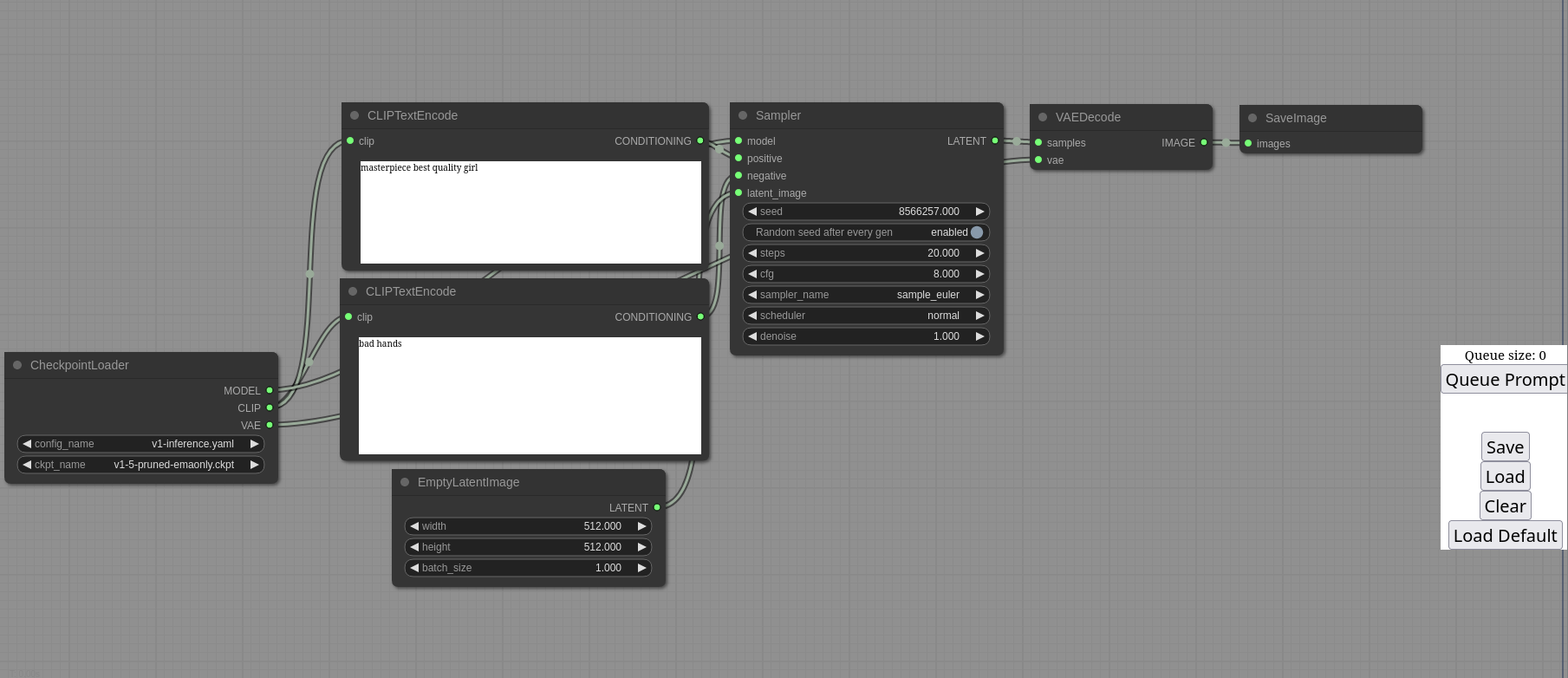

comfyui_screenshot.png

0 → 100644

115 KB

main.py

0 → 100644

models/vae/put_vae_here

0 → 100644

nodes.py

0 → 100644

requirements.txt

0 → 100644

webshit/index.html

0 → 100644

webshit/litegraph.core.js

0 → 100644

This source diff could not be displayed because it is too large. You can view the blob instead.

webshit/litegraph.css

0 → 100644