Initial commit

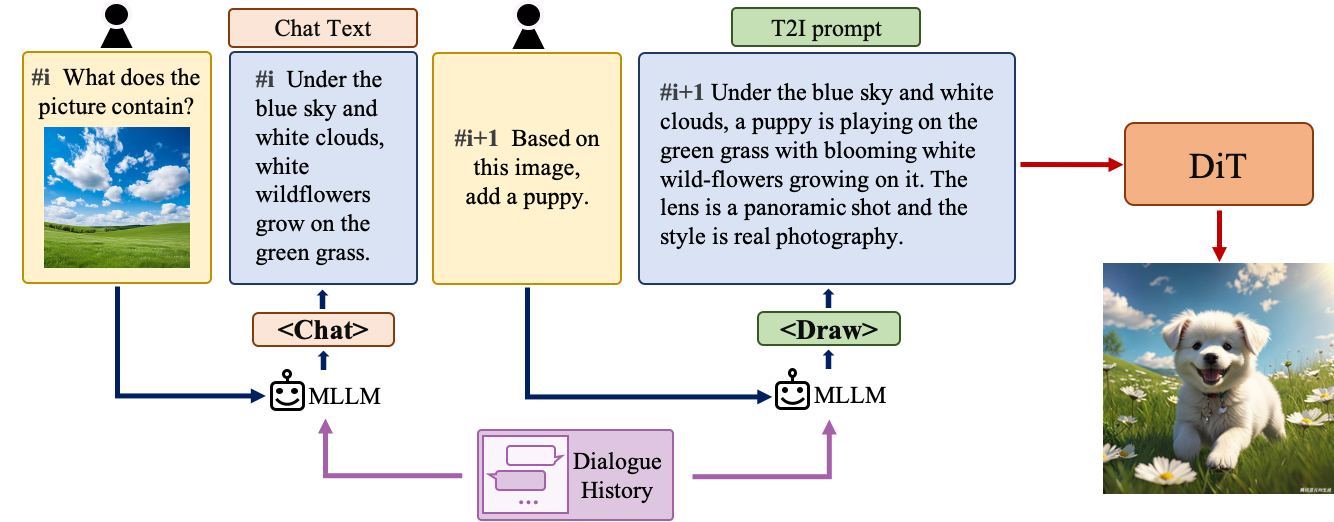

asset/mllm.png (new file, mode 0 → 100644, 290 KB)

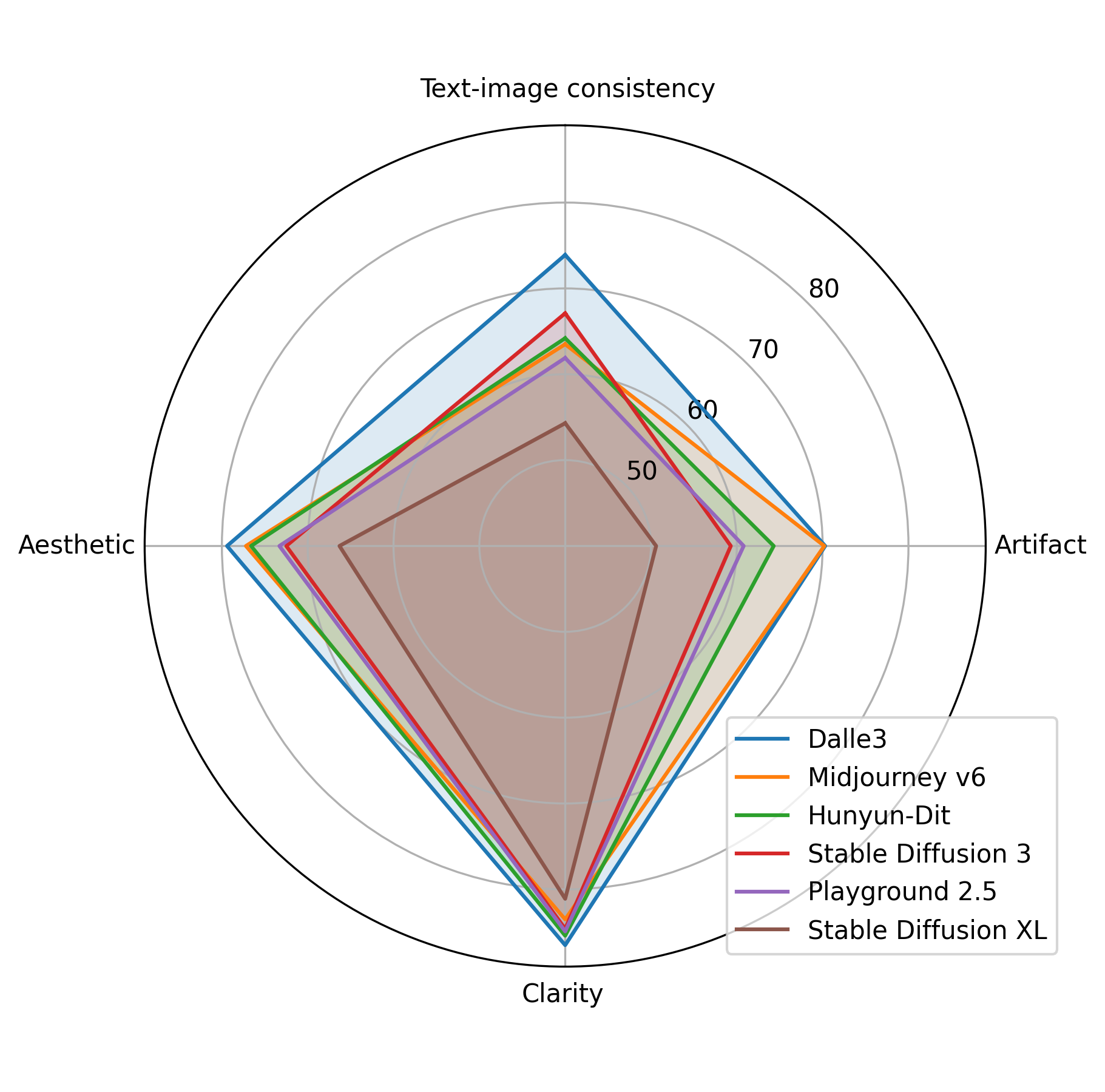

asset/radar.png (new file, mode 0 → 100644, 500 KB)

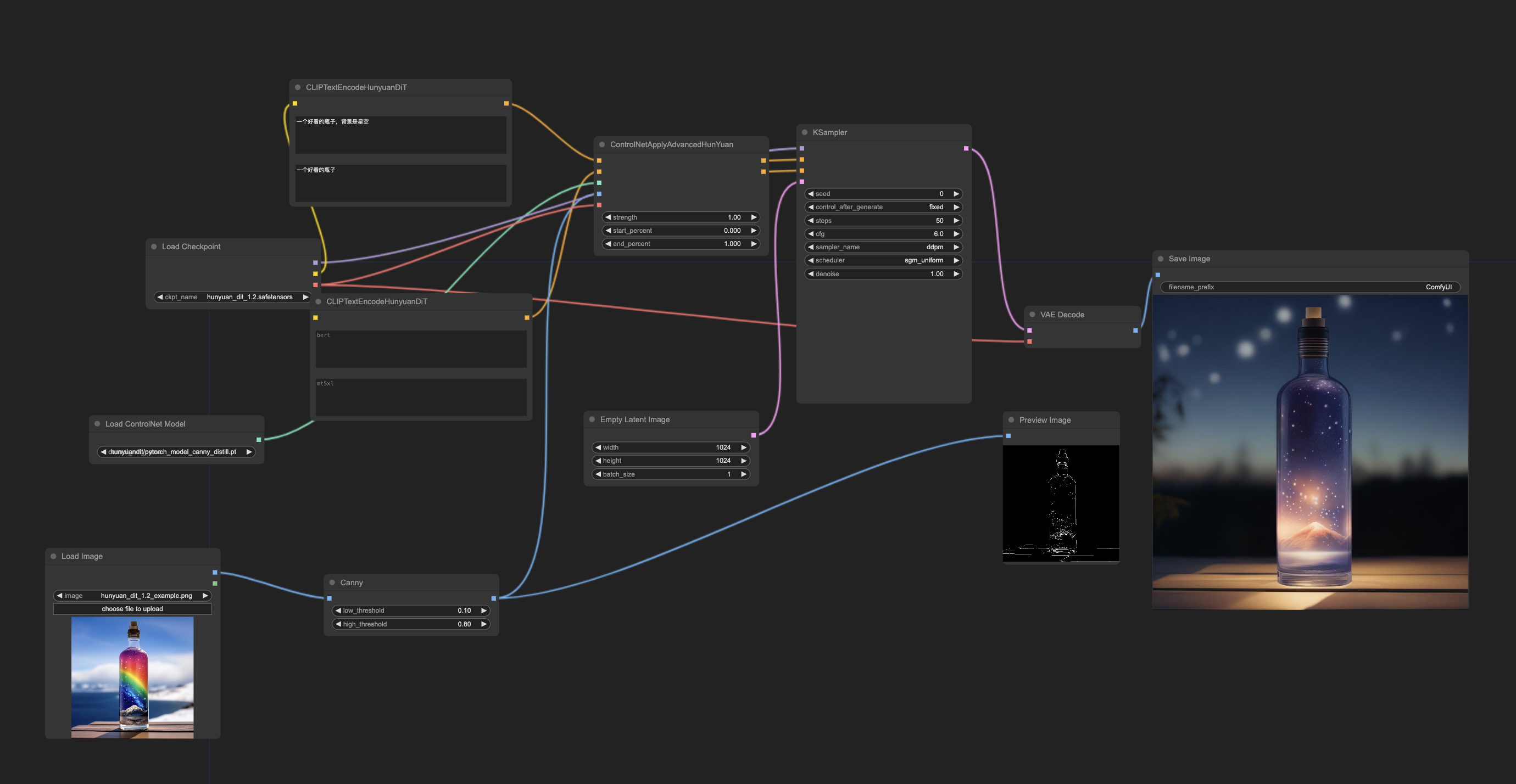

comfyui/README.md (new file, mode 0 → 100644)

controlnet/README.md (new file, mode 0 → 100644)

dataset/yamls/porcelain.yaml (new file, mode 0 → 100644)

diffusers/README.md (new file, mode 0 → 100644)

environment.yml (new file, mode 0 → 100644)

example_prompts.txt (new file, mode 0 → 100644)

hydit/__init__.py (new file, mode 0 → 100644)