docs: add docs for docs.ollama.com (#12805)

docs/images/welcome.png (new file, mode 100644, 233 KB)

186 KB

182 KB

146 KB

38.4 KB

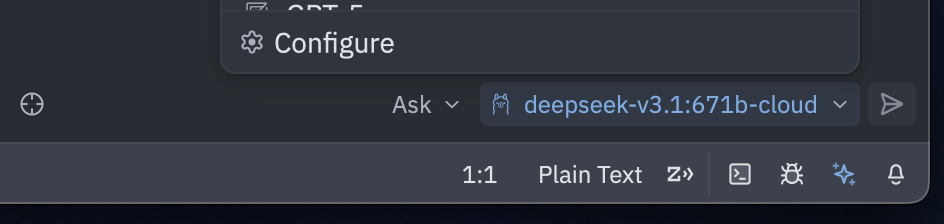

docs/images/zed-settings.png (new file, mode 100644, 57.1 KB)

docs/index.mdx (new file, mode 100644)

docs/integrations/cline.mdx (new file, mode 100644)

docs/integrations/codex.mdx (new file, mode 100644)

docs/integrations/droid.mdx (new file, mode 100644)

docs/integrations/goose.mdx (new file, mode 100644)

docs/integrations/n8n.mdx (new file, mode 100644)