Merge pull request #3029 from liuzhe-lz/v2.0-merge

Merge master into v2.0

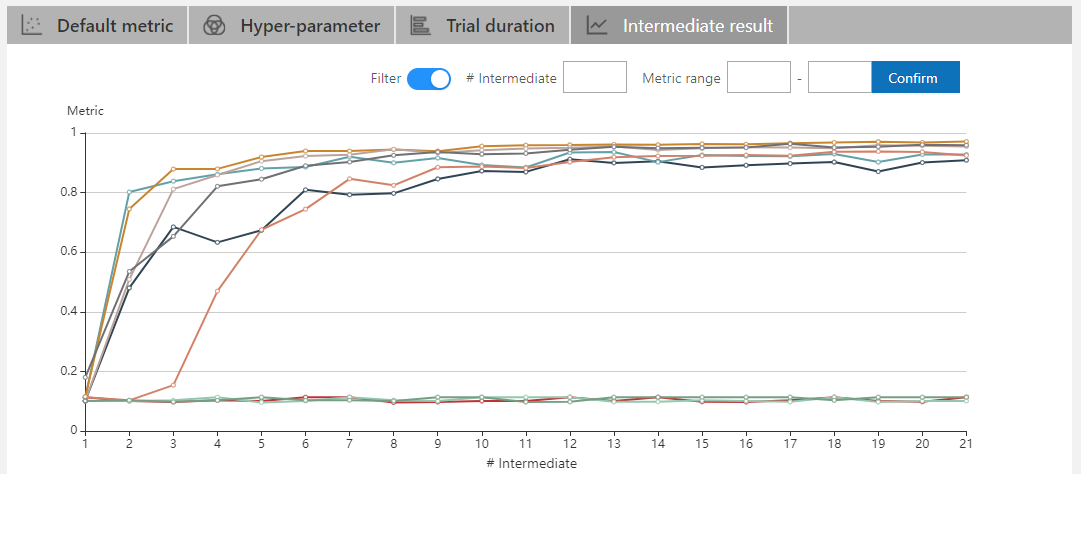

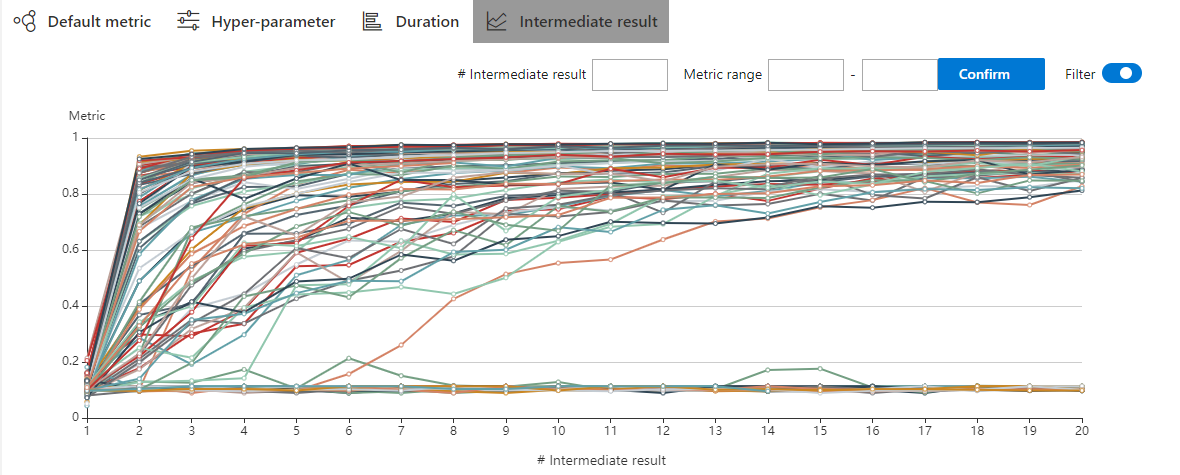

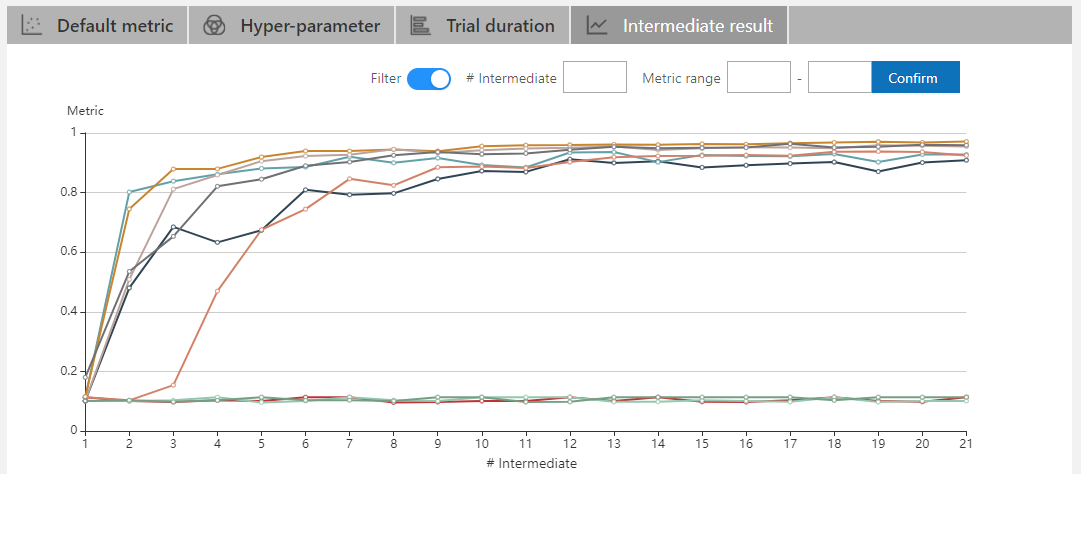

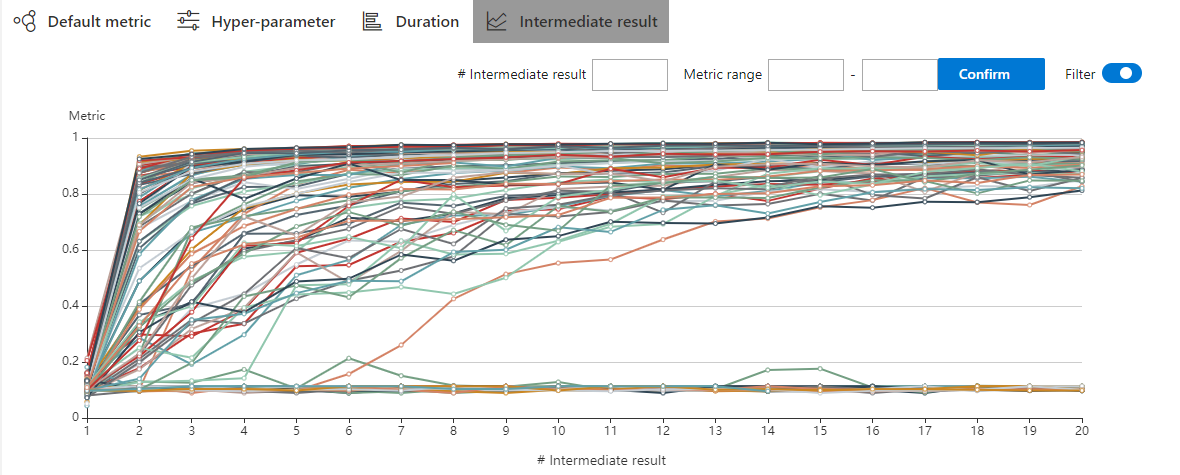
