
Neural Architecture Search Interface (#1103)

* Dev nas interface -- document (#1049)

* nas interface doc

* Dev nas compile -- code generator (#1067)

* finish code for parsing mutable_layers annotation and test code

* Dev nas interface -- update figures (#1070)

update figs

* update searchspace_generator (#1071)

* GeneralNasInterfaces.md: Fix a typo (#1079)

Signed-off-by: Ce Gao <gaoce@caicloud.io>

* add NAS example and fix bugs (#1083)

update searchspace_generator, add example, update NAS example

* fix bugs (#1108)

* Remove NAS example (#1116)

remove example

* update (#1119)

* Dev nas interface2 (#1121)

update doc

* Fix comment for pr of nas (#1122)

resolve comment

Showing

59.6 KB

44.7 KB

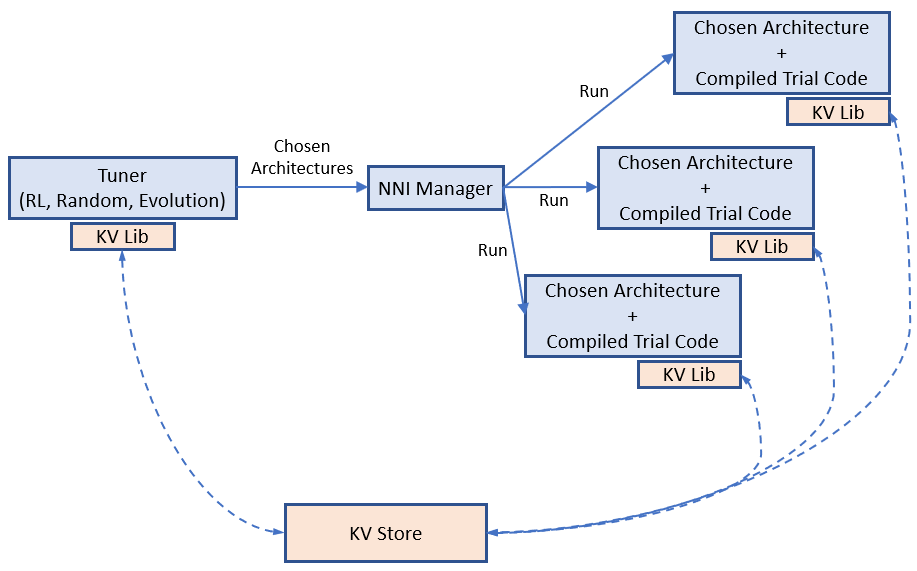

docs/img/example_enas.png

0 → 100644

133 KB

58.6 KB

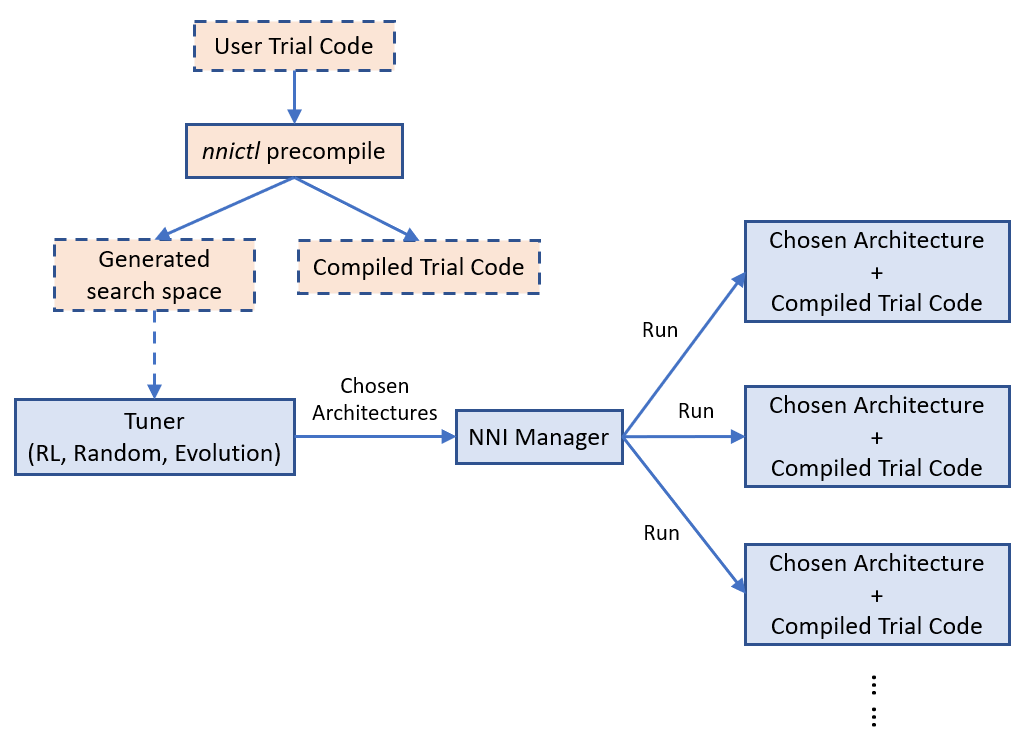

docs/img/nas_on_nni.png

0 → 100644

40.1 KB

28.9 KB

22.9 KB