Merge pull request #3023 from microsoft/v1.9

[do not squash!] merge v1.9 back to master

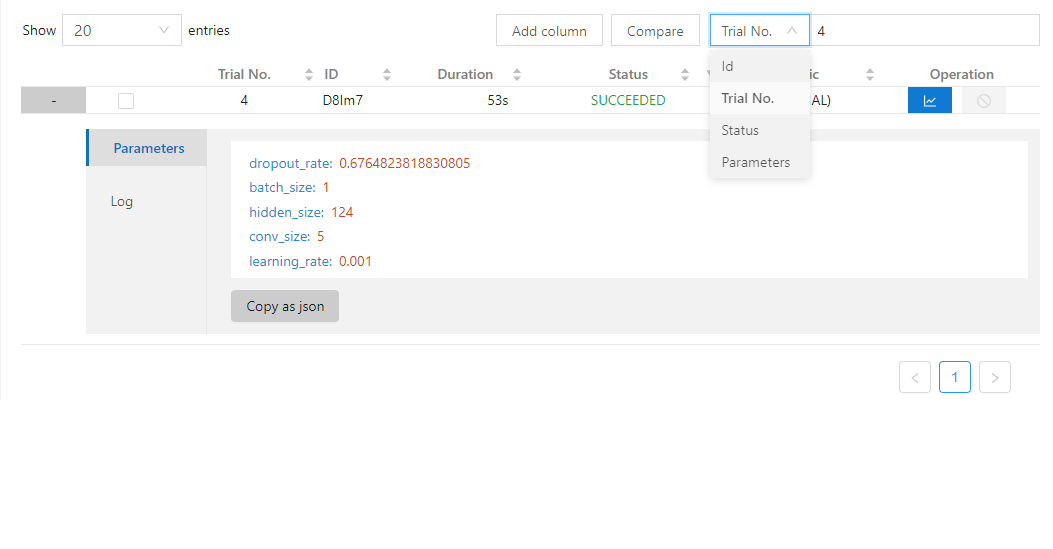

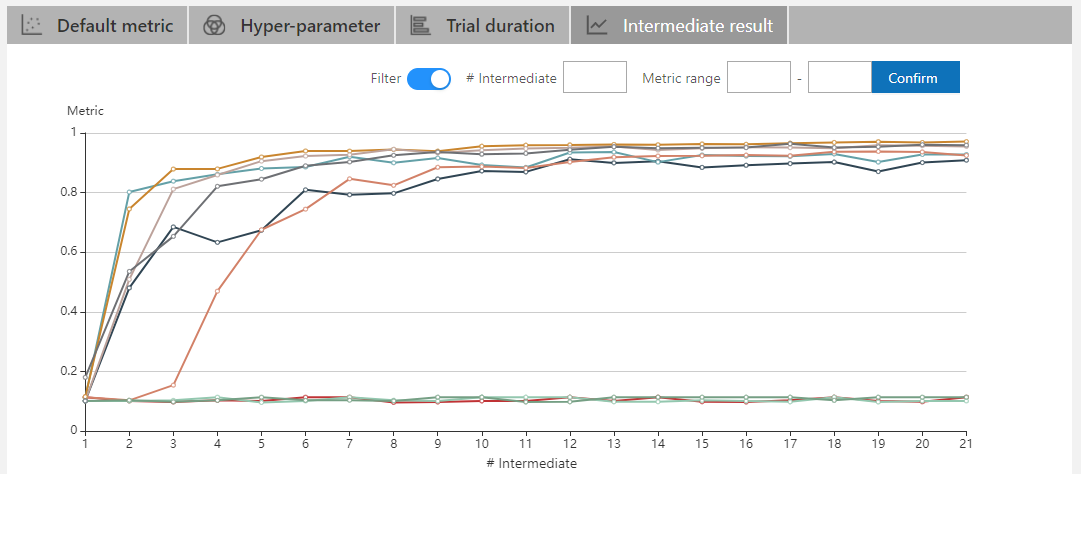

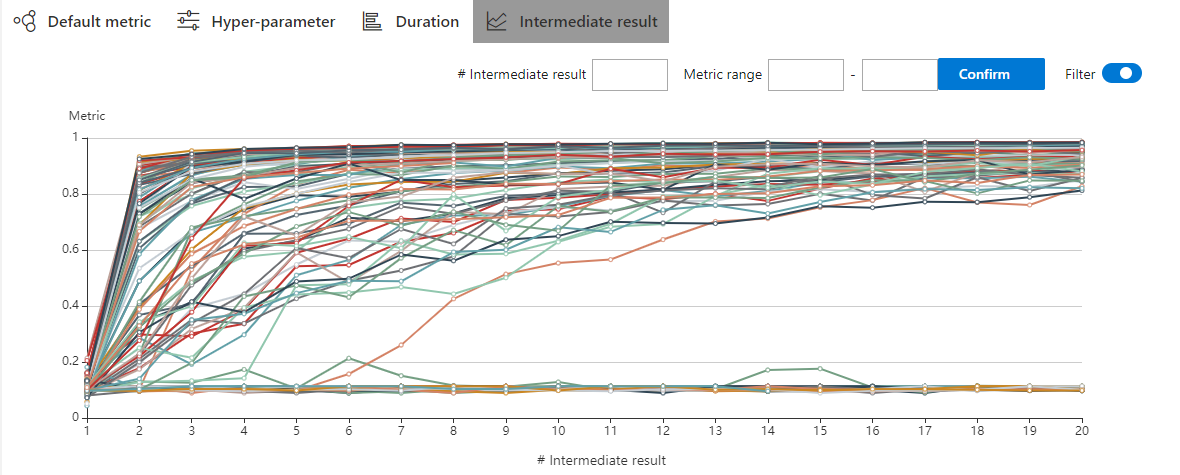

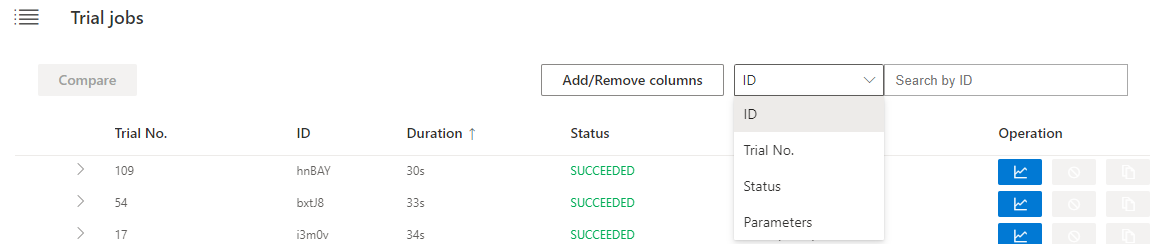

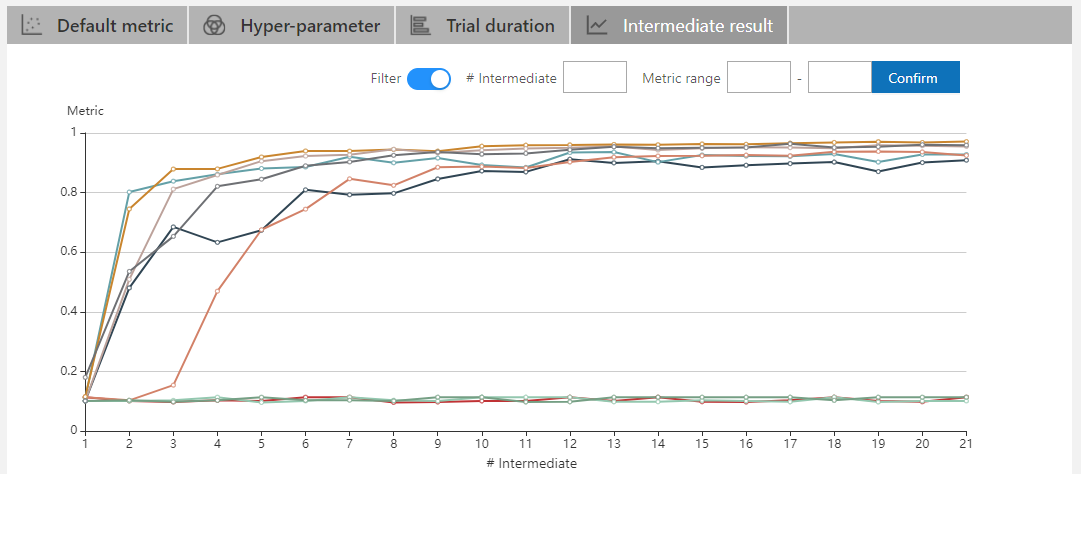

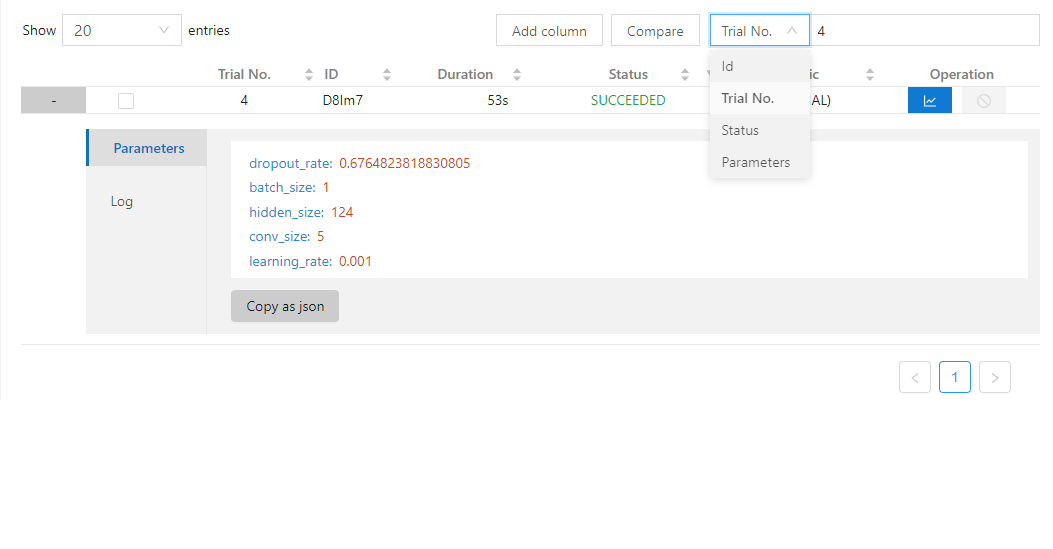

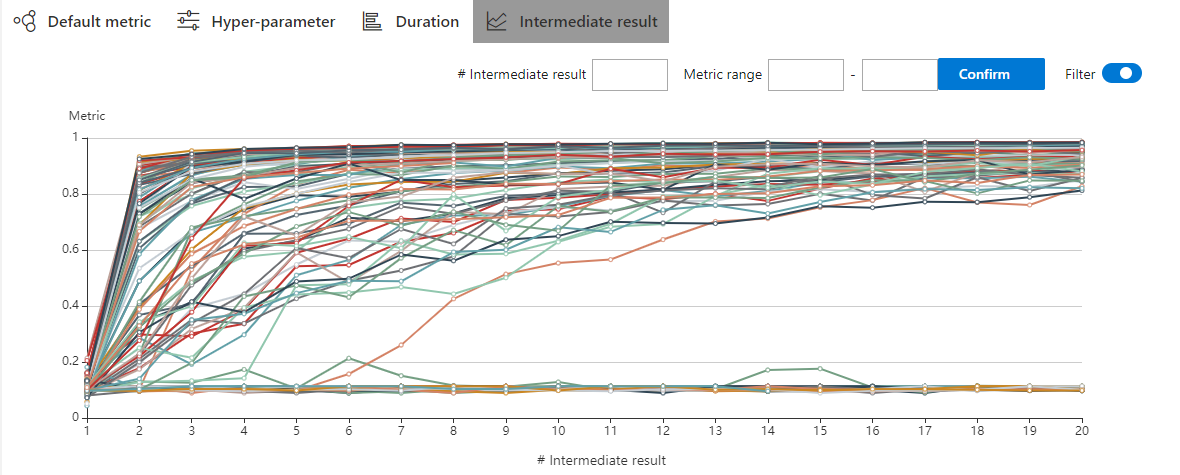

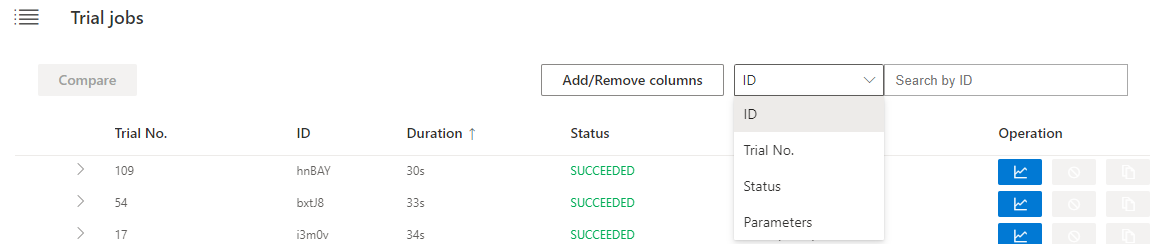